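Overview

This notebook shows how to compress a model using TensorFlow Compression.

In the example below, we compress the weights of an MNIST classifier to a much smaller size than their floating point representation, while retaining classification accuracy. This is done by a two-step process, based on the paper Scalable Model Compression by Entropy Penalized Reparameterization:

- Training a "compressible" model with an explicit entropy penalty during training, which encourages compressibility of the model parameters. The weight on this penalty, \(\lambda\), enables continuously controlling the trade-off between the compressed model size and its accuracy.

- Encoding the compressible model into a compressed model using a coding scheme that is matched with the penalty, meaning that the penalty is a good predictor for model size. This ensures that the method doesn't require multiple iterations of training, compressing, and re-training the model for fine-tuning.

This method is strictly concerned with compressed model size, not with computational complexity. It can be combined with a technique like model pruning to reduce both size and complexity.

Example compression results on various models: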

| Model (dataset) | Model size | Compression ratio | Top-1 error, compressed (uncompressed) |
|---|---|---|---|
| LeNet300-100 (MNIST) | 8.56 KB | 124x | 1.9% (1.6%) |
| LeNet5-Caffe (MNIST) | 2.84 KB | 606x | 1.0% (0.7%) |
| VGG-16 (CIFAR-10) | 101 KB | 590x | 10.0% (6.6%) |
| ResNet-20-4 (CIFAR-10) | 128 KB | 134x | 8.8% (5.0%) |
| ResNet-18 (ImageNet) | 1.97 MB | 24x | 30.0% (30.0%) |
| ResNet-50 (ImageNet) | 5.49 MB | 19x | 26.0% (25.0%) |

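Applications include:

- Deploying/broadcasting models to edge devices on a large scale, saving bandwidth in transit.

- Communicating global model state to clients in federated learning. The model architecture (number of hidden units, etc.) is unchanged from the initial model, and clients can continue learning on the decompressed model.

- Performing inference on extremely memory-limited clients. During inference, the weights of each layer can be sequentially decompressed, and discarded right after the activations are computed.

Setup

Install TensorFlow Compression via pip.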

# Installs the latest version of TFC compatible with the installed TF version.
read MAJOR MINOR <<< "$(pip show tensorflow | perl -p -0777 -e 's/.*Version: (\d+)\.(\d+).*/\1 \2/sg')"
pip install "tensorflow-compression<$MAJOR.$(($MINOR+1))"


Import library dependencies.

import matplotlib.pyplot as plt

import tensorflow as tf

import tensorflow_compression as tfc

import tensorflow_datasets as tfds


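Define and train a basic MNIST classifier

In order to effectively compress dense and convolutional layers, we need to define custom layer classes. These are analogous to the layers under tf.keras.layers, but we will subclass them later to effectively implement Entropy Penalized Reparameterization (EPR). For this purpose, we also add a copy constructor.

First, we define a standard dense layer: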

class CustomDense(tf.keras.layers.Layer):

  def __init__(self, filters, name="dense"):
    super().__init__(name=name)
    self.filters = filters

  @classmethod
  def copy(cls, other, **kwargs):
    """Returns an instantiated and built layer, initialized from `other`."""
    self = cls(filters=other.filters, name=other.name, **kwargs)
    self.build(None, other=other)
    return self

  def build(self, input_shape, other=None):
    """Instantiates weights, optionally initializing them from `other`."""
    if other is None:
      kernel_shape = (input_shape[-1], self.filters)
      kernel = tf.keras.initializers.GlorotUniform()(shape=kernel_shape)
      bias = tf.keras.initializers.Zeros()(shape=(self.filters,))
    else:
      kernel, bias = other.kernel, other.bias
    self.kernel = tf.Variable(
        tf.cast(kernel, self.variable_dtype), name="kernel")
    self.bias = tf.Variable(
        tf.cast(bias, self.variable_dtype), name="bias")
    self.built = True

  def call(self, inputs):
    outputs = tf.linalg.matvec(self.kernel, inputs, transpose_a=True)
    outputs = tf.nn.bias_add(outputs, self.bias)
    return tf.nn.leaky_relu(outputs)

Similarly, a 2D convolutional layer:

class CustomConv2D(tf.keras.layers.Layer):

  def __init__(self, filters, kernel_size,
               strides=1, padding="SAME", name="conv2d"):
    super().__init__(name=name)
    self.filters = filters
    self.kernel_size = kernel_size
    self.strides = strides
    self.padding = padding

  @classmethod
  def copy(cls, other, **kwargs):
    """Returns an instantiated and built layer, initialized from `other`."""
    self = cls(filters=other.filters, kernel_size=other.kernel_size,
               strides=other.strides, padding=other.padding, name=other.name,
               **kwargs)
    self.build(None, other=other)
    return self

  def build(self, input_shape, other=None):
    """Instantiates weights, optionally initializing them from `other`."""
    if other is None:
      kernel_shape = 2 * (self.kernel_size,) + (input_shape[-1], self.filters)
      kernel = tf.keras.initializers.GlorotUniform()(shape=kernel_shape)
      bias = tf.keras.initializers.Zeros()(shape=(self.filters,))
    else:
      kernel, bias = other.kernel, other.bias
    self.kernel = tf.Variable(
        tf.cast(kernel, self.variable_dtype), name="kernel")
    self.bias = tf.Variable(
        tf.cast(bias, self.variable_dtype), name="bias")
    self.built = True

  def call(self, inputs):
    outputs = tf.nn.convolution(
        inputs, self.kernel, strides=self.strides, padding=self.padding)
    outputs = tf.nn.bias_add(outputs, self.bias)
    return tf.nn.leaky_relu(outputs)

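Before we continue with model compression, let's check that we can successfully train a regular classifier.

Define the model architecture: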

classifier = tf.keras.Sequential([
    CustomConv2D(20, 5, strides=2, name="conv_1"),
    CustomConv2D(50, 5, strides=2, name="conv_2"),
    tf.keras.layers.Flatten(),
    CustomDense(500, name="fc_1"),
    CustomDense(10, name="fc_2"),
], name="classifier")


Load the training data:

def normalize_img(image, label):
  """Normalizes images: `uint8` -> `float32`."""
  return tf.cast(image, tf.float32) / 255., label

training_dataset, validation_dataset = tfds.load(
    "mnist",
    split=["train", "test"],
    shuffle_files=True,
    as_supervised=True,
    with_info=False,
)
training_dataset = training_dataset.map(normalize_img)
validation_dataset = validation_dataset.map(normalize_img)

Finally, train the model:

def train_model(model, training_data, validation_data, **kwargs):
  model.compile(
      optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
      loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
      metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
      # Uncomment this to ease debugging:
      # run_eagerly=True,
  )
  kwargs.setdefault("epochs", 5)
  kwargs.setdefault("verbose", 1)
  log = model.fit(
      training_data.batch(128).prefetch(8),
      validation_data=validation_data.batch(128).cache(),
      validation_freq=1,
      **kwargs,
  )
  return log.history["val_sparse_categorical_accuracy"][-1]

classifier_accuracy = train_model(
    classifier, training_dataset, validation_dataset)
print(f"Accuracy: {classifier_accuracy:0.4f}")

Epoch 1/5
469/469 [==============================] - 51s 106ms/step - loss: 0.2019 - sparse_categorical_accuracy: 0.9396 - val_loss: 0.0729 - val_sparse_categorical_accuracy: 0.9771
Epoch 2/5
469/469 [==============================] - 49s 105ms/step - loss: 0.0638 - sparse_categorical_accuracy: 0.9806 - val_loss: 0.0647 - val_sparse_categorical_accuracy: 0.9774
Epoch 3/5
469/469 [==============================] - 49s 105ms/step - loss: 0.0440 - sparse_categorical_accuracy: 0.9864 - val_loss: 0.0518 - val_sparse_categorical_accuracy: 0.9828
Epoch 4/5
469/469 [==============================] - 49s 105ms/step - loss: 0.0323 - sparse_categorical_accuracy: 0.9904 - val_loss: 0.0564 - val_sparse_categorical_accuracy: 0.9830
Epoch 5/5
469/469 [==============================] - 49s 105ms/step - loss: 0.0258 - sparse_categorical_accuracy: 0.9919 - val_loss: 0.0611 - val_sparse_categorical_accuracy: 0.9840
Accuracy: 0.9840

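Success! The model trains well and reaches an accuracy of over 98% on the validation set within 5 epochs.

Train a compressible classifier

Entropy Penalized Reparameterization (EPR) has two main ingredients:

- Applying a penalty to the model weights during training which corresponds to their entropy under a probabilistic model, and which is matched with the encoding scheme of the weights. Below, we define a Keras Regularizer which implements this penalty.

- Reparameterizing the weights, i.e. bringing them into a latent representation which is more compressible (yields a better trade-off between compressibility and model performance). For convolutional kernels, it has been shown that the Fourier domain is a good representation. For other parameters, the example below simply uses scalar quantization (rounding) with a varying quantization step size.

First, define the penalty.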

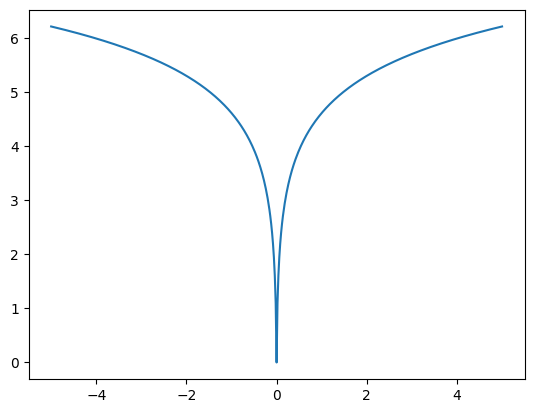

The example below uses a code/probabilistic model implemented in the tfc.PowerLawEntropyModel class, inspired by the paper Optimizing the Communication-Accuracy Trade-off in Federated Learning with Rate-Distortion Theory. The penalty is defined as:

\[ \log \Bigl(\frac {|x| + \alpha} \alpha\Bigr), \]

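where \(x\) is one element of the model parameter or its latent representation, and \(\alpha\) is a small constant for numerical stability around values of 0.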
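To make the formula concrete, the following minimal sketch evaluates the per-element penalty in plain Python. The function name `power_law_penalty` and the value `alpha=0.01` are assumptions chosen for illustration; they are not taken from the tfc implementation, which may also sum or scale the terms differently.

```python
import math

def power_law_penalty(x, alpha=0.01):
    """Per-element penalty log((|x| + alpha) / alpha); alpha is an assumed value."""
    return math.log((abs(x) + alpha) / alpha)

# The penalty is zero at x = 0, symmetric in x, and grows only logarithmically.
for x in (0.0, 0.01, 0.1, 1.0, 5.0):
    print(f"x={x:5.2f}  penalty={power_law_penalty(x):.3f}")
```

Moving a value from 0 to 1 costs far more than moving it from 1 to 2, which is the concave shape that the plot produced by the next cell visualizes.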

_ = tf.linspace(-5., 5., 501)

plt.plot(_, tfc.PowerLawEntropyModel(0).penalty(_));

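The penalty is effectively a regularization loss (sometimes called "weight loss"). The fact that it is concave with a cusp at zero encourages weight sparsity. The coding scheme applied for compressing the weights, an Elias gamma code, produces codes of length \( 1 + \lfloor \log_2 |x| \rfloor \) bits for the magnitude of the element. That is, it is matched to the penalty, and applying the penalty thus minimizes the expected code length.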
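As a rough illustration of why a logarithmic penalty tracks code length, here is a sketch of the textbook Elias gamma code for positive integers. In that construction, the binary representation of n, which has 1 + ⌊log2 n⌋ bits, is preceded by ⌊log2 n⌋ zeros. The function names are hypothetical, and this notebook does not show how tfc.PowerLawEntropyModel handles signs, zeros, or bit packing, so this should not be read as its implementation.

```python
def elias_gamma_encode(n):
    """Textbook Elias gamma code of a positive integer, as a string of bits."""
    if n < 1:
        raise ValueError("Elias gamma is defined for positive integers only")
    binary = bin(n)[2:]  # 1 + floor(log2(n)) bits
    return "0" * (len(binary) - 1) + binary

def elias_gamma_decode(bits):
    """Decodes a single Elias gamma codeword back into an integer."""
    zeros = len(bits) - len(bits.lstrip("0"))
    return int(bits[zeros:2 * zeros + 1], 2)

for n in (1, 2, 5, 17, 100):
    code = elias_gamma_encode(n)
    print(n, code, len(code))
```

The codeword length increases by a fixed amount each time the magnitude doubles, which is the same logarithmic growth as the penalty.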

class PowerLawRegularizer(tf.keras.regularizers.Regularizer):

  def __init__(self, lmbda):
    super().__init__()
    self.lmbda = lmbda

  def __call__(self, variable):
    em = tfc.PowerLawEntropyModel(coding_rank=variable.shape.rank)
    return self.lmbda * em.penalty(variable)

# Normalizing the weight of the penalty by the number of model parameters is a
# good rule of thumb to produce comparable results across models.
regularizer = PowerLawRegularizer(lmbda=2./classifier.count_params())
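For a sense of scale, the normalization can be worked out by hand for the classifier defined earlier. The sketch below assumes 28×28×1 MNIST inputs and "SAME" padding, so that each stride-2 convolution halves the spatial size (rounding up); the helper names are illustrative only.

```python
import math

def conv_params(kernel_size, in_channels, filters):
    # Kernel of shape (H, W, I, O) plus one bias per filter.
    return kernel_size * kernel_size * in_channels * filters + filters

def dense_params(in_features, filters):
    # Kernel of shape (in_features, filters) plus one bias per unit.
    return in_features * filters + filters

size = 28
size = math.ceil(size / 2)  # after conv_1 (stride 2, SAME): 14
size = math.ceil(size / 2)  # after conv_2 (stride 2, SAME): 7
flat = size * size * 50     # Flatten output feeding fc_1

num_params = (
    conv_params(5, 1, 20)
    + conv_params(5, 20, 50)
    + dense_params(flat, 500)
    + dense_params(500, 10)
)
lmbda = 2.0 / num_params
print(num_params, lmbda)
```

Under these assumptions the model has 1,256,080 parameters, so the per-element penalty weight is roughly 1.6e-6. At 4 bytes per float32 value this count corresponds to 5,024,320 bytes, which is consistent with the original weight size reported later in this notebook.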

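Second, define subclasses of CustomDense and CustomConv2D which have the following additional functionality:

- They take an instance of the above regularizer and apply it to the kernels and biases during training.

- They define kernel and bias as a @property, which performs quantization with straight-through gradients whenever the variables are accessed. This accurately reflects the computation that is carried out later in the compressed model.

- They define additional log_step variables, which represent the logarithm of the quantization step size. The coarser the quantization, the smaller the model size, but the lower the accuracy. The quantization step sizes are trainable for each model parameter, so that optimizing the penalized loss function determines which quantization step size is best.

The quantization step is defined as follows: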

def quantize(latent, log_step):
  step = tf.exp(log_step)
  return tfc.round_st(latent / step) * step
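The forward pass of quantize is plain rounding to a grid whose spacing is exp(log_step). The following sketch reproduces that forward computation for single Python floats; it does not model the straight-through gradient that tfc.round_st supplies during training, and `quantize_scalar` is a hypothetical name.

```python
import math

def quantize_scalar(latent, log_step):
    """Rounds `latent` to the nearest multiple of exp(log_step)."""
    step = math.exp(log_step)
    return round(latent / step) * step

value = 0.1234
for log_step in (-4.0, -2.0, 0.0):
    q = quantize_scalar(value, log_step)
    print(f"log_step={log_step:+.1f}  step={math.exp(log_step):.4f}  quantized={q:.4f}")
```

A larger log_step means a coarser grid: with log_step = 0 the step is 1 and the value 0.1234 collapses to zero, while with log_step = -4 (the initial value used in the layers below) it stays close to the original.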

With that, we can define the dense layer:

class CompressibleDense(CustomDense):

  def __init__(self, regularizer, *args, **kwargs):
    super().__init__(*args, **kwargs)
    self.regularizer = regularizer

  def build(self, input_shape, other=None):
    """Instantiates weights, optionally initializing them from `other`."""
    super().build(input_shape, other=other)
    if other is not None and hasattr(other, "kernel_log_step"):
      kernel_log_step = other.kernel_log_step
      bias_log_step = other.bias_log_step
    else:
      kernel_log_step = bias_log_step = -4.
    self.kernel_log_step = tf.Variable(
        tf.cast(kernel_log_step, self.variable_dtype), name="kernel_log_step")
    self.bias_log_step = tf.Variable(
        tf.cast(bias_log_step, self.variable_dtype), name="bias_log_step")
    self.add_loss(lambda: self.regularizer(
        self.kernel_latent / tf.exp(self.kernel_log_step)))
    self.add_loss(lambda: self.regularizer(
        self.bias_latent / tf.exp(self.bias_log_step)))

  @property
  def kernel(self):
    return quantize(self.kernel_latent, self.kernel_log_step)

  @kernel.setter
  def kernel(self, kernel):
    self.kernel_latent = tf.Variable(kernel, name="kernel_latent")

  @property
  def bias(self):
    return quantize(self.bias_latent, self.bias_log_step)

  @bias.setter
  def bias(self, bias):
    self.bias_latent = tf.Variable(bias, name="bias_latent")

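The convolutional layers are analogous. In addition, the convolution kernel is stored as its real-valued discrete Fourier transform (RDFT) whenever the kernel is set, and the transform is inverted whenever the kernel is used. Since the different frequency components of the kernel tend to be more or less compressible, each of them gets its own quantization step size.

Define the Fourier transform and its inverse as follows: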

def to_rdft(kernel, kernel_size):
  # The kernel has shape (H, W, I, O) -> transpose to take DFT over last two
  # dimensions.
  kernel = tf.transpose(kernel, (2, 3, 0, 1))
  # The RDFT has type complex64 and shape (I, O, FH, FW).
  kernel_rdft = tf.signal.rfft2d(kernel)
  # Map real and imaginary parts into regular floats. The result is float32
  # and has shape (I, O, FH, FW, 2).
  kernel_rdft = tf.stack(
      [tf.math.real(kernel_rdft), tf.math.imag(kernel_rdft)], axis=-1)
  # Divide by kernel size to make the DFT orthonormal (length-preserving).
  return kernel_rdft / kernel_size

def from_rdft(kernel_rdft, kernel_size):
  # Undoes the transformations in to_rdft.
  kernel_rdft *= kernel_size
  kernel_rdft = tf.dtypes.complex(*tf.unstack(kernel_rdft, axis=-1))
  kernel = tf.signal.irfft2d(kernel_rdft, fft_length=2 * (kernel_size,))
  return tf.transpose(kernel, (2, 3, 0, 1))
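The division by kernel_size in to_rdft can be checked numerically. For a K×K kernel, the unnormalized 2D DFT scales the total energy by K², so dividing the coefficients by K preserves it. The sketch below verifies this with a naive full DFT in plain Python rather than tf.signal.rfft2d, which keeps only K // 2 + 1 entries along the last axis because the remaining ones follow from conjugate symmetry. All names are illustrative.

```python
import cmath
import random

def dft2d(x):
    """Naive unnormalized 2D DFT of a square list of lists."""
    k = len(x)
    return [[sum(x[m][n] * cmath.exp(-2j * cmath.pi * (u * m + v * n) / k)
                 for m in range(k) for n in range(k))
             for v in range(k)]
            for u in range(k)]

random.seed(0)
K = 5  # kernel size used by the convolutional layers in this notebook
kernel = [[random.gauss(0.0, 1.0) for _ in range(K)] for _ in range(K)]

spectrum = dft2d(kernel)
energy_spatial = sum(v * v for row in kernel for v in row)
energy_scaled = sum(abs(c / K) ** 2 for row in spectrum for c in row)
print(energy_spatial, energy_scaled)

# Shape of the real-valued latent per (input, output) channel pair:
# (FH, FW, 2), with real and imaginary parts stacked on the last axis.
rdft_shape = (K, K // 2 + 1, 2)
print(rdft_shape)
```

Because the scaled transform preserves length, a given quantization step introduces an error of comparable magnitude in the frequency domain and in the spatial domain.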

With that, define the convolutional layer as:

class CompressibleConv2D(CustomConv2D):

  def __init__(self, regularizer, *args, **kwargs):
    super().__init__(*args, **kwargs)
    self.regularizer = regularizer

  def build(self, input_shape, other=None):
    """Instantiates weights, optionally initializing them from `other`."""
    super().build(input_shape, other=other)
    if other is not None and hasattr(other, "kernel_log_step"):
      kernel_log_step = other.kernel_log_step
      bias_log_step = other.bias_log_step
    else:
      kernel_log_step = tf.fill(self.kernel_latent.shape[2:], -4.)
      bias_log_step = -4.
    self.kernel_log_step = tf.Variable(
        tf.cast(kernel_log_step, self.variable_dtype), name="kernel_log_step")
    self.bias_log_step = tf.Variable(
        tf.cast(bias_log_step, self.variable_dtype), name="bias_log_step")
    self.add_loss(lambda: self.regularizer(
        self.kernel_latent / tf.exp(self.kernel_log_step)))
    self.add_loss(lambda: self.regularizer(
        self.bias_latent / tf.exp(self.bias_log_step)))

  @property
  def kernel(self):
    kernel_rdft = quantize(self.kernel_latent, self.kernel_log_step)
    return from_rdft(kernel_rdft, self.kernel_size)

  @kernel.setter
  def kernel(self, kernel):
    kernel_rdft = to_rdft(kernel, self.kernel_size)
    self.kernel_latent = tf.Variable(kernel_rdft, name="kernel_latent")

  @property
  def bias(self):
    return quantize(self.bias_latent, self.bias_log_step)

  @bias.setter
  def bias(self, bias):
    self.bias_latent = tf.Variable(bias, name="bias_latent")

Define a classifier model with the same architecture as above, but using these modified layers:

def make_mnist_classifier(regularizer):
  return tf.keras.Sequential([
      CompressibleConv2D(regularizer, 20, 5, strides=2, name="conv_1"),
      CompressibleConv2D(regularizer, 50, 5, strides=2, name="conv_2"),
      tf.keras.layers.Flatten(),
      CompressibleDense(regularizer, 500, name="fc_1"),
      CompressibleDense(regularizer, 10, name="fc_2"),
  ], name="classifier")

compressible_classifier = make_mnist_classifier(regularizer)

And train the model:

penalized_accuracy = train_model(
    compressible_classifier, training_dataset, validation_dataset)
print(f"Accuracy: {penalized_accuracy:0.4f}")

Epoch 1/5
469/469 [==============================] - 55s 113ms/step - loss: 3.7981 - sparse_categorical_accuracy: 0.9287 - val_loss: 2.1786 - val_sparse_categorical_accuracy: 0.9713
Epoch 2/5
469/469 [==============================] - 53s 112ms/step - loss: 1.6769 - sparse_categorical_accuracy: 0.9763 - val_loss: 1.3201 - val_sparse_categorical_accuracy: 0.9791
Epoch 3/5
469/469 [==============================] - 53s 113ms/step - loss: 1.0883 - sparse_categorical_accuracy: 0.9836 - val_loss: 0.9567 - val_sparse_categorical_accuracy: 0.9826
Epoch 4/5
469/469 [==============================] - 53s 113ms/step - loss: 0.8137 - sparse_categorical_accuracy: 0.9865 - val_loss: 0.7832 - val_sparse_categorical_accuracy: 0.9835
Epoch 5/5
469/469 [==============================] - 53s 113ms/step - loss: 0.6752 - sparse_categorical_accuracy: 0.9881 - val_loss: 0.7038 - val_sparse_categorical_accuracy: 0.9836
Accuracy: 0.9836

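The compressible model has reached a similar accuracy as the plain classifier.

However, the model is not actually compressed yet. To do this, we define another set of subclasses which store the kernels and biases in their compressed form, as a sequence of bits.

Compress the classifier

The subclasses of CustomDense and CustomConv2D defined below convert the weights of a compressible layer into binary strings. In addition, they store the logarithm of the quantization step size at half precision to save space. Whenever the kernel or bias is accessed through the @property, it is decompressed from its string representation and dequantized.

First, define functions to compress and decompress a model parameter: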

def compress_latent(latent, log_step, name):
  em = tfc.PowerLawEntropyModel(latent.shape.rank)
  compressed = em.compress(latent / tf.exp(log_step))
  compressed = tf.Variable(compressed, name=f"{name}_compressed")
  log_step = tf.cast(log_step, tf.float16)
  log_step = tf.Variable(log_step, name=f"{name}_log_step")
  return compressed, log_step

def decompress_latent(compressed, shape, log_step):
  latent = tfc.PowerLawEntropyModel(len(shape)).decompress(compressed, shape)
  step = tf.exp(tf.cast(log_step, latent.dtype))
  return latent * step
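The effect of storing log_step at half precision can be explored without TensorFlow. The sketch below assumes that the entropy model rounds its input to integers before coding (the notebook does not show this step), skips the actual bit coding, and uses hypothetical values for the latent weight and the trained log step. The `struct` format character "e" is Python's IEEE 754 half-precision type.

```python
import math
import struct

def to_float16(value):
    """Rounds a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

log_step = -3.8765  # hypothetical trained log step
latent = 0.4321     # hypothetical latent weight

step = math.exp(log_step)
index = round(latent / step)  # integer symbol that would be entropy coded

# After decompression, the step is rebuilt from the float16 copy of log_step.
step16 = math.exp(to_float16(log_step))
restored = index * step16

print(index, step, step16, restored)
```

The restored weight differs from the latent by at most half a quantization step plus a small term caused by rounding log_step to float16. An initial value such as -4.0 is represented exactly in half precision.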

With these, we can define CompressedDense:

class CompressedDense(CustomDense):

  def build(self, input_shape, other=None):
    assert isinstance(other, CompressibleDense)
    self.input_channels = other.kernel.shape[0]
    self.kernel_compressed, self.kernel_log_step = compress_latent(
        other.kernel_latent, other.kernel_log_step, "kernel")
    self.bias_compressed, self.bias_log_step = compress_latent(
        other.bias_latent, other.bias_log_step, "bias")
    self.built = True

  @property
  def kernel(self):
    kernel_shape = (self.input_channels, self.filters)
    return decompress_latent(
        self.kernel_compressed, kernel_shape, self.kernel_log_step)

  @property
  def bias(self):
    bias_shape = (self.filters,)
    return decompress_latent(
        self.bias_compressed, bias_shape, self.bias_log_step)

The convolutional layer class is analogous to the above.

class CompressedConv2D(CustomConv2D):

  def build(self, input_shape, other=None):
    assert isinstance(other, CompressibleConv2D)
    self.input_channels = other.kernel.shape[2]
    self.kernel_compressed, self.kernel_log_step = compress_latent(
        other.kernel_latent, other.kernel_log_step, "kernel")
    self.bias_compressed, self.bias_log_step = compress_latent(
        other.bias_latent, other.bias_log_step, "bias")
    self.built = True

  @property
  def kernel(self):
    rdft_shape = (self.input_channels, self.filters,
                  self.kernel_size, self.kernel_size // 2 + 1, 2)
    kernel_rdft = decompress_latent(
        self.kernel_compressed, rdft_shape, self.kernel_log_step)
    return from_rdft(kernel_rdft, self.kernel_size)

  @property
  def bias(self):
    bias_shape = (self.filters,)
    return decompress_latent(
        self.bias_compressed, bias_shape, self.bias_log_step)

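To turn the compressible model into a compressed one, we can conveniently use the clone_model function. compress_layer converts any compressible layer into a compressed one, and simply passes through any other types of layers (such as Flatten, etc.).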

def compress_layer(layer):
  if isinstance(layer, CompressibleDense):
    return CompressedDense.copy(layer)
  if isinstance(layer, CompressibleConv2D):
    return CompressedConv2D.copy(layer)
  return type(layer).from_config(layer.get_config())

compressed_classifier = tf.keras.models.clone_model(
    compressible_classifier, clone_function=compress_layer)

Now, let's validate that the compressed model still performs as expected:

compressed_classifier.compile(metrics=[tf.keras.metrics.SparseCategoricalAccuracy()])

_, compressed_accuracy = compressed_classifier.evaluate(validation_dataset.batch(128))

print(f"Accuracy of the compressible classifier: {penalized_accuracy:0.4f}")

print(f"Accuracy of the compressed classifier: {compressed_accuracy:0.4f}")

79/79 [==============================] - 1s 10ms/step - loss: 0.0000e+00 - sparse_categorical_accuracy: 0.9836
Accuracy of the compressible classifier: 0.9836
Accuracy of the compressed classifier: 0.9836

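The classification accuracy of the compressed model is identical to the one achieved during training!

In addition, the size of the compressed model weights is much smaller than the original model size: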

def get_weight_size_in_bytes(weight):
  if weight.dtype == tf.string:
    return tf.reduce_sum(tf.strings.length(weight, unit="BYTE"))
  else:
    return tf.size(weight) * weight.dtype.size

original_size = sum(map(get_weight_size_in_bytes, classifier.weights))
compressed_size = sum(map(get_weight_size_in_bytes, compressed_classifier.weights))

print(f"Size of original model weights: {original_size} bytes")
print(f"Size of compressed model weights: {compressed_size} bytes")
print(f"Compression ratio: {(original_size/compressed_size):0.0f}x")

Size of original model weights: 5024320 bytes
Size of compressed model weights: 19458 bytes
Compression ratio: 258x
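These numbers can be turned into an average cost per parameter. The following arithmetic sketch uses the two sizes printed above and assumes that every original weight is stored as float32 (4 bytes).

```python
original_bytes = 5024320   # size of original model weights, from the output above
compressed_bytes = 19458   # size of compressed model weights, from the output above

num_params = original_bytes // 4  # assumes 4 bytes (float32) per parameter
bits_per_param = compressed_bytes * 8 / num_params
ratio = original_bytes / compressed_bytes

print(f"{num_params} parameters")
print(f"{bits_per_param:.3f} bits per parameter (versus 32 uncompressed)")
print(f"compression ratio {ratio:.1f}x")
```

On average the compressed model spends well under one bit per parameter, which is only possible because the code assigns very short codewords to the many quantized values that are zero or close to it.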

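Storing the models on disk requires some overhead for storing the model architecture, function graphs, etc.

Lossless compression methods such as ZIP are good at compressing this type of data, but not the weights themselves. That is why EPR still offers a significant benefit when the model size is counted inclusive of that overhead, after also applying ZIP compression: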

import os
import shutil

def get_disk_size(model, path):
  model.save(path)
  zip_path = shutil.make_archive(path, "zip", path)
  return os.path.getsize(zip_path)

original_zip_size = get_disk_size(classifier, "/tmp/classifier")
compressed_zip_size = get_disk_size(
    compressed_classifier, "/tmp/compressed_classifier")

print(f"Original on-disk size (ZIP compressed): {original_zip_size} bytes")
print(f"Compressed on-disk size (ZIP compressed): {compressed_zip_size} bytes")
print(f"Compression ratio: {(original_zip_size/compressed_zip_size):0.0f}x")

INFO:tensorflow:Assets written to: /tmp/classifier/assets
INFO:tensorflow:Assets written to: /tmp/compressed_classifier/assets
Original on-disk size (ZIP compressed): 13906612 bytes
Compressed on-disk size (ZIP compressed): 61588 bytes
Compression ratio: 226x

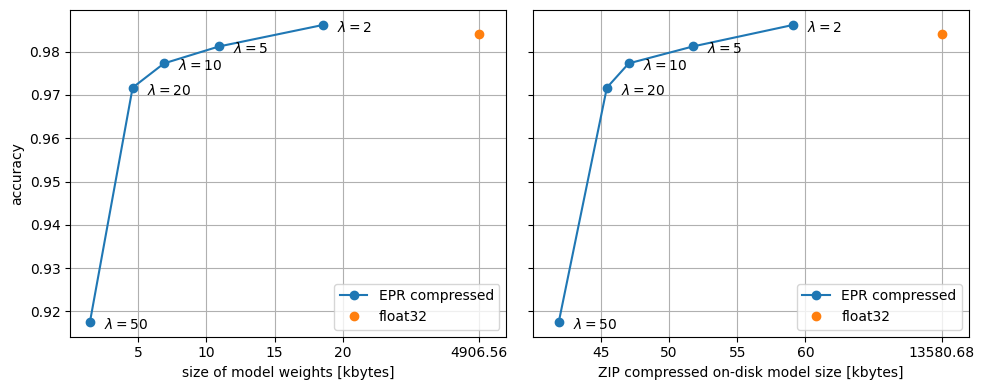

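Regularization effect and size-accuracy trade-off

Above, the \(\lambda\) hyperparameter was set to 2 (normalized by the number of parameters in the model). As we increase \(\lambda\), the model weights are more and more heavily penalized for compressibility.

For low values, the penalty can act like a weight regularizer. It can have a beneficial effect on the generalization performance of the classifier, and may lead to a slightly higher accuracy on the validation dataset: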

Accuracy of the vanilla classifier: 0.9840
Accuracy of the penalized classifier: 0.9836

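For higher values, we see a smaller and smaller model size, but also a gradually diminishing accuracy. To see this, let's train a few models and plot their size versus their accuracy: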

def compress_and_evaluate_model(lmbda):
  print(f"lambda={lmbda:0.0f}: training...", flush=True)
  regularizer = PowerLawRegularizer(lmbda=lmbda/classifier.count_params())
  compressible_classifier = make_mnist_classifier(regularizer)
  train_model(
      compressible_classifier, training_dataset, validation_dataset, verbose=0)

  print("compressing...", flush=True)
  compressed_classifier = tf.keras.models.clone_model(
      compressible_classifier, clone_function=compress_layer)
  compressed_size = sum(map(
      get_weight_size_in_bytes, compressed_classifier.weights))
  compressed_zip_size = float(get_disk_size(
      compressed_classifier, "/tmp/compressed_classifier"))

  print("evaluating...", flush=True)
  compressed_classifier = tf.keras.models.load_model(
      "/tmp/compressed_classifier")
  compressed_classifier.compile(
      metrics=[tf.keras.metrics.SparseCategoricalAccuracy()])
  _, compressed_accuracy = compressed_classifier.evaluate(
      validation_dataset.batch(128), verbose=0)
  print()
  return compressed_size, compressed_zip_size, compressed_accuracy

lambdas = (2., 5., 10., 20., 50.)
metrics = [compress_and_evaluate_model(l) for l in lambdas]
metrics = tf.convert_to_tensor(metrics, tf.float32)


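The plot should ideally show an elbow-shaped size-accuracy trade-off, but it is normal for accuracy metrics to be somewhat noisy. Depending on initialization, the curve can exhibit some kinks.

Due to the regularization effect, an EPR compressed model can be more accurate on the test set than the original model for small values of \(\lambda\); in the single run shown above (\(\lambda = 2\)), the two accuracies are nearly identical (0.9836 versus 0.9840). The EPR compressed model is also many times smaller, even if we compare the sizes after additional ZIP compression.

Decompress the classifier

CompressedDense and CompressedConv2D decompress their weights on every forward pass. This makes them ideal for memory-limited devices, but the decompression can be computationally expensive, especially for small batch sizes.

To decompress the model once, and use it for further training or inference, we can convert it back into a model using regular or compressible layers. This can be useful in model deployment or federated learning scenarios.

First, by converting back into a plain model, we can do inference and/or continue regular training without a compression penalty: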

def decompress_layer(layer):
  if isinstance(layer, CompressedDense):
    return CustomDense.copy(layer)
  if isinstance(layer, CompressedConv2D):
    return CustomConv2D.copy(layer)
  return type(layer).from_config(layer.get_config())

decompressed_classifier = tf.keras.models.clone_model(
    compressed_classifier, clone_function=decompress_layer)

decompressed_accuracy = train_model(
    decompressed_classifier, training_dataset, validation_dataset, epochs=1)

print(f"Accuracy of the compressed classifier: {compressed_accuracy:0.4f}")
print(f"Accuracy of the decompressed classifier after one more epoch of training: {decompressed_accuracy:0.4f}")

469/469 [==============================] - 50s 106ms/step - loss: 0.0851 - sparse_categorical_accuracy: 0.9749 - val_loss: 0.0695 - val_sparse_categorical_accuracy: 0.9759
Accuracy of the compressed classifier: 0.9836
Accuracy of the decompressed classifier after one more epoch of training: 0.9759

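Note that the validation accuracy drops after training for an additional epoch, since the training is done without regularization.

Alternatively, we can convert the model back into a "compressible" one, for inference and/or further training with a compression penalty: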

def decompress_layer_with_penalty(layer):
  if isinstance(layer, CompressedDense):
    return CompressibleDense.copy(layer, regularizer=regularizer)
  if isinstance(layer, CompressedConv2D):
    return CompressibleConv2D.copy(layer, regularizer=regularizer)
  return type(layer).from_config(layer.get_config())

decompressed_classifier = tf.keras.models.clone_model(
    compressed_classifier, clone_function=decompress_layer_with_penalty)

decompressed_accuracy = train_model(
    decompressed_classifier, training_dataset, validation_dataset, epochs=1)

print(f"Accuracy of the compressed classifier: {compressed_accuracy:0.4f}")
print(f"Accuracy of the decompressed classifier after one more epoch of training: {decompressed_accuracy:0.4f}")

469/469 [==============================] - 55s 113ms/step - loss: 0.8058 - sparse_categorical_accuracy: 0.9901 - val_loss: 0.8403 - val_sparse_categorical_accuracy: 0.9866
Accuracy of the compressed classifier: 0.9836
Accuracy of the decompressed classifier after one more epoch of training: 0.9866

Here, the accuracy improves after training for an additional epoch.