在 TensorFlow.org 上檢視 在 TensorFlow.org 上檢視

|

在 Google Colab 中執行 在 Google Colab 中執行

|

在 GitHub 上檢視原始碼 在 GitHub 上檢視原始碼

|

下載筆記本 下載筆記本

|

這是 Li 等人於 2020 年 3 月 16 日發表的同名論文的 TensorFlow Probability 移植版本。我們忠實地在 TensorFlow Probability 平台上重現了原始作者的方法和結果,展示了 TFP 在現代流行病學建模環境中的部分功能。移植到 TensorFlow 使我們相較於原始 Matlab 程式碼獲得約 10 倍的速度提升,而且由於 TensorFlow Probability 普遍支援向量化批次運算,因此也能良好地擴展到數百個獨立的複製。

原始論文

Ruiyun Li、Sen Pei、Bin Chen、Yimeng Song、Tao Zhang、Wan Yang 和 Jeffrey Shaman。《大量未記錄感染促使新型冠狀病毒 (SARS-CoV2) 快速傳播》。(2020),doi:https://doi.org/10.1126/science.abb3221。

摘要: 「估計未記錄的新型冠狀病毒 (SARS-CoV2) 感染的盛行率和傳染性,對於瞭解此疾病的總體盛行率和流行潛力至關重要。在此,我們使用中國境內通報的感染觀察結果,結合人口流動資料、網路動態都會人口模型和貝氏推論,來推斷與 SARS-CoV2 相關的關鍵流行病學特徵,包括未記錄感染的比例及其傳染性。我們估計在 2020 年 1 月 23 日的旅行限制之前,所有感染中有 86% 為未記錄感染 (95% CI:[82%–90%])。就個人而言,未記錄感染的傳播率為已記錄感染的 55% ([46%–62%]),但由於其數量較多,未記錄感染是 79% 已記錄病例的感染源。這些發現解釋了 SARS-CoV2 的快速地理傳播,並表明控制這種病毒將特別具有挑戰性。」

Github 連結,連結至程式碼和資料。

總覽

該模型是區室疾病模型,區室分為「易感人群」、「暴露人群」(已感染但尚未具傳染力)、「從未記錄的傳染人群」和「最終記錄的傳染人群」。其中有兩個值得注意的特點:針對 375 個中國城市中的每個城市設立獨立的區室,並假設人口如何在城市之間流動;以及感染通報延遲,因此在第 \(t\) 天成為「最終記錄的傳染人群」的病例,在隨後的隨機某天才會出現在觀察到的病例數中。

該模型假設,從未記錄的病例由於症狀較輕而最終未被記錄,因此傳染給他人的比率較低。原始論文中主要關注的參數是未記錄病例的比例,以估計現有感染的程度,以及未記錄傳播對疾病傳播的影響。

這個 colab 以由下而上的樣式建構為程式碼逐步解說。依序我們將

- 擷取並簡要檢查資料、

- 定義模型的狀態空間和動態、

- 建構一組函數,用於依照 Li 等人的方法在模型中進行推論,以及

- 調用它們並檢查結果。劇透:結果與論文中的相同。

安裝與 Python 匯入

pip3 install -q tf-nightly tfp-nightly

import collections

import io

import requests

import time

import zipfile

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import tensorflow.compat.v2 as tf

import tensorflow_probability as tfp

from tensorflow_probability.python.internal import samplers

tfd = tfp.distributions

tfes = tfp.experimental.sequential

資料匯入

讓我們從 github 匯入資料並檢查部分資料。

r = requests.get('https://raw.githubusercontent.com/SenPei-CU/COVID-19/master/Data.zip')

z = zipfile.ZipFile(io.BytesIO(r.content))

z.extractall('/tmp/')

raw_incidence = pd.read_csv('/tmp/data/Incidence.csv')

raw_mobility = pd.read_csv('/tmp/data/Mobility.csv')

raw_population = pd.read_csv('/tmp/data/pop.csv')

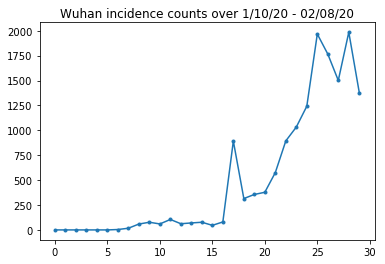

以下我們可以看到每日原始發生率計數。我們最感興趣的是前 14 天 (1 月 10 日至 1 月 23 日),因為旅行限制是在 23 日實施的。該論文透過分別對 1 月 10 日至 23 日和 1 月 23 日以後進行建模來處理此問題,使用不同的參數;我們將僅將我們的重現限制在較早的期間。

raw_incidence.drop('Date', axis=1) # The 'Date' column is all 1/18/21

# Luckily the days are in order, starting on January 10th, 2020.

讓我們對武漢的發生率計數進行健全性檢查。

plt.plot(raw_incidence.Wuhan, '.-')

plt.title('Wuhan incidence counts over 1/10/20 - 02/08/20')

plt.show()

到目前為止還不錯。現在是初始人口數。

raw_population

我們也檢查並記錄哪個條目是武漢。

raw_population['City'][169]

'Wuhan'

WUHAN_IDX = 169

在這裡我們看到了不同城市之間的人口流動矩陣。這是前 14 天不同城市之間人口流動數量的代理。它源自騰訊提供的 2018 年農曆新年期間的 GPS 記錄。Li 等人將 2020 年期間的人口流動建模為某個未知 (受推論約束) 常數因子 \(\theta\) 乘以這個矩陣。

raw_mobility

最後,讓我們將所有這些預先處理成我們可以使用的 numpy 陣列。

# The given populations are only "initial" because of intercity mobility during

# the holiday season.

initial_population = raw_population['Population'].to_numpy().astype(np.float32)

將人口流動資料轉換為 [L, L, T] 形狀的張量,其中 L 是位置數量,T 是時間步數。

daily_mobility_matrices = []

for i in range(1, 15):

day_mobility = raw_mobility[raw_mobility['Day'] == i]

# Make a matrix of daily mobilities.

z = pd.crosstab(

day_mobility.Origin,

day_mobility.Destination,

values=day_mobility['Mobility Index'], aggfunc='sum', dropna=False)

# Include every city, even if there are no rows for some in the raw data on

# some day. This uses the sort order of `raw_population`.

z = z.reindex(index=raw_population['City'], columns=raw_population['City'],

fill_value=0)

# Finally, fill any missing entries with 0. This means no mobility.

z = z.fillna(0)

daily_mobility_matrices.append(z.to_numpy())

mobility_matrix_over_time = np.stack(daily_mobility_matrices, axis=-1).astype(

np.float32)

最後取得觀察到的感染數,並製作 [L, T] 表格。

# Remove the date parameter and take the first 14 days.

observed_daily_infectious_count = raw_incidence.to_numpy()[:14, 1:]

observed_daily_infectious_count = np.transpose(

observed_daily_infectious_count).astype(np.float32)

並再次檢查我們是否以我們想要的方式取得形狀。提醒一下,我們正在處理 375 個城市和 14 天。

print('Mobility Matrix over time should have shape (375, 375, 14): {}'.format(

mobility_matrix_over_time.shape))

print('Observed Infectious should have shape (375, 14): {}'.format(

observed_daily_infectious_count.shape))

print('Initial population should have shape (375): {}'.format(

initial_population.shape))

Mobility Matrix over time should have shape (375, 375, 14): (375, 375, 14) Observed Infectious should have shape (375, 14): (375, 14) Initial population should have shape (375): (375,)

定義狀態和參數

讓我們開始定義我們的模型。我們正在重現的模型是 SEIR 模型 的變體。在這種情況下,我們有以下隨時間變化的狀態

- \(S\): 每個城市中對疾病易感的人數。

- \(E\): 每個城市中暴露於該疾病但尚未具傳染力的人數。在生物學上,這對應於感染該疾病,因為所有暴露人群最終都會變得具傳染力。

- \(I^u\): 每個城市中具有傳染力但未記錄的人數。在模型中,這實際上意味著「永遠不會被記錄」。

- \(I^r\): 每個城市中具有傳染力且已記錄的人數。Li 等人對通報延遲進行建模,因此 \(I^r\) 實際上對應於類似「病例嚴重到足以在未來某個時間點被記錄」的情況。

正如我們將在下面看到的,我們將透過在時間上向前執行集成調整卡爾曼濾波器 (EAKF) 來推斷這些狀態。EAKF 的狀態向量是每個城市的這些量的索引向量。

該模型具有以下可推斷的整體、時不變參數

- \(\beta\): 由已記錄的傳染性個體引起的傳播率。

- \(\mu\): 由未記錄的傳染性個體引起的相對傳播率。這將透過乘積 \(\mu \beta\) 發揮作用。

- \(\theta\): 城市間人口流動因子。這是一個大於 1 的因子,用於校正人口流動資料的低報 (以及 2018 年至 2020 年的人口成長)。

- \(Z\): 平均潛伏期 (即「暴露」狀態下的時間)。

- \(\alpha\): 這是嚴重到足以 (最終) 被記錄的感染比例。

- \(D\): 感染的平均持續時間 (即在任一「傳染性」狀態下的時間)。

我們將使用圍繞 EAKF 狀態的迭代濾波迴圈來推斷這些參數的點估計值。

該模型也取決於未推斷的常數

- \(M\): 城市間人口流動矩陣。這是隨時間變化的,並假定為給定值。回想一下,它會按推斷參數 \(\theta\) 縮放,以給出城市之間實際的人口流動。

- \(N\): 每個城市的總人口數。初始人口數視為給定值,人口隨時間的變化是根據人口流動數量 \(\theta M\) 計算得出的。

首先,我們為自己提供一些資料結構來保存我們的狀態和參數。

SEIRComponents = collections.namedtuple(

typename='SEIRComponents',

field_names=[

'susceptible', # S

'exposed', # E

'documented_infectious', # I^r

'undocumented_infectious', # I^u

# This is the count of new cases in the "documented infectious" compartment.

# We need this because we will introduce a reporting delay, between a person

# entering I^r and showing up in the observable case count data.

# This can't be computed from the cumulative `documented_infectious` count,

# because some portion of that population will move to the 'recovered'

# state, which we aren't tracking explicitly.

'daily_new_documented_infectious'])

ModelParams = collections.namedtuple(

typename='ModelParams',

field_names=[

'documented_infectious_tx_rate', # Beta

'undocumented_infectious_tx_relative_rate', # Mu

'intercity_underreporting_factor', # Theta

'average_latency_period', # Z

'fraction_of_documented_infections', # Alpha

'average_infection_duration' # D

]

)

我們也編碼了 Li 等人對參數值的界限。

PARAMETER_LOWER_BOUNDS = ModelParams(

documented_infectious_tx_rate=0.8,

undocumented_infectious_tx_relative_rate=0.2,

intercity_underreporting_factor=1.,

average_latency_period=2.,

fraction_of_documented_infections=0.02,

average_infection_duration=2.

)

PARAMETER_UPPER_BOUNDS = ModelParams(

documented_infectious_tx_rate=1.5,

undocumented_infectious_tx_relative_rate=1.,

intercity_underreporting_factor=1.75,

average_latency_period=5.,

fraction_of_documented_infections=1.,

average_infection_duration=5.

)

SEIR 動態

在這裡我們定義參數和狀態之間的關係。

Li 等人的時間動態方程式 (補充材料,方程式 1-5) 如下

\(\frac{dS_i}{dt} = -\beta \frac{S_i I_i^r}{N_i} - \mu \beta \frac{S_i I_i^u}{N_i} + \theta \sum_k \frac{M_{ij} S_j}{N_j - I_j^r} - + \theta \sum_k \frac{M_{ji} S_j}{N_i - I_i^r}\)

\(\frac{dE_i}{dt} = \beta \frac{S_i I_i^r}{N_i} + \mu \beta \frac{S_i I_i^u}{N_i} -\frac{E_i}{Z} + \theta \sum_k \frac{M_{ij} E_j}{N_j - I_j^r} - + \theta \sum_k \frac{M_{ji} E_j}{N_i - I_i^r}\)

\(\frac{dI^r_i}{dt} = \alpha \frac{E_i}{Z} - \frac{I_i^r}{D}\)

\(\frac{dI^u_i}{dt} = (1 - \alpha) \frac{E_i}{Z} - \frac{I_i^u}{D} + \theta \sum_k \frac{M_{ij} I_j^u}{N_j - I_j^r} - + \theta \sum_k \frac{M_{ji} I^u_j}{N_i - I_i^r}\)

\(N_i = N_i + \theta \sum_j M_{ij} - \theta \sum_j M_{ji}\)

提醒一下,\(i\) 和 \(j\) 下標索引城市。這些方程式透過以下方式對疾病的時間演變進行建模

- 與傳染性個體接觸導致更多感染;

- 疾病從「暴露」進展到「傳染性」狀態之一;

- 疾病從「傳染性」狀態進展到康復,我們透過從建模人口中移除來對其進行建模;

- 城市間人口流動,包括暴露或未記錄的傳染性人群;以及

- 每日城市人口數透過城市間人口流動隨時間變化。

依照 Li 等人的方法,我們假設病例嚴重到足以最終被通報的人員不會在城市之間旅行。

同樣依照 Li 等人的方法,我們將這些動態視為受到逐項 Poisson 雜訊的影響,即每一項實際上都是 Poisson 的比率,從中採樣得出真實的變化。Poisson 雜訊是逐項的,因為減去 (而不是加上) Poisson 樣本不會產生 Poisson 分佈的結果。

我們將使用經典的四階龍格-庫塔積分器在時間上向前演化這些動態,但首先讓我們定義計算它們的函數 (包括採樣 Poisson 雜訊)。

def sample_state_deltas(

state, population, mobility_matrix, params, seed, is_deterministic=False):

"""Computes one-step change in state, including Poisson sampling.

Note that this is coded to support vectorized evaluation on arbitrary-shape

batches of states. This is useful, for example, for running multiple

independent replicas of this model to compute credible intervals for the

parameters. We refer to the arbitrary batch shape with the conventional

`B` in the parameter documentation below. This function also, of course,

supports broadcasting over the batch shape.

Args:

state: A `SEIRComponents` tuple with fields Tensors of shape

B + [num_locations] giving the current disease state.

population: A Tensor of shape B + [num_locations] giving the current city

populations.

mobility_matrix: A Tensor of shape B + [num_locations, num_locations] giving

the current baseline inter-city mobility.

params: A `ModelParams` tuple with fields Tensors of shape B giving the

global parameters for the current EAKF run.

seed: Initial entropy for pseudo-random number generation. The Poisson

sampling is repeatable by supplying the same seed.

is_deterministic: A `bool` flag to turn off Poisson sampling if desired.

Returns:

delta: A `SEIRComponents` tuple with fields Tensors of shape

B + [num_locations] giving the one-day changes in the state, according

to equations 1-4 above (including Poisson noise per Li et al).

"""

undocumented_infectious_fraction = state.undocumented_infectious / population

documented_infectious_fraction = state.documented_infectious / population

# Anyone not documented as infectious is considered mobile

mobile_population = (population - state.documented_infectious)

def compute_outflow(compartment_population):

raw_mobility = tf.linalg.matvec(

mobility_matrix, compartment_population / mobile_population)

return params.intercity_underreporting_factor * raw_mobility

def compute_inflow(compartment_population):

raw_mobility = tf.linalg.matmul(

mobility_matrix,

(compartment_population / mobile_population)[..., tf.newaxis],

transpose_a=True)

return params.intercity_underreporting_factor * tf.squeeze(

raw_mobility, axis=-1)

# Helper for sampling the Poisson-variate terms.

seeds = samplers.split_seed(seed, n=11)

if is_deterministic:

def sample_poisson(rate):

return rate

else:

def sample_poisson(rate):

return tfd.Poisson(rate=rate).sample(seed=seeds.pop())

# Below are the various terms called U1-U12 in the paper. We combined the

# first two, which should be fine; both are poisson so their sum is too, and

# there's no risk (as there could be in other terms) of going negative.

susceptible_becoming_exposed = sample_poisson(

state.susceptible *

(params.documented_infectious_tx_rate *

documented_infectious_fraction +

(params.undocumented_infectious_tx_relative_rate *

params.documented_infectious_tx_rate) *

undocumented_infectious_fraction)) # U1 + U2

susceptible_population_inflow = sample_poisson(

compute_inflow(state.susceptible)) # U3

susceptible_population_outflow = sample_poisson(

compute_outflow(state.susceptible)) # U4

exposed_becoming_documented_infectious = sample_poisson(

params.fraction_of_documented_infections *

state.exposed / params.average_latency_period) # U5

exposed_becoming_undocumented_infectious = sample_poisson(

(1 - params.fraction_of_documented_infections) *

state.exposed / params.average_latency_period) # U6

exposed_population_inflow = sample_poisson(

compute_inflow(state.exposed)) # U7

exposed_population_outflow = sample_poisson(

compute_outflow(state.exposed)) # U8

documented_infectious_becoming_recovered = sample_poisson(

state.documented_infectious /

params.average_infection_duration) # U9

undocumented_infectious_becoming_recovered = sample_poisson(

state.undocumented_infectious /

params.average_infection_duration) # U10

undocumented_infectious_population_inflow = sample_poisson(

compute_inflow(state.undocumented_infectious)) # U11

undocumented_infectious_population_outflow = sample_poisson(

compute_outflow(state.undocumented_infectious)) # U12

# The final state_deltas

return SEIRComponents(

# Equation [1]

susceptible=(-susceptible_becoming_exposed +

susceptible_population_inflow +

-susceptible_population_outflow),

# Equation [2]

exposed=(susceptible_becoming_exposed +

-exposed_becoming_documented_infectious +

-exposed_becoming_undocumented_infectious +

exposed_population_inflow +

-exposed_population_outflow),

# Equation [3]

documented_infectious=(

exposed_becoming_documented_infectious +

-documented_infectious_becoming_recovered),

# Equation [4]

undocumented_infectious=(

exposed_becoming_undocumented_infectious +

-undocumented_infectious_becoming_recovered +

undocumented_infectious_population_inflow +

-undocumented_infectious_population_outflow),

# New to-be-documented infectious cases, subject to the delayed

# observation model.

daily_new_documented_infectious=exposed_becoming_documented_infectious)

這是積分器。除了將 PRNG 種子傳遞到 sample_state_deltas 函數以在龍格-庫塔方法要求的每個部分步驟中獲得獨立的 Poisson 雜訊外,這完全是標準的。

@tf.function(autograph=False)

def rk4_one_step(state, population, mobility_matrix, params, seed):

"""Implement one step of RK4, wrapped around a call to sample_state_deltas."""

# One seed for each RK sub-step

seeds = samplers.split_seed(seed, n=4)

deltas = tf.nest.map_structure(tf.zeros_like, state)

combined_deltas = tf.nest.map_structure(tf.zeros_like, state)

for a, b in zip([1., 2, 2, 1.], [6., 3., 3., 6.]):

next_input = tf.nest.map_structure(

lambda x, delta, a=a: x + delta / a, state, deltas)

deltas = sample_state_deltas(

next_input,

population,

mobility_matrix,

params,

seed=seeds.pop(), is_deterministic=False)

combined_deltas = tf.nest.map_structure(

lambda x, delta, b=b: x + delta / b, combined_deltas, deltas)

return tf.nest.map_structure(

lambda s, delta: s + tf.round(delta),

state, combined_deltas)

初始化

在這裡我們實作論文中的初始化方案。

依照 Li 等人的方法,我們的推論方案將是集成調整卡爾曼濾波器內迴圈,外圍環繞著迭代濾波外迴圈 (IF-EAKF)。在計算上,這意味著我們需要三種類型的初始化

- 內部 EAKF 的初始狀態

- 外部 IF 的初始參數,這也是第一個 EAKF 的初始參數

- 從一個 IF 迭代更新到下一個 IF 迭代的參數,這些參數充當每個 EAKF (第一個 EAKF 除外) 的初始參數。

def initialize_state(num_particles, num_batches, seed):

"""Initialize the state for a batch of EAKF runs.

Args:

num_particles: `int` giving the number of particles for the EAKF.

num_batches: `int` giving the number of independent EAKF runs to

initialize in a vectorized batch.

seed: PRNG entropy.

Returns:

state: A `SEIRComponents` tuple with Tensors of shape [num_particles,

num_batches, num_cities] giving the initial conditions in each

city, in each filter particle, in each batch member.

"""

num_cities = mobility_matrix_over_time.shape[-2]

state_shape = [num_particles, num_batches, num_cities]

susceptible = initial_population * np.ones(state_shape, dtype=np.float32)

documented_infectious = np.zeros(state_shape, dtype=np.float32)

daily_new_documented_infectious = np.zeros(state_shape, dtype=np.float32)

# Following Li et al, initialize Wuhan with up to 2000 people exposed

# and another up to 2000 undocumented infectious.

rng = np.random.RandomState(seed[0] % (2**31 - 1))

wuhan_exposed = rng.randint(

0, 2001, [num_particles, num_batches]).astype(np.float32)

wuhan_undocumented_infectious = rng.randint(

0, 2001, [num_particles, num_batches]).astype(np.float32)

# Also following Li et al, initialize cities adjacent to Wuhan with three

# days' worth of additional exposed and undocumented-infectious cases,

# as they may have traveled there before the beginning of the modeling

# period.

exposed = 3 * mobility_matrix_over_time[

WUHAN_IDX, :, 0] * wuhan_exposed[

..., np.newaxis] / initial_population[WUHAN_IDX]

undocumented_infectious = 3 * mobility_matrix_over_time[

WUHAN_IDX, :, 0] * wuhan_undocumented_infectious[

..., np.newaxis] / initial_population[WUHAN_IDX]

exposed[..., WUHAN_IDX] = wuhan_exposed

undocumented_infectious[..., WUHAN_IDX] = wuhan_undocumented_infectious

# Following Li et al, we do not remove the initial exposed and infectious

# persons from the susceptible population.

return SEIRComponents(

susceptible=tf.constant(susceptible),

exposed=tf.constant(exposed),

documented_infectious=tf.constant(documented_infectious),

undocumented_infectious=tf.constant(undocumented_infectious),

daily_new_documented_infectious=tf.constant(daily_new_documented_infectious))

def initialize_params(num_particles, num_batches, seed):

"""Initialize the global parameters for the entire inference run.

Args:

num_particles: `int` giving the number of particles for the EAKF.

num_batches: `int` giving the number of independent EAKF runs to

initialize in a vectorized batch.

seed: PRNG entropy.

Returns:

params: A `ModelParams` tuple with fields Tensors of shape

[num_particles, num_batches] giving the global parameters

to use for the first batch of EAKF runs.

"""

# We have 6 parameters. We'll initialize with a Sobol sequence,

# covering the hyper-rectangle defined by our parameter limits.

halton_sequence = tfp.mcmc.sample_halton_sequence(

dim=6, num_results=num_particles * num_batches, seed=seed)

halton_sequence = tf.reshape(

halton_sequence, [num_particles, num_batches, 6])

halton_sequences = tf.nest.pack_sequence_as(

PARAMETER_LOWER_BOUNDS, tf.split(

halton_sequence, num_or_size_splits=6, axis=-1))

def interpolate(minval, maxval, h):

return (maxval - minval) * h + minval

return tf.nest.map_structure(

interpolate,

PARAMETER_LOWER_BOUNDS, PARAMETER_UPPER_BOUNDS, halton_sequences)

def update_params(num_particles, num_batches,

prev_params, parameter_variance, seed):

"""Update the global parameters between EAKF runs.

Args:

num_particles: `int` giving the number of particles for the EAKF.

num_batches: `int` giving the number of independent EAKF runs to

initialize in a vectorized batch.

prev_params: A `ModelParams` tuple of the parameters used for the previous

EAKF run.

parameter_variance: A `ModelParams` tuple specifying how much to drift

each parameter.

seed: PRNG entropy.

Returns:

params: A `ModelParams` tuple with fields Tensors of shape

[num_particles, num_batches] giving the global parameters

to use for the next batch of EAKF runs.

"""

# Initialize near the previous set of parameters. This is the first step

# in Iterated Filtering.

seeds = tf.nest.pack_sequence_as(

prev_params, samplers.split_seed(seed, n=len(prev_params)))

return tf.nest.map_structure(

lambda x, v, seed: x + tf.math.sqrt(v) * tf.random.stateless_normal([

num_particles, num_batches, 1], seed=seed),

prev_params, parameter_variance, seeds)

延遲

此模型的重要特徵之一是明確考慮到感染的通報時間晚於開始時間。也就是說,我們預期從 \(E\) 區室移動到 \(I^r\) 區室的人員在第 \(t\) 天可能不會在稍後的某天才出現在可觀察的通報病例數中。

我們假設延遲呈伽瑪分佈。依照 Li 等人的方法,我們使用 1.85 作為形狀,並將比率參數化以產生 9 天的平均通報延遲。

def raw_reporting_delay_distribution(gamma_shape=1.85, reporting_delay=9.):

return tfp.distributions.Gamma(

concentration=gamma_shape, rate=gamma_shape / reporting_delay)

我們的觀察是離散的,因此我們將原始 (連續) 延遲四捨五入到最接近的天數。我們也有有限的資料時程,因此單個人的延遲分佈是剩餘天數的分類。因此,我們可以透過預先計算多項式延遲機率,更有效率地計算每個城市的預測觀察結果,而不是採樣 \(O(I^r)\) 伽瑪。

def reporting_delay_probs(num_timesteps, gamma_shape=1.85, reporting_delay=9.):

gamma_dist = raw_reporting_delay_distribution(gamma_shape, reporting_delay)

multinomial_probs = [gamma_dist.cdf(1.)]

for k in range(2, num_timesteps + 1):

multinomial_probs.append(gamma_dist.cdf(k) - gamma_dist.cdf(k - 1))

# For samples that are larger than T.

multinomial_probs.append(gamma_dist.survival_function(num_timesteps))

multinomial_probs = tf.stack(multinomial_probs)

return multinomial_probs

以下是將這些延遲實際應用於每日新增記錄的傳染性計數的程式碼

def delay_reporting(

daily_new_documented_infectious, num_timesteps, t, multinomial_probs, seed):

# This is the distribution of observed infectious counts from the current

# timestep.

raw_delays = tfd.Multinomial(

total_count=daily_new_documented_infectious,

probs=multinomial_probs).sample(seed=seed)

# The last bucket is used for samples that are out of range of T + 1. Thus

# they are not going to be observable in this model.

clipped_delays = raw_delays[..., :-1]

# We can also remove counts that are such that t + i >= T.

clipped_delays = clipped_delays[..., :num_timesteps - t]

# We finally shift everything by t. That means prepending with zeros.

return tf.concat([

tf.zeros(

tf.concat([

tf.shape(clipped_delays)[:-1], [t]], axis=0),

dtype=clipped_delays.dtype),

clipped_delays], axis=-1)

推論

首先,我們將定義一些用於推論的資料結構。

特別是,我們將想要進行迭代濾波,它將狀態和參數包裝在一起,同時進行推論。因此,我們將定義一個 ParameterStatePair 物件。

我們也想要將任何附帶資訊包裝到模型中。

ParameterStatePair = collections.namedtuple(

'ParameterStatePair', ['state', 'params'])

# Info that is tracked and mutated but should not have inference performed over.

SideInfo = collections.namedtuple(

'SideInfo', [

# Observations at every time step.

'observations_over_time',

'initial_population',

'mobility_matrix_over_time',

'population',

# Used for variance of measured observations.

'actual_reported_cases',

# Pre-computed buckets for the multinomial distribution.

'multinomial_probs',

'seed',

])

# Cities can not fall below this fraction of people

MINIMUM_CITY_FRACTION = 0.6

# How much to inflate the covariance by.

INFLATION_FACTOR = 1.1

INFLATE_FN = tfes.inflate_by_scaled_identity_fn(INFLATION_FACTOR)

以下是完整的觀察模型,針對集成卡爾曼濾波器進行包裝。

有趣的特點是通報延遲 (如先前計算)。上游模型在每個時間步針對每個城市發出 daily_new_documented_infectious。

# We observe the observed infections.

def observation_fn(t, state_params, extra):

"""Generate reported cases.

Args:

state_params: A `ParameterStatePair` giving the current parameters

and state.

t: Integer giving the current time.

extra: A `SideInfo` carrying auxiliary information.

Returns:

observations: A Tensor of predicted observables, namely new cases

per city at time `t`.

extra: Update `SideInfo`.

"""

# Undo padding introduced in `inference`.

daily_new_documented_infectious = state_params.state.daily_new_documented_infectious[..., 0]

# Number of people that we have already committed to become

# observed infectious over time.

# shape: batch + [num_particles, num_cities, time]

observations_over_time = extra.observations_over_time

num_timesteps = observations_over_time.shape[-1]

seed, new_seed = samplers.split_seed(extra.seed, salt='reporting delay')

daily_delayed_counts = delay_reporting(

daily_new_documented_infectious, num_timesteps, t,

extra.multinomial_probs, seed)

observations_over_time = observations_over_time + daily_delayed_counts

extra = extra._replace(

observations_over_time=observations_over_time,

seed=new_seed)

# Actual predicted new cases, re-padded.

adjusted_observations = observations_over_time[..., t][..., tf.newaxis]

# Finally observations have variance that is a function of the true observations:

return tfd.MultivariateNormalDiag(

loc=adjusted_observations,

scale_diag=tf.math.maximum(

2., extra.actual_reported_cases[..., t][..., tf.newaxis] / 2.)), extra

在這裡我們定義轉換動態。我們已經完成了語義工作;在這裡我們只是針對 EAKF 架構對其進行包裝,並且依照 Li 等人的方法,裁剪城市人口,以防止它們變得太小。

def transition_fn(t, state_params, extra):

"""SEIR dynamics.

Args:

state_params: A `ParameterStatePair` giving the current parameters

and state.

t: Integer giving the current time.

extra: A `SideInfo` carrying auxiliary information.

Returns:

state_params: A `ParameterStatePair` predicted for the next time step.

extra: Updated `SideInfo`.

"""

mobility_t = extra.mobility_matrix_over_time[..., t]

new_seed, rk4_seed = samplers.split_seed(extra.seed, salt='Transition')

new_state = rk4_one_step(

state_params.state,

extra.population,

mobility_t,

state_params.params,

seed=rk4_seed)

# Make sure population doesn't go below MINIMUM_CITY_FRACTION.

new_population = (

extra.population + state_params.params.intercity_underreporting_factor * (

# Inflow

tf.reduce_sum(mobility_t, axis=-2) -

# Outflow

tf.reduce_sum(mobility_t, axis=-1)))

new_population = tf.where(

new_population < MINIMUM_CITY_FRACTION * extra.initial_population,

extra.initial_population * MINIMUM_CITY_FRACTION,

new_population)

extra = extra._replace(population=new_population, seed=new_seed)

# The Ensemble Kalman Filter code expects the transition function to return a distribution.

# As the dynamics and noise are encapsulated above, we construct a `JointDistribution` that when

# sampled, returns the values above.

new_state = tfd.JointDistributionNamed(

model=tf.nest.map_structure(lambda x: tfd.VectorDeterministic(x), new_state))

params = tfd.JointDistributionNamed(

model=tf.nest.map_structure(lambda x: tfd.VectorDeterministic(x), state_params.params))

state_params = tfd.JointDistributionNamed(

model=ParameterStatePair(state=new_state, params=params))

return state_params, extra

最後,我們定義推論方法。這是兩個迴圈,外迴圈是迭代濾波,而內迴圈是集成調整卡爾曼濾波。

# Use tf.function to speed up EAKF prediction and updates.

ensemble_kalman_filter_predict = tf.function(

tfes.ensemble_kalman_filter_predict, autograph=False)

ensemble_adjustment_kalman_filter_update = tf.function(

tfes.ensemble_adjustment_kalman_filter_update, autograph=False)

def inference(

num_ensembles,

num_batches,

num_iterations,

actual_reported_cases,

mobility_matrix_over_time,

seed=None,

# This is how much to reduce the variance by in every iterative

# filtering step.

variance_shrinkage_factor=0.9,

# Days before infection is reported.

reporting_delay=9.,

# Shape parameter of Gamma distribution.

gamma_shape_parameter=1.85):

"""Inference for the Shaman, et al. model.

Args:

num_ensembles: Number of particles to use for EAKF.

num_batches: Number of batches of IF-EAKF to run.

num_iterations: Number of iterations to run iterative filtering.

actual_reported_cases: `Tensor` of shape `[L, T]` where `L` is the number

of cities, and `T` is the timesteps.

mobility_matrix_over_time: `Tensor` of shape `[L, L, T]` which specifies the

mobility between locations over time.

variance_shrinkage_factor: Python `float`. How much to reduce the

variance each iteration of iterated filtering.

reporting_delay: Python `float`. How many days before the infection

is reported.

gamma_shape_parameter: Python `float`. Shape parameter of Gamma distribution

of reporting delays.

Returns:

result: A `ModelParams` with fields Tensors of shape [num_batches],

containing the inferred parameters at the final iteration.

"""

print('Starting inference.')

num_timesteps = actual_reported_cases.shape[-1]

params_per_iter = []

multinomial_probs = reporting_delay_probs(

num_timesteps, gamma_shape_parameter, reporting_delay)

seed = samplers.sanitize_seed(seed, salt='Inference')

for i in range(num_iterations):

start_if_time = time.time()

seeds = samplers.split_seed(seed, n=4, salt='Initialize')

if params_per_iter:

parameter_variance = tf.nest.map_structure(

lambda minval, maxval: variance_shrinkage_factor ** (

2 * i) * (maxval - minval) ** 2 / 4.,

PARAMETER_LOWER_BOUNDS, PARAMETER_UPPER_BOUNDS)

params_t = update_params(

num_ensembles,

num_batches,

prev_params=params_per_iter[-1],

parameter_variance=parameter_variance,

seed=seeds.pop())

else:

params_t = initialize_params(num_ensembles, num_batches, seed=seeds.pop())

state_t = initialize_state(num_ensembles, num_batches, seed=seeds.pop())

population_t = sum(x for x in state_t)

observations_over_time = tf.zeros(

[num_ensembles,

num_batches,

actual_reported_cases.shape[0], num_timesteps])

extra = SideInfo(

observations_over_time=observations_over_time,

initial_population=tf.identity(population_t),

mobility_matrix_over_time=mobility_matrix_over_time,

population=population_t,

multinomial_probs=multinomial_probs,

actual_reported_cases=actual_reported_cases,

seed=seeds.pop())

# Clip states

state_t = clip_state(state_t, population_t)

params_t = clip_params(params_t, seed=seeds.pop())

# Accrue the parameter over time. We'll be averaging that

# and using that as our MLE estimate.

params_over_time = tf.nest.map_structure(

lambda x: tf.identity(x), params_t)

state_params = ParameterStatePair(state=state_t, params=params_t)

eakf_state = tfes.EnsembleKalmanFilterState(

step=tf.constant(0), particles=state_params, extra=extra)

for j in range(num_timesteps):

seeds = samplers.split_seed(eakf_state.extra.seed, n=3)

extra = extra._replace(seed=seeds.pop())

# Predict step.

# Inflate and clip.

new_particles = INFLATE_FN(eakf_state.particles)

state_t = clip_state(new_particles.state, eakf_state.extra.population)

params_t = clip_params(new_particles.params, seed=seeds.pop())

eakf_state = eakf_state._replace(

particles=ParameterStatePair(params=params_t, state=state_t))

eakf_predict_state = ensemble_kalman_filter_predict(eakf_state, transition_fn)

# Clip the state and particles.

state_params = eakf_predict_state.particles

state_t = clip_state(

state_params.state, eakf_predict_state.extra.population)

state_params = ParameterStatePair(state=state_t, params=state_params.params)

# We preprocess the state and parameters by affixing a 1 dimension. This is because for

# inference, we treat each city as independent. We could also introduce localization by

# considering cities that are adjacent.

state_params = tf.nest.map_structure(lambda x: x[..., tf.newaxis], state_params)

eakf_predict_state = eakf_predict_state._replace(particles=state_params)

# Update step.

eakf_update_state = ensemble_adjustment_kalman_filter_update(

eakf_predict_state,

actual_reported_cases[..., j][..., tf.newaxis],

observation_fn)

state_params = tf.nest.map_structure(

lambda x: x[..., 0], eakf_update_state.particles)

# Clip to ensure parameters / state are well constrained.

state_t = clip_state(

state_params.state, eakf_update_state.extra.population)

# Finally for the parameters, we should reduce over all updates. We get

# an extra dimension back so let's do that.

params_t = tf.nest.map_structure(

lambda x, y: x + tf.reduce_sum(y[..., tf.newaxis] - x, axis=-2, keepdims=True),

eakf_predict_state.particles.params, state_params.params)

params_t = clip_params(params_t, seed=seeds.pop())

params_t = tf.nest.map_structure(lambda x: x[..., 0], params_t)

state_params = ParameterStatePair(state=state_t, params=params_t)

eakf_state = eakf_update_state

eakf_state = eakf_state._replace(particles=state_params)

# Flatten and collect the inferred parameter at time step t.

params_over_time = tf.nest.map_structure(

lambda s, x: tf.concat([s, x], axis=-1), params_over_time, params_t)

est_params = tf.nest.map_structure(

# Take the average over the Ensemble and over time.

lambda x: tf.math.reduce_mean(x, axis=[0, -1])[..., tf.newaxis],

params_over_time)

params_per_iter.append(est_params)

print('Iterated Filtering {} / {} Ran in: {:.2f} seconds'.format(

i, num_iterations, time.time() - start_if_time))

return tf.nest.map_structure(

lambda x: tf.squeeze(x, axis=-1), params_per_iter[-1])

最後的細節:裁剪參數和狀態包括確保它們在範圍內且非負數。

def clip_state(state, population):

"""Clip state to sensible values."""

state = tf.nest.map_structure(

lambda x: tf.where(x < 0, 0., x), state)

# If S > population, then adjust as well.

susceptible = tf.where(state.susceptible > population, population, state.susceptible)

return SEIRComponents(

susceptible=susceptible,

exposed=state.exposed,

documented_infectious=state.documented_infectious,

undocumented_infectious=state.undocumented_infectious,

daily_new_documented_infectious=state.daily_new_documented_infectious)

def clip_params(params, seed):

"""Clip parameters to bounds."""

def _clip(p, minval, maxval):

return tf.where(

p < minval,

minval * (1. + 0.1 * tf.random.stateless_uniform(p.shape, seed=seed)),

tf.where(p > maxval,

maxval * (1. - 0.1 * tf.random.stateless_uniform(

p.shape, seed=seed)), p))

params = tf.nest.map_structure(

_clip, params, PARAMETER_LOWER_BOUNDS, PARAMETER_UPPER_BOUNDS)

return params

將所有內容整合在一起執行

# Let's sample the parameters.

#

# NOTE: Li et al. run inference 1000 times, which would take a few hours.

# Here we run inference 30 times (in a single, vectorized batch).

best_parameters = inference(

num_ensembles=300,

num_batches=30,

num_iterations=10,

actual_reported_cases=observed_daily_infectious_count,

mobility_matrix_over_time=mobility_matrix_over_time)

Starting inference. Iterated Filtering 0 / 10 Ran in: 26.65 seconds Iterated Filtering 1 / 10 Ran in: 28.69 seconds Iterated Filtering 2 / 10 Ran in: 28.06 seconds Iterated Filtering 3 / 10 Ran in: 28.48 seconds Iterated Filtering 4 / 10 Ran in: 28.57 seconds Iterated Filtering 5 / 10 Ran in: 28.35 seconds Iterated Filtering 6 / 10 Ran in: 28.35 seconds Iterated Filtering 7 / 10 Ran in: 28.19 seconds Iterated Filtering 8 / 10 Ran in: 28.58 seconds Iterated Filtering 9 / 10 Ran in: 28.23 seconds

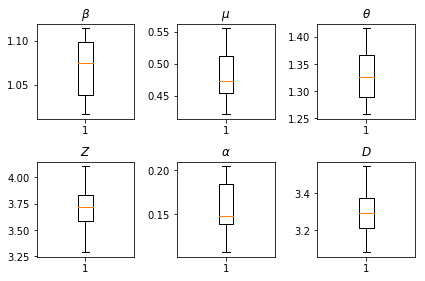

我們的推論結果。我們繪製所有整體參數的最大概似值,以顯示它們在我們的 num_batches 獨立推論執行中的變化。這對應於補充材料中的表 S1。

fig, axs = plt.subplots(2, 3)

axs[0, 0].boxplot(best_parameters.documented_infectious_tx_rate,

whis=(2.5,97.5), sym='')

axs[0, 0].set_title(r'$\beta$')

axs[0, 1].boxplot(best_parameters.undocumented_infectious_tx_relative_rate,

whis=(2.5,97.5), sym='')

axs[0, 1].set_title(r'$\mu$')

axs[0, 2].boxplot(best_parameters.intercity_underreporting_factor,

whis=(2.5,97.5), sym='')

axs[0, 2].set_title(r'$\theta$')

axs[1, 0].boxplot(best_parameters.average_latency_period,

whis=(2.5,97.5), sym='')

axs[1, 0].set_title(r'$Z$')

axs[1, 1].boxplot(best_parameters.fraction_of_documented_infections,

whis=(2.5,97.5), sym='')

axs[1, 1].set_title(r'$\alpha$')

axs[1, 2].boxplot(best_parameters.average_infection_duration,

whis=(2.5,97.5), sym='')

axs[1, 2].set_title(r'$D$')

plt.tight_layout()