This example is ported from the PyMC3 example notebook A Primer on Bayesian Methods for Multilevel Modeling.

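Dependencies & Prerequisites

Imports

# Inferred from the names used in the code below.
import collections

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import tensorflow as tf
import tensorflow_datasets as tfds
import tensorflow_probability as tfp

tfd = tfp.distributions
tfb = tfp.bijectors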

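1 Introduction

In this colab we will fit hierarchical linear models (HLMs) of various degrees of model complexity using the popular Radon dataset. We will make use of TFP primitives and its Markov Chain Monte Carlo toolset.

To better fit the data, our goal is to make use of the natural hierarchical structure present in the dataset. We begin with conventional approaches: completely pooled and unpooled models. We continue with multilevel models: exploring partial pooling models, group-level predictors and contextual effects.

For a related notebook also fitting HLMs using TFP on the Radon dataset, check out Linear Mixed-Effect Regression in {TF Probability, R, Stan}.

If you have any questions about the material here, don't hesitate to contact (or join) the TensorFlow Probability mailing list. We're happy to help.

2 Multilevel Modeling Overview

A Primer on Bayesian Methods for Multilevel Modeling

Hierarchical or multilevel modeling is a generalization of regression modeling.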

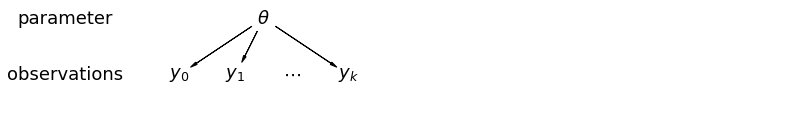

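Multilevel models are regression models in which the constituent model parameters are given probability distributions. This implies that model parameters are allowed to vary by group. Observational units are often naturally clustered. Clustering induces dependence between observations, despite random sampling of clusters and random sampling within clusters.

Hierarchical models are a particular kind of multilevel model in which parameters are nested within one another. Some multilevel structures are not hierarchical.

For example, "country" and "year" are not nested, but may represent separate, but overlapping, clusters of parameters. We will motivate this topic using an environmental epidemiology example.

Example: Radon contamination (Gelman and Hill 2006)

Radon is a radioactive gas that enters homes through contact points with the ground. It is a carcinogen that is the primary cause of lung cancer in non-smokers. Radon levels vary greatly from household to household.

The EPA did a study of radon levels in 80,000 houses. Two important predictors are:

1. Measurement in the basement or on the first floor (radon is higher in basements)
2. County uranium level (positive correlation with radon levels)

We will focus on modeling radon levels in Minnesota. The hierarchy in this example is households within each county.

3 Data Munging

In this section we obtain the radon dataset and do some minimal preprocessing.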

def load_and_preprocess_radon_dataset(state='MN'):
  """Preprocess Radon dataset as done in "Bayesian Data Analysis" book.

  We filter to Minnesota data (919 examples) and preprocess to obtain the
  following features:
  - `log_uranium_ppm`: Log of soil uranium measurements.
  - `county`: Name of county in which the measurement was taken.
  - `floor`: Floor of house (0 for basement, 1 for first floor) on which the
    measurement was taken.

  The target variable is `log_radon`, the log of the Radon measurement in the
  house.
  """
  ds = tfds.load('radon', split='train')
  radon_data = tfds.as_dataframe(ds)
  radon_data.rename(lambda s: s[9:] if s.startswith('feat') else s, axis=1, inplace=True)
  df = radon_data[radon_data.state==state.encode()].copy()

  # For any missing or invalid activity readings, we'll use a value of `0.1`.
  df['radon'] = df.activity.apply(lambda x: x if x > 0. else 0.1)
  # Make county names look nice.
  df['county'] = df.county.apply(lambda s: s.decode()).str.strip().str.title()
  # Remap categories to start from 0 and end at max(category).
  county_name = sorted(df.county.unique())
  df['county'] = df.county.astype(
      pd.api.types.CategoricalDtype(categories=county_name)).cat.codes
  county_name = list(map(str.strip, county_name))

  df['log_radon'] = df['radon'].apply(np.log)
  df['log_uranium_ppm'] = df['Uppm'].apply(np.log)
  df = df[['idnum', 'log_radon', 'floor', 'county', 'log_uranium_ppm']]

  return df, county_name

radon, county_name = load_and_preprocess_radon_dataset()
num_counties = len(county_name)
num_observations = len(radon)

# Create copies of variables as Tensors.
county = tf.convert_to_tensor(radon['county'], dtype=tf.int32)
floor = tf.convert_to_tensor(radon['floor'], dtype=tf.float32)
log_radon = tf.convert_to_tensor(radon['log_radon'], dtype=tf.float32)
log_uranium = tf.convert_to_tensor(radon['log_uranium_ppm'], dtype=tf.float32)

radon.head()

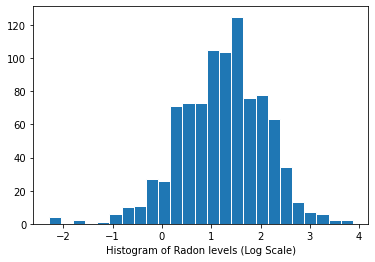

Distribution of radon levels (log scale):

plt.hist(log_radon.numpy(), bins=25, edgecolor='white')

plt.xlabel("Histogram of Radon levels (Log Scale)")

plt.show()

4 傳統方法

用於氡暴露建模的兩種傳統替代方案代表了偏差-變異數權衡的兩個極端

完全合併

將所有郡視為相同,並估計單一氡含量。

\[y_i = \alpha + \beta x_i + \epsilon_i\]

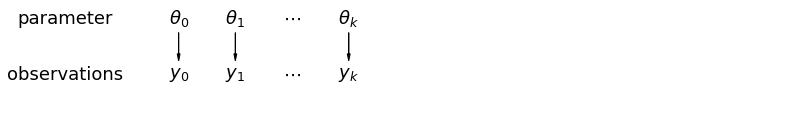

未合併

獨立地為每個郡的氡建模。

\(y_i = \alpha_{j[i]} + \beta x_i + \epsilon_i\) 其中 \(j = 1,\ldots,85\)

誤差 \(\epsilon_i\) 可能代表測量誤差、房屋內部的時間變異或房屋之間的變異。
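As a rough least-squares analogue of the two equations above (not the Bayesian fit used in this notebook), the sketch below estimates one shared intercept for the pooled case and one intercept per county for the unpooled case, with a common slope in both. The function names `fit_pooled` and `fit_unpooled` and the toy data are hypothetical, chosen only for illustration.

```python
def fit_pooled(x, y):
    """Ordinary least squares for y = alpha + beta * x with one shared alpha."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    beta = (sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
            / sum((xi - xbar) ** 2 for xi in x))
    return ybar - beta * xbar, beta


def fit_unpooled(x, y, group):
    """Least squares for y = alpha_j + beta * x with one alpha per group.

    Uses the within-group estimator: centre x and y by their group means,
    fit the shared slope, then recover each group's intercept.
    """
    means = {}
    for g in sorted(set(group)):
        xs = [xi for xi, gi in zip(x, group) if gi == g]
        ys = [yi for yi, gi in zip(y, group) if gi == g]
        means[g] = (sum(xs) / len(xs), sum(ys) / len(ys))
    num = sum((xi - means[g][0]) * (yi - means[g][1])
              for xi, yi, g in zip(x, y, group))
    den = sum((xi - means[g][0]) ** 2 for xi, g in zip(x, group))
    beta = num / den
    alphas = {g: ybar - beta * xbar for g, (xbar, ybar) in means.items()}
    return alphas, beta


# Hypothetical toy data: two counties with true intercepts 1.0 and 2.0
# and a shared floor effect of -0.5 (no noise).
toy_county = [0, 0, 0, 1, 1]
toy_floor = [0.0, 0.0, 1.0, 0.0, 1.0]
toy_log_radon = [1.0, 1.0, 0.5, 2.0, 1.5]

pooled_alpha, pooled_beta = fit_pooled(toy_floor, toy_log_radon)
unpooled_alphas, unpooled_beta = fit_unpooled(
    toy_floor, toy_log_radon, toy_county)
print("pooled intercept:", pooled_alpha)
print("unpooled intercepts:", unpooled_alphas)
```

On this toy data the pooled intercept falls between the two county intercepts, which is the averaging that complete pooling imposes.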

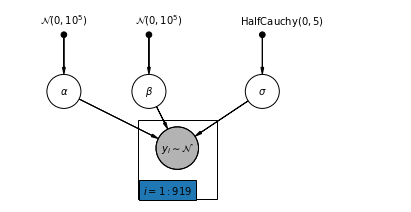

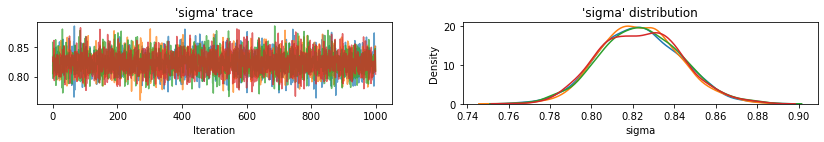

4.1 Complete Pooling Model

Below, we fit the complete pooling model using Hamiltonian Monte Carlo.

@tf.function
def affine(x, kernel_diag, bias=tf.zeros([])):
  """`kernel_diag * x + bias` with broadcasting."""
  kernel_diag = tf.ones_like(x) * kernel_diag
  bias = tf.ones_like(x) * bias
  return x * kernel_diag + bias

def pooled_model(floor):
  """Creates a joint distribution representing our generative process."""
  return tfd.JointDistributionSequential([
      tfd.Normal(loc=0., scale=1e5),  # alpha
      tfd.Normal(loc=0., scale=1e5),  # beta
      tfd.HalfCauchy(loc=0., scale=5),  # sigma
      lambda s, b1, b0: tfd.MultivariateNormalDiag(  # y
          loc=affine(floor, b1[..., tf.newaxis], b0[..., tf.newaxis]),
          scale_identity_multiplier=s)
  ])

@tf.function
def pooled_log_prob(alpha, beta, sigma):
  """Computes `joint_log_prob` pinned at `log_radon`."""
  return pooled_model(floor).log_prob([alpha, beta, sigma, log_radon])

@tf.function
def sample_pooled(num_chains, num_results, num_burnin_steps, num_observations):
  """Samples from the pooled model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=pooled_log_prob,
      num_leapfrog_steps=10,
      step_size=0.005)

  initial_state = [
      tf.zeros([num_chains], name='init_alpha'),
      tf.zeros([num_chains], name='init_beta'),
      tf.ones([num_chains], name='init_sigma')
  ]

  # Constrain `sigma` to the positive real axis. Other variables are
  # unconstrained.
  unconstraining_bijectors = [
      tfb.Identity(),  # alpha
      tfb.Identity(),  # beta
      tfb.Exp()        # sigma
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

PooledModel = collections.namedtuple('PooledModel', ['alpha', 'beta', 'sigma'])

samples, acceptance_probs = sample_pooled(
    num_chains=4,
    num_results=1000,
    num_burnin_steps=1000,
    num_observations=num_observations)

print('Acceptance Probabilities for each chain: ', acceptance_probs.numpy())
pooled_samples = PooledModel._make(samples)

Acceptance Probabilities for each chain: [0.999 0.996 0.995 0.995]
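The acceptance probabilities above come from the Metropolis correction applied at the end of each HMC proposal. The following is a minimal pure-Python sketch of that mechanism: a toy one-dimensional sampler targeting a standard normal, not TFP's implementation. The function name `hmc_standard_normal` and the settings used here are assumptions made for illustration.

```python
import math
import random


def hmc_standard_normal(num_results, step_size, num_leapfrog_steps, seed=0):
    """Toy 1-D HMC for a standard normal; returns (samples, acceptance_rate)."""
    rng = random.Random(seed)

    def log_prob(x):
        return -0.5 * x * x  # unnormalized log density

    def grad_log_prob(x):
        return -x

    x = 0.0
    samples = []
    num_accepted = 0
    for _ in range(num_results):
        p = rng.gauss(0.0, 1.0)  # resample the momentum
        x_new, p_new = x, p
        # Leapfrog integration: half momentum step, alternating full steps,
        # then a final half momentum step.
        p_new += 0.5 * step_size * grad_log_prob(x_new)
        for step in range(num_leapfrog_steps):
            x_new += step_size * p_new
            if step < num_leapfrog_steps - 1:
                p_new += step_size * grad_log_prob(x_new)
        p_new += 0.5 * step_size * grad_log_prob(x_new)
        # Metropolis correction based on the change in total energy.
        log_accept = ((log_prob(x_new) - 0.5 * p_new * p_new)
                      - (log_prob(x) - 0.5 * p * p))
        if rng.random() < math.exp(min(0.0, log_accept)):
            x = x_new
            num_accepted += 1
        samples.append(x)
    return samples, num_accepted / num_results


toy_samples, toy_acceptance = hmc_standard_normal(
    num_results=2000, step_size=0.2, num_leapfrog_steps=8)
print("acceptance rate:", toy_acceptance)
```

A small step size keeps the integration error low, so nearly every proposal is accepted; larger steps lower the acceptance rate.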

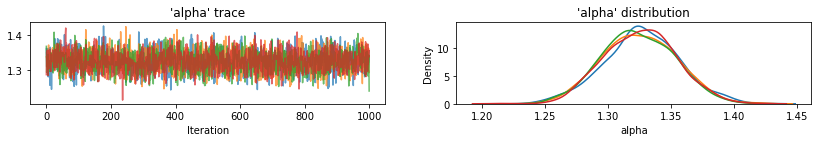

for var, var_samples in pooled_samples._asdict().items():
  print('R-hat for ', var, ':\t',
        tfp.mcmc.potential_scale_reduction(var_samples).numpy())

R-hat for alpha : 1.0019042
R-hat for beta : 1.0135655
R-hat for sigma : 0.99958754
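R-hat values close to 1 suggest that the chains agree with one another. The sketch below computes the classic Gelman-Rubin form of the statistic in plain Python. `tfp.mcmc.potential_scale_reduction` may differ in detail, and the function name `gelman_rubin_rhat` and the synthetic chains are hypothetical.

```python
import math
import random


def gelman_rubin_rhat(chains):
    """Classic Gelman-Rubin R-hat for a list of equal-length chains."""
    m = len(chains)
    n = len(chains[0])
    chain_means = [sum(c) / n for c in chains]
    grand_mean = sum(chain_means) / m
    # Between-chain variance B and mean within-chain variance W.
    b = n / (m - 1) * sum((cm - grand_mean) ** 2 for cm in chain_means)
    w = sum(
        sum((x - cm) ** 2 for x in c) / (n - 1)
        for c, cm in zip(chains, chain_means)) / m
    # Pooled variance estimate; R-hat compares it with W.
    var_plus = (n - 1) / n * w + b / n
    return math.sqrt(var_plus / w)


rng = random.Random(0)
# Four chains drawn from the same distribution (well mixed).
mixed_chains = [[rng.gauss(0.0, 1.0) for _ in range(500)] for _ in range(4)]
# Four chains stuck around two different means (poorly mixed).
stuck_chains = [[rng.gauss(mu, 1.0) for _ in range(500)]
                for mu in (0.0, 0.0, 3.0, 3.0)]

print("well mixed:", gelman_rubin_rhat(mixed_chains))
print("stuck:", gelman_rubin_rhat(stuck_chains))
```

Chains that disagree about the mean inflate the between-chain term, which pushes the statistic well above 1.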

def reduce_samples(var_samples, reduce_fn):
  """Reduces across leading two dims using reduce_fn."""
  # Collapse the first two dimensions, typically (num_chains, num_samples), and
  # compute np.mean or np.std along the remaining axis.
  if isinstance(var_samples, tf.Tensor):
    var_samples = var_samples.numpy()  # convert to numpy array
  var_samples = np.reshape(var_samples, (-1,) + var_samples.shape[2:])
  return np.apply_along_axis(reduce_fn, axis=0, arr=var_samples)

sample_mean = lambda samples : reduce_samples(samples, np.mean)

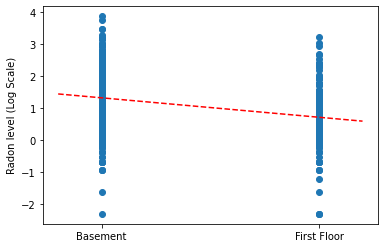

Plot the point estimates of the slope and intercept for the complete pooling model.

LinearEstimates = collections.namedtuple('LinearEstimates',

['intercept', 'slope'])

pooled_estimate = LinearEstimates(

intercept=sample_mean(pooled_samples.alpha),

slope=sample_mean(pooled_samples.beta)

)

plt.scatter(radon.floor, radon.log_radon)

xvals = np.linspace(-0.2, 1.2)

plt.ylabel('Radon level (Log Scale)')

plt.xticks([0, 1], ['Basement', 'First Floor'])

plt.plot(xvals, pooled_estimate.intercept + pooled_estimate.slope * xvals, 'r--')

plt.show()

Utility function to plot traces of sampled variables.

for var, var_samples in pooled_samples._asdict().items():
  plot_traces(var, samples=var_samples, num_chains=4)

Next, we estimate the radon levels for each county in the unpooled model.

4.2 Unpooled Model

def unpooled_model(floor, county):
  """Creates a joint distribution for the unpooled model."""
  return tfd.JointDistributionSequential([
      tfd.MultivariateNormalDiag(  # alpha
          loc=tf.zeros([num_counties]), scale_identity_multiplier=1e5),
      tfd.Normal(loc=0., scale=1e5),  # beta
      tfd.HalfCauchy(loc=0., scale=5),  # sigma
      lambda s, b1, b0: tfd.MultivariateNormalDiag(  # y
          loc=affine(
              floor, b1[..., tf.newaxis], tf.gather(b0, county, axis=-1)),
          scale_identity_multiplier=s)
  ])

@tf.function
def unpooled_log_prob(beta0, beta1, sigma):
  """Computes `joint_log_prob` pinned at `log_radon`."""
  return (
      unpooled_model(floor, county).log_prob([beta0, beta1, sigma, log_radon]))

@tf.function
def sample_unpooled(num_chains, num_results, num_burnin_steps):
  """Samples from the unpooled model."""
  # Initialize the HMC transition kernel.
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=unpooled_log_prob,
      num_leapfrog_steps=10,
      step_size=0.025)

  initial_state = [
      tf.zeros([num_chains, num_counties], name='init_beta0'),
      tf.zeros([num_chains], name='init_beta1'),
      tf.ones([num_chains], name='init_sigma')
  ]

  # Constrain `sigma` to the positive real axis. Other variables are
  # unconstrained.
  unconstraining_bijectors = [
      tfb.Identity(),  # alpha
      tfb.Identity(),  # beta
      tfb.Exp()        # sigma
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

UnpooledModel = collections.namedtuple('UnpooledModel',
                                       ['alpha', 'beta', 'sigma'])

samples, acceptance_probs = sample_unpooled(
    num_chains=4, num_results=1000, num_burnin_steps=1000)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
unpooled_samples = UnpooledModel._make(samples)

print('R-hat for beta:',
      tfp.mcmc.potential_scale_reduction(unpooled_samples.beta).numpy())
print('R-hat for sigma:',
      tfp.mcmc.potential_scale_reduction(unpooled_samples.sigma).numpy())

Acceptance Probabilities: [0.895 0.897 0.893 0.901]
R-hat for beta: 1.0052257
R-hat for sigma: 1.0035229

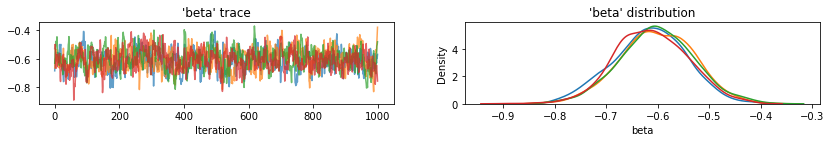

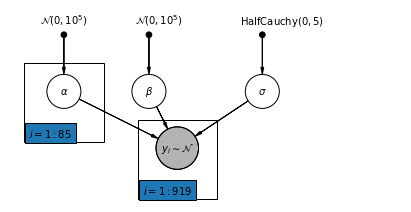

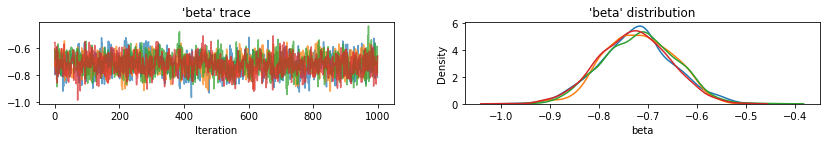

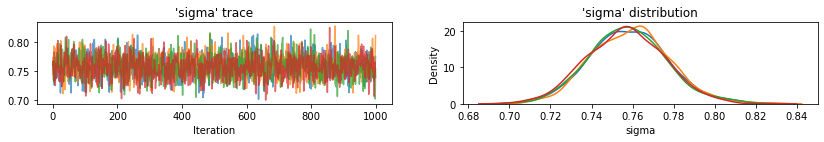

plot_traces(var_name='beta', samples=unpooled_samples.beta, num_chains=4)

plot_traces(var_name='sigma', samples=unpooled_samples.sigma, num_chains=4)

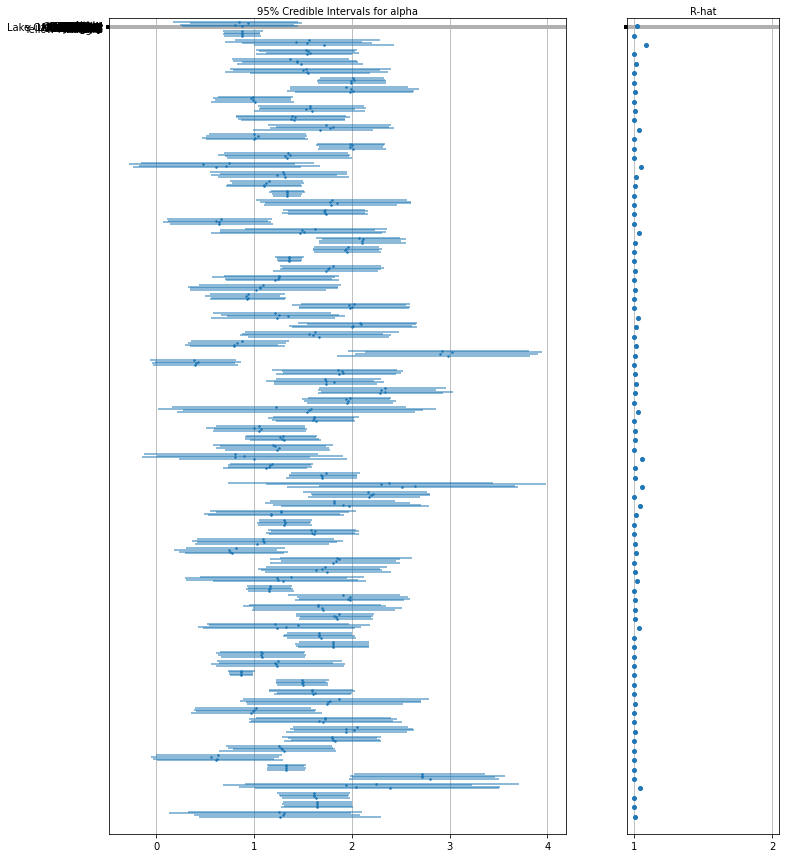

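Here are the unpooled county expected values for the intercept, along with 95% credible intervals for each chain. We also report the R-hat value for each county's estimate.

Utility function for forest plots.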

forest_plot(

num_chains=4,

num_vars=num_counties,

var_name='alpha',

var_labels=county_name,

samples=unpooled_samples.alpha.numpy())

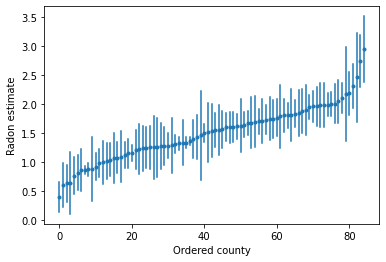

We can plot the ordered estimates to identify counties with high radon levels:

unpooled_intercepts = reduce_samples(unpooled_samples.alpha, np.mean)
unpooled_intercepts_se = reduce_samples(unpooled_samples.alpha, np.std)

def plot_ordered_estimates():
  means = pd.Series(unpooled_intercepts, index=county_name)
  std_errors = pd.Series(unpooled_intercepts_se, index=county_name)
  order = means.sort_values().index

  plt.plot(range(num_counties), means[order], '.')
  for i, m, se in zip(range(num_counties), means[order], std_errors[order]):
    plt.plot([i, i], [m - se, m + se], 'C0-')
  plt.xlabel('Ordered county')
  plt.ylabel('Radon estimate')
  plt.show()

plot_ordered_estimates()

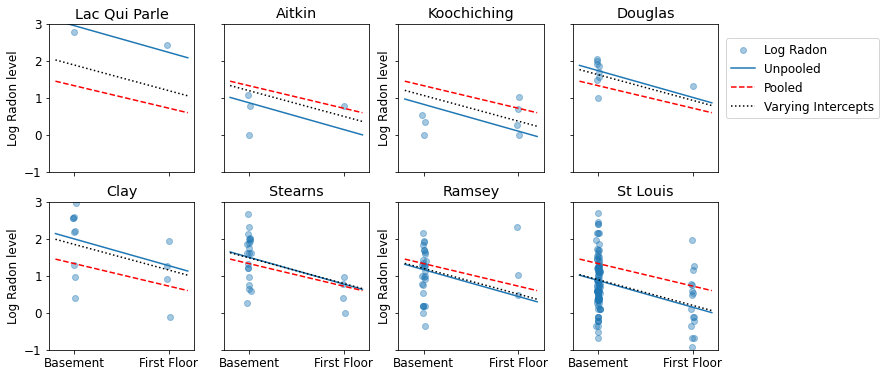

Utility function to plot estimates for a sample set of counties.

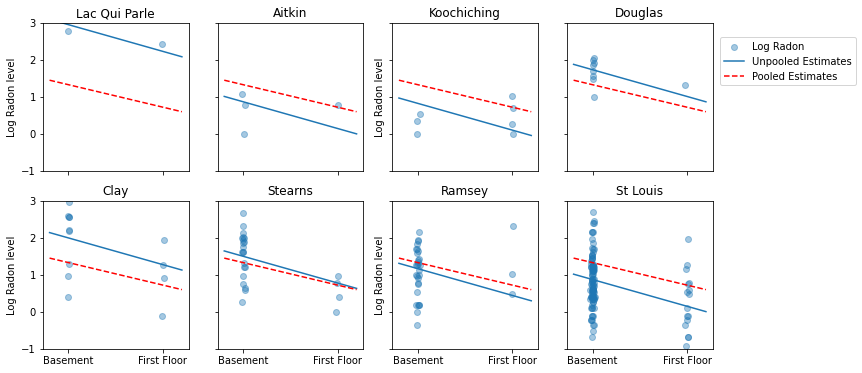

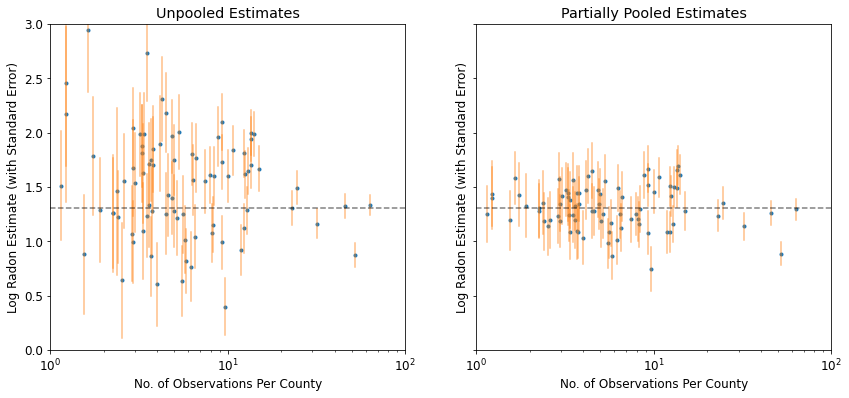

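Here are visual comparisons between the pooled and unpooled estimates for a subset of counties representing a range of sample sizes.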

unpooled_estimates = LinearEstimates(

sample_mean(unpooled_samples.alpha),

sample_mean(unpooled_samples.beta)

)

sample_counties = ('Lac Qui Parle', 'Aitkin', 'Koochiching', 'Douglas', 'Clay',

'Stearns', 'Ramsey', 'St Louis')

plot_estimates(

linear_estimates=[unpooled_estimates, pooled_estimate],

labels=['Unpooled Estimates', 'Pooled Estimates'],

sample_counties=sample_counties)

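Neither of these models is satisfactory:

- If we are trying to identify high-radon counties, pooling is not useful.

- We do not trust extreme unpooled estimates produced by models using few observations.

5 Multilevel and Hierarchical Models

When we pool our data, we lose the information that different data points came from different counties. This means that each radon-level observation is sampled from the same probability distribution. Such a model fails to learn any variation in the sampling unit that is inherent within a group (e.g. a county). It only accounts for sampling variance.

When we analyze data unpooled, we imply that they are sampled independently from separate models. At the opposite extreme from the pooled case, this approach claims that differences between sampling units are too large to combine them: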

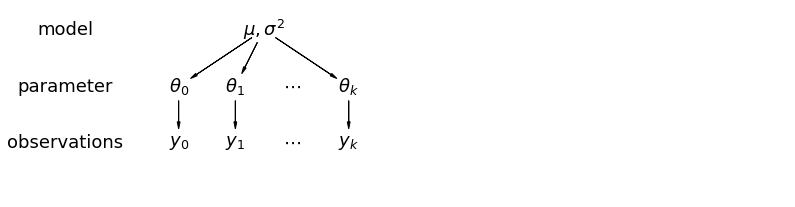

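In a hierarchical model, parameters are viewed as a sample from a population distribution of parameters. Thus, we view them as being neither entirely different nor exactly the same. This is known as partial pooling.

5.1 Partial Pooling

The simplest partial pooling model for the household radon dataset is one that simply estimates radon levels, without any predictors at either the group or individual level. An example of an individual-level predictor is whether the data point is from the basement or the first floor. A group-level predictor can be the county-wide mean uranium level.

A partial pooling model represents a compromise between the pooled and unpooled extremes: approximately a weighted average (based on sample size) of the unpooled county estimates and the pooled estimate.

Let \(\hat{\alpha}_j\) be the estimated log-radon level in county \(j\). It is just an intercept; we ignore slopes for now. \(n_j\) is the number of observations from county \(j\), \(\bar{y}_j\) is the mean of those observations, and \(\bar{y}\) is the mean over all observations. \(\sigma_{\alpha}^2\) and \(\sigma_y^2\) are the variance of the county-level parameters and the sampling variance, respectively. A partial pooling model could then posit:

\[\hat{\alpha}_j \approx \frac{(n_j/\sigma_y^2)\bar{y}_j + (1/\sigma_{\alpha}^2)\bar{y} }{(n_j/\sigma_y^2) + (1/\sigma_{\alpha}^2)}\]

We expect the following when using partial pooling:

- Estimates for counties with smaller sample sizes will shrink towards the state-wide average.

- Estimates for counties with larger sample sizes will be closer to the unpooled county estimates.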
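The weighted-average formula above can be evaluated directly to see this shrinkage. The following is a minimal sketch; the function name `partial_pooling_estimate` and all numeric values are hypothetical, chosen only to illustrate the behaviour.

```python
def partial_pooling_estimate(n_j, ybar_j, ybar, sigma_y, sigma_alpha):
    """Precision-weighted average of a county mean and the overall mean."""
    w_county = n_j / sigma_y ** 2      # weight on the county's own mean
    w_overall = 1.0 / sigma_alpha ** 2  # weight on the overall mean
    return (w_county * ybar_j + w_overall * ybar) / (w_county + w_overall)


# Hypothetical values: overall mean 1.3, county mean 2.5.
ybar, ybar_j = 1.3, 2.5
sigma_y, sigma_alpha = 0.8, 0.3

small_county = partial_pooling_estimate(2, ybar_j, ybar, sigma_y, sigma_alpha)
large_county = partial_pooling_estimate(100, ybar_j, ybar, sigma_y, sigma_alpha)
print("n_j = 2:  ", small_county)
print("n_j = 100:", large_county)
```

With only two observations the estimate stays near the overall mean, while with one hundred it sits close to the county's own mean.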

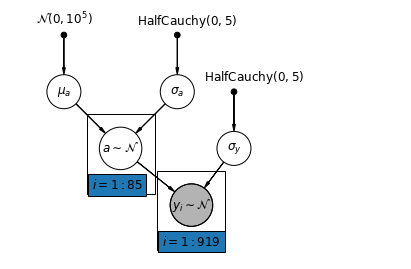

def partial_pooling_model(county):
  """Creates a joint distribution for the partial pooling model."""
  return tfd.JointDistributionSequential([
      tfd.Normal(loc=0., scale=1e5),  # mu_a
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      lambda sigma_a, mu_a: tfd.MultivariateNormalDiag(  # a
          loc=mu_a[..., tf.newaxis] * tf.ones([num_counties])[tf.newaxis, ...],
          scale_identity_multiplier=sigma_a),
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_y
      lambda sigma_y, a: tfd.MultivariateNormalDiag(  # y
          loc=tf.gather(a, county, axis=-1),
          scale_identity_multiplier=sigma_y)
  ])

@tf.function
def partial_pooling_log_prob(mu_a, sigma_a, a, sigma_y):
  """Computes joint log prob pinned at `log_radon`."""
  return partial_pooling_model(county).log_prob(
      [mu_a, sigma_a, a, sigma_y, log_radon])

@tf.function
def sample_partial_pooling(num_chains, num_results, num_burnin_steps):
  """Samples from the partial pooling model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=partial_pooling_log_prob,
      num_leapfrog_steps=10,
      step_size=0.01)

  initial_state = [
      tf.zeros([num_chains], name='init_mu_a'),
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains, num_counties], name='init_a'),
      tf.ones([num_chains], name='init_sigma_y')
  ]
  unconstraining_bijectors = [
      tfb.Identity(),  # mu_a
      tfb.Exp(),       # sigma_a
      tfb.Identity(),  # a
      tfb.Exp()        # sigma_y
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

PartialPoolingModel = collections.namedtuple(
    'PartialPoolingModel', ['mu_a', 'sigma_a', 'a', 'sigma_y'])

samples, acceptance_probs = sample_partial_pooling(
    num_chains=4, num_results=1000, num_burnin_steps=1000)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
partial_pooling_samples = PartialPoolingModel._make(samples)

Acceptance Probabilities: [0.989 0.978 0.987 0.987]

for var in ['mu_a', 'sigma_a', 'sigma_y']:
  print(
      'R-hat for ', var, '\t:',
      tfp.mcmc.potential_scale_reduction(getattr(partial_pooling_samples,
                                                 var)).numpy())

R-hat for mu_a : 1.0276643
R-hat for sigma_a : 1.0204039
R-hat for sigma_y : 1.0008202

partial_pooling_intercepts = reduce_samples(
    partial_pooling_samples.a.numpy(), np.mean)
partial_pooling_intercepts_se = reduce_samples(
    partial_pooling_samples.a.numpy(), np.std)

def plot_unpooled_vs_partial_pooling_estimates():
  fig, axes = plt.subplots(1, 2, figsize=(14, 6), sharex=True, sharey=True)

  # Order counties by number of observations (and add some jitter).
  num_obs_per_county = (
      radon.groupby('county')['idnum'].count().values.astype(np.float32))
  num_obs_per_county += np.random.normal(scale=0.5, size=num_counties)

  intercepts_list = [unpooled_intercepts, partial_pooling_intercepts]
  intercepts_se_list = [unpooled_intercepts_se, partial_pooling_intercepts_se]

  for ax, means, std_errors in zip(axes, intercepts_list, intercepts_se_list):
    ax.plot(num_obs_per_county, means, 'C0.')
    for n, m, se in zip(num_obs_per_county, means, std_errors):
      ax.plot([n, n], [m - se, m + se], 'C1-', alpha=.5)

  for ax in axes:
    ax.set_xscale('log')
    ax.set_xlabel('No. of Observations Per County')
    ax.set_xlim(1, 100)
    ax.set_ylabel('Log Radon Estimate (with Standard Error)')
    ax.set_ylim(0, 3)
    ax.hlines(partial_pooling_intercepts.mean(), .9, 125, 'k', '--', alpha=.5)

  axes[0].set_title('Unpooled Estimates')
  axes[1].set_title('Partially Pooled Estimates')

plot_unpooled_vs_partial_pooling_estimates()

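Note the difference between the unpooled and partially pooled estimates, particularly at smaller sample sizes. The former are both more extreme and more imprecise.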

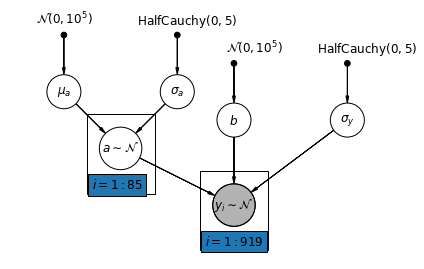

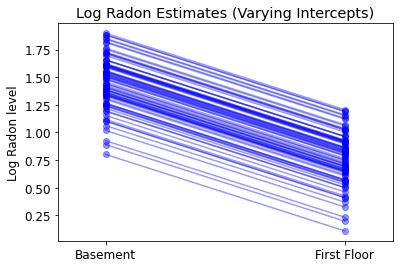

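5.2 Varying Intercepts

We now consider a more complex model that allows intercepts to vary across counties, according to a random effect.

\(y_i = \alpha_{j[i]} + \beta x_{i} + \epsilon_i\) where \(\epsilon_i \sim N(0, \sigma_y^2)\) and the intercept random effect is:

\[\alpha_{j[i]} \sim N(\mu_{\alpha}, \sigma_{\alpha}^2)\]

The slope \(\beta\), which lets the observation vary according to the location of measurement (basement or first floor), is still a fixed effect shared between different counties.

As with the unpooled model, we set a separate intercept for each county. Rather than fitting separate least squares regression models for each county, however, multilevel modeling shares strength among counties, allowing for more reasonable inference in counties with little data.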

def varying_intercept_model(floor, county):
  """Creates a joint distribution for the varying intercept model."""
  return tfd.JointDistributionSequential([
      tfd.Normal(loc=0., scale=1e5),  # mu_a
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      lambda sigma_a, mu_a: tfd.MultivariateNormalDiag(  # a
          loc=affine(tf.ones([num_counties]), mu_a[..., tf.newaxis]),
          scale_identity_multiplier=sigma_a),
      tfd.Normal(loc=0., scale=1e5),  # b
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_y
      lambda sigma_y, b, a: tfd.MultivariateNormalDiag(  # y
          loc=affine(floor, b[..., tf.newaxis], tf.gather(a, county, axis=-1)),
          scale_identity_multiplier=sigma_y)
  ])

def varying_intercept_log_prob(mu_a, sigma_a, a, b, sigma_y):
  """Computes joint log prob pinned at `log_radon`."""
  return varying_intercept_model(floor, county).log_prob(
      [mu_a, sigma_a, a, b, sigma_y, log_radon])

@tf.function
def sample_varying_intercepts(num_chains, num_results, num_burnin_steps):
  """Samples from the varying intercepts model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=varying_intercept_log_prob,
      num_leapfrog_steps=10,
      step_size=0.01)

  initial_state = [
      tf.zeros([num_chains], name='init_mu_a'),
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains, num_counties], name='init_a'),
      tf.zeros([num_chains], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y')
  ]
  unconstraining_bijectors = [
      tfb.Identity(),  # mu_a
      tfb.Exp(),       # sigma_a
      tfb.Identity(),  # a
      tfb.Identity(),  # b
      tfb.Exp()        # sigma_y
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

VaryingInterceptsModel = collections.namedtuple(
    'VaryingInterceptsModel', ['mu_a', 'sigma_a', 'a', 'b', 'sigma_y'])

samples, acceptance_probs = sample_varying_intercepts(
    num_chains=4, num_results=1000, num_burnin_steps=1000)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
varying_intercepts_samples = VaryingInterceptsModel._make(samples)

Acceptance Probabilities: [0.989 0.98 0.988 0.983]

for var in ['mu_a', 'sigma_a', 'b', 'sigma_y']:
  print(
      'R-hat for ', var, ': ',
      tfp.mcmc.potential_scale_reduction(
          getattr(varying_intercepts_samples, var)).numpy())

R-hat for mu_a : 1.0196627
R-hat for sigma_a : 1.0671698
R-hat for b : 1.0017126
R-hat for sigma_y : 0.99950683

varying_intercepts_estimates = LinearEstimates(

sample_mean(varying_intercepts_samples.a),

sample_mean(varying_intercepts_samples.b))

sample_counties = ('Lac Qui Parle', 'Aitkin', 'Koochiching', 'Douglas', 'Clay',

'Stearns', 'Ramsey', 'St Louis')

plot_estimates(

linear_estimates=[

unpooled_estimates, pooled_estimate, varying_intercepts_estimates

],

labels=['Unpooled', 'Pooled', 'Varying Intercepts'],

sample_counties=sample_counties)

def plot_posterior(var_name, var_samples):
  if isinstance(var_samples, tf.Tensor):
    var_samples = var_samples.numpy()  # convert to numpy array

  fig = plt.figure(figsize=(10, 3))
  ax = fig.add_subplot(111)
  ax.hist(var_samples.flatten(), bins=40, edgecolor='white')
  sample_mean = var_samples.mean()
  ax.text(
      sample_mean,
      100,
      'mean={:.3f}'.format(sample_mean),
      color='white',
      fontsize=12)
  ax.set_xlabel('posterior of ' + var_name)
  plt.show()

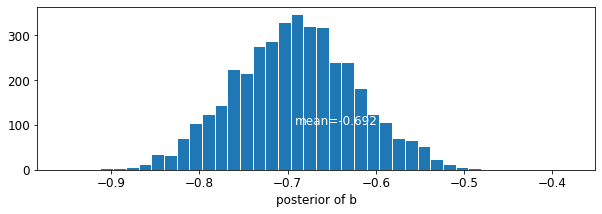

plot_posterior('b', varying_intercepts_samples.b)

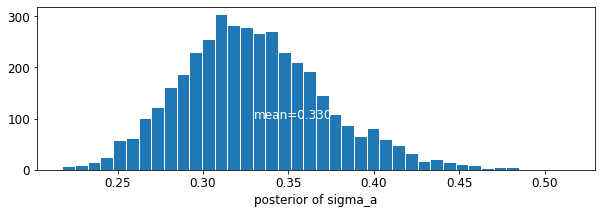

plot_posterior('sigma_a', varying_intercepts_samples.sigma_a)

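The estimate for the floor coefficient is approximately -0.69. After accounting for county, this can be interpreted as houses without basements having about half (\(\exp(-0.69) = 0.50\)) the radon levels of those with basements.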
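Because the model is fit on the log scale, a coefficient translates into a multiplicative effect once it is exponentiated. A small sketch of that arithmetic, using the value quoted in the text (the variable names are illustrative only):

```python
import math

# Floor coefficient on the log-radon scale, as quoted in the text.
floor_coefficient = -0.69

# Exponentiating gives the ratio of first-floor to basement radon levels.
radon_ratio = math.exp(floor_coefficient)
percent_lower = (1.0 - radon_ratio) * 100.0

print(f"first floor / basement ratio: {radon_ratio:.2f}")
print(f"first-floor readings are about {percent_lower:.0f}% lower")
```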

for var in ['b']:
  var_samples = getattr(varying_intercepts_samples, var)
  mean = var_samples.numpy().mean()
  std = var_samples.numpy().std()
  r_hat = tfp.mcmc.potential_scale_reduction(var_samples).numpy()
  n_eff = tfp.mcmc.effective_sample_size(var_samples).numpy().sum()
  print('var: ', var, ' mean: ', mean, ' std: ', std, ' n_eff: ', n_eff,
        ' r_hat: ', r_hat)

var: b mean: -0.6920927 std: 0.07004689 n_eff: 430.58865 r_hat: 1.0017126

def plot_intercepts_and_slopes(linear_estimates, title):
  xvals = np.arange(2)
  intercepts = np.ones([num_counties]) * linear_estimates.intercept
  slopes = np.ones([num_counties]) * linear_estimates.slope
  fig, ax = plt.subplots()
  for c in range(num_counties):
    ax.plot(xvals, intercepts[c] + slopes[c] * xvals, 'bo-', alpha=0.4)
  plt.xlim(-0.2, 1.2)
  ax.set_xticks([0, 1])
  ax.set_xticklabels(['Basement', 'First Floor'])
  ax.set_ylabel('Log Radon level')
  plt.title(title)
  plt.show()

plot_intercepts_and_slopes(varying_intercepts_estimates,
                           'Log Radon Estimates (Varying Intercepts)')

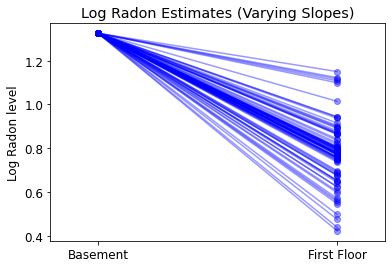

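5.3 Varying Slopes

Alternatively, we can posit a model that allows the counties to vary according to how the location of measurement (basement or first floor) influences the radon reading. In this case the intercept \(\alpha\) is shared between counties.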

\[y_i = \alpha + \beta_{j[i]} x_{i} + \epsilon_i\]

def varying_slopes_model(floor, county):
  """Creates a joint distribution for the varying slopes model."""
  return tfd.JointDistributionSequential([
      tfd.Normal(loc=0., scale=1e5),  # mu_b
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_b
      tfd.Normal(loc=0., scale=1e5),  # a
      lambda _, sigma_b, mu_b: tfd.MultivariateNormalDiag(  # b
          loc=affine(tf.ones([num_counties]), mu_b[..., tf.newaxis]),
          scale_identity_multiplier=sigma_b),
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_y
      lambda sigma_y, b, a: tfd.MultivariateNormalDiag(  # y
          loc=affine(floor, tf.gather(b, county, axis=-1), a[..., tf.newaxis]),
          scale_identity_multiplier=sigma_y)
  ])

def varying_slopes_log_prob(mu_b, sigma_b, a, b, sigma_y):
  return varying_slopes_model(floor, county).log_prob(
      [mu_b, sigma_b, a, b, sigma_y, log_radon])

@tf.function
def sample_varying_slopes(num_chains, num_results, num_burnin_steps):
  """Samples from the varying slopes model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=varying_slopes_log_prob,
      num_leapfrog_steps=25,
      step_size=0.01)

  initial_state = [
      tf.zeros([num_chains], name='init_mu_b'),
      tf.ones([num_chains], name='init_sigma_b'),
      tf.zeros([num_chains], name='init_a'),
      tf.zeros([num_chains, num_counties], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y')
  ]
  unconstraining_bijectors = [
      tfb.Identity(),  # mu_b
      tfb.Exp(),       # sigma_b
      tfb.Identity(),  # a
      tfb.Identity(),  # b
      tfb.Exp()        # sigma_y
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

VaryingSlopesModel = collections.namedtuple(
    'VaryingSlopesModel', ['mu_b', 'sigma_b', 'a', 'b', 'sigma_y'])

samples, acceptance_probs = sample_varying_slopes(
    num_chains=4, num_results=1000, num_burnin_steps=1000)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
varying_slopes_samples = VaryingSlopesModel._make(samples)

Acceptance Probabilities: [0.98 0.982 0.986 0.988]

for var in ['mu_b', 'sigma_b', 'a', 'sigma_y']:
  print(
      'R-hat for ', var, '\t: ',
      tfp.mcmc.potential_scale_reduction(getattr(varying_slopes_samples,
                                                 var)).numpy())

R-hat for mu_b : 1.0972525
R-hat for sigma_b : 1.1294962
R-hat for a : 1.0047072
R-hat for sigma_y : 1.0015919

varying_slopes_estimates = LinearEstimates(

sample_mean(varying_slopes_samples.a),

sample_mean(varying_slopes_samples.b))

plot_intercepts_and_slopes(varying_slopes_estimates,

'Log Radon Estimates (Varying Slopes)')

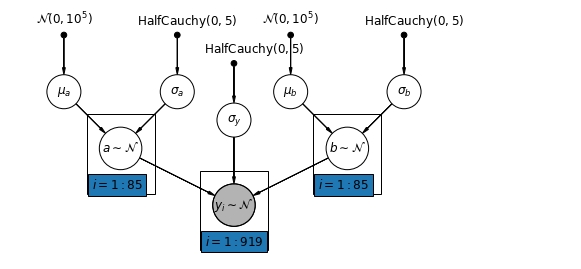

5.4 Varying Intercepts and Slopes

The most general model allows both the intercept and slope to vary by county:

\[y_i = \alpha_{j[i]} + \beta_{j[i]} x_{i} + \epsilon_i\]

def varying_intercepts_and_slopes_model(floor, county):
  """Creates a joint distribution for the varying intercepts and slopes model."""
  return tfd.JointDistributionSequential([
      tfd.Normal(loc=0., scale=1e5),  # mu_a
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      tfd.Normal(loc=0., scale=1e5),  # mu_b
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_b
      lambda sigma_b, mu_b, sigma_a, mu_a: tfd.MultivariateNormalDiag(  # a
          loc=affine(tf.ones([num_counties]), mu_a[..., tf.newaxis]),
          scale_identity_multiplier=sigma_a),
      lambda _, sigma_b, mu_b: tfd.MultivariateNormalDiag(  # b
          loc=affine(tf.ones([num_counties]), mu_b[..., tf.newaxis]),
          scale_identity_multiplier=sigma_b),
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_y
      lambda sigma_y, b, a: tfd.MultivariateNormalDiag(  # y
          loc=affine(floor, tf.gather(b, county, axis=-1),
                     tf.gather(a, county, axis=-1)),
          scale_identity_multiplier=sigma_y)
  ])

@tf.function
def varying_intercepts_and_slopes_log_prob(mu_a, sigma_a, mu_b, sigma_b, a, b,
                                           sigma_y):
  """Computes joint log prob pinned at `log_radon`."""
  return varying_intercepts_and_slopes_model(floor, county).log_prob(
      [mu_a, sigma_a, mu_b, sigma_b, a, b, sigma_y, log_radon])

@tf.function
def sample_varying_intercepts_and_slopes(num_chains, num_results,
                                         num_burnin_steps):
  """Samples from the varying intercepts and slopes model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=varying_intercepts_and_slopes_log_prob,
      num_leapfrog_steps=50,
      step_size=0.01)

  initial_state = [
      tf.zeros([num_chains], name='init_mu_a'),
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains], name='init_mu_b'),
      tf.ones([num_chains], name='init_sigma_b'),
      tf.zeros([num_chains, num_counties], name='init_a'),
      tf.zeros([num_chains, num_counties], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y')
  ]
  unconstraining_bijectors = [
      tfb.Identity(),  # mu_a
      tfb.Exp(),       # sigma_a
      tfb.Identity(),  # mu_b
      tfb.Exp(),       # sigma_b
      tfb.Identity(),  # a
      tfb.Identity(),  # b
      tfb.Exp()        # sigma_y
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

VaryingInterceptsAndSlopesModel = collections.namedtuple(
    'VaryingInterceptsAndSlopesModel',
    ['mu_a', 'sigma_a', 'mu_b', 'sigma_b', 'a', 'b', 'sigma_y'])

samples, acceptance_probs = sample_varying_intercepts_and_slopes(
    num_chains=4, num_results=1000, num_burnin_steps=500)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
varying_intercepts_and_slopes_samples = VaryingInterceptsAndSlopesModel._make(
    samples)

Acceptance Probabilities: [0.989 0.958 0.984 0.985]

for var in ['mu_a', 'sigma_a', 'mu_b', 'sigma_b']:
  print(
      'R-hat for ', var, '\t: ',
      tfp.mcmc.potential_scale_reduction(
          getattr(varying_intercepts_and_slopes_samples, var)).numpy())

R-hat for mu_a : 1.0002819
R-hat for sigma_a : 1.0014255
R-hat for mu_b : 1.0111941
R-hat for sigma_b : 1.0994663

varying_intercepts_and_slopes_estimates = LinearEstimates(

sample_mean(varying_intercepts_and_slopes_samples.a),

sample_mean(varying_intercepts_and_slopes_samples.b))

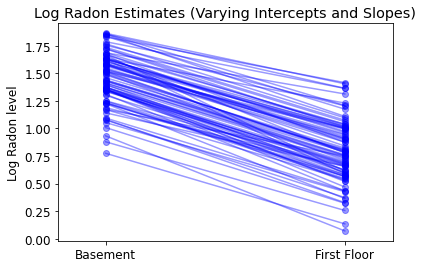

plot_intercepts_and_slopes(

varying_intercepts_and_slopes_estimates,

'Log Radon Estimates (Varying Intercepts and Slopes)')

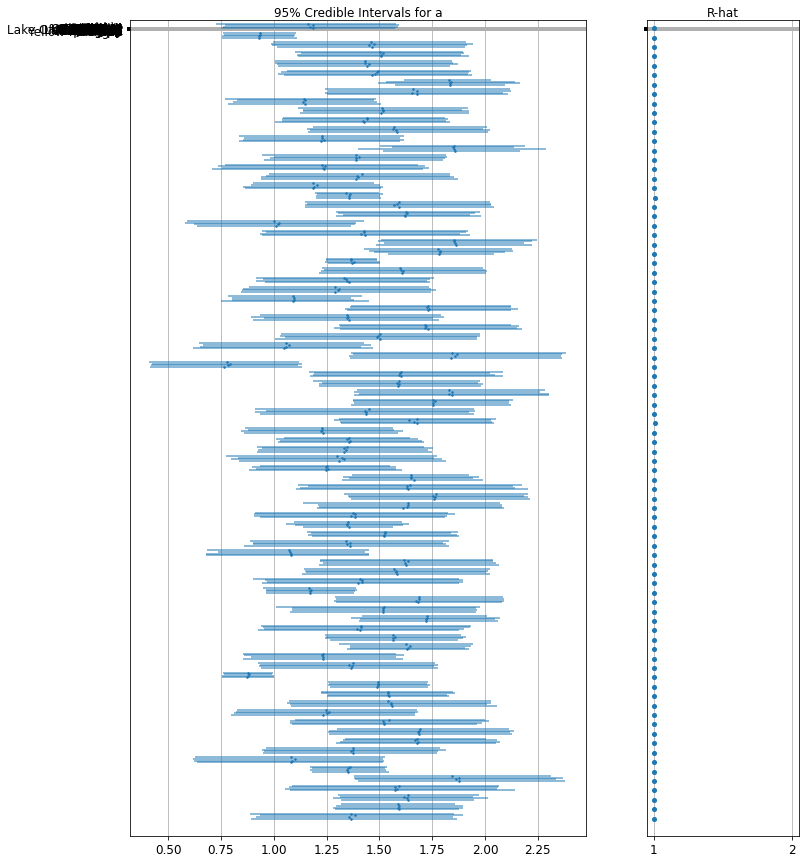

forest_plot(

num_chains=4,

num_vars=num_counties,

var_name='a',

var_labels=county_name,

samples=varying_intercepts_and_slopes_samples.a.numpy())

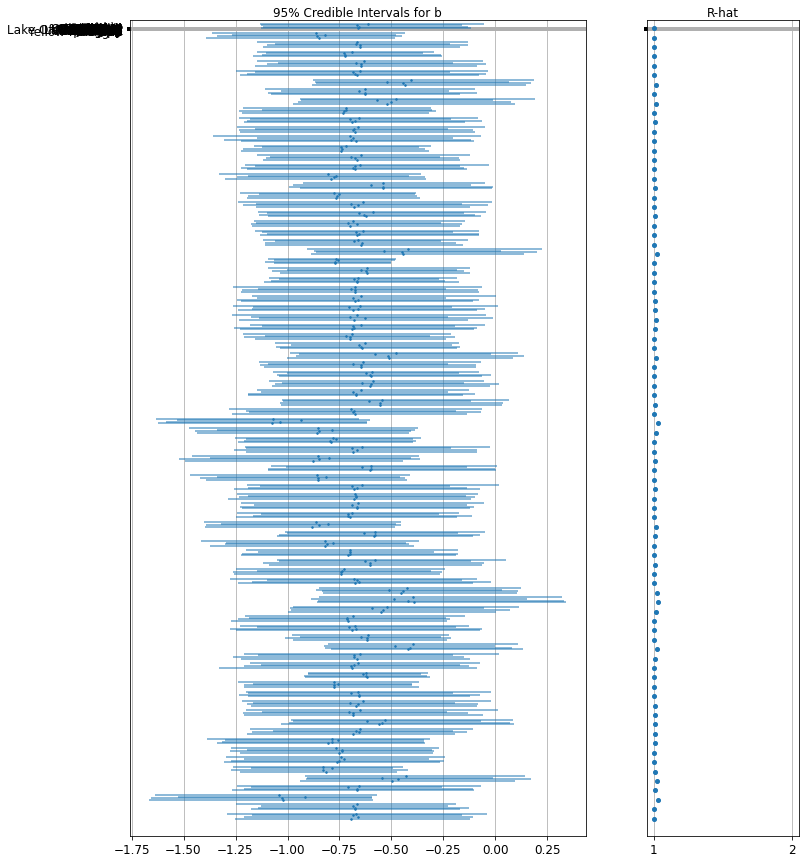

forest_plot(

num_chains=4,

num_vars=num_counties,

var_name='b',

var_labels=county_name,

samples=varying_intercepts_and_slopes_samples.b.numpy())

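6 Adding Group-level Predictors

A primary strength of multilevel models is the ability to handle predictors on multiple levels simultaneously. If we consider the varying-intercepts model above:

\[y_i = \alpha_{j[i]} + \beta x_{i} + \epsilon_i\]

we may, instead of a simple random effect to describe variation in the expected radon value, specify another regression model with a county-level covariate. Here, we use the county uranium reading \(u_j\), which is thought to be related to radon levels:

\[\alpha_j = \gamma_0 + \gamma_1 u_j + \zeta_j\]

\[\zeta_j \sim N(0, \sigma_{\alpha}^2)\]

Thus, we are now incorporating a house-level predictor (floor or basement) as well as a county-level predictor (uranium).

Note that the model has both indicator variables for each county and a county-level covariate. In classical regression, this would result in collinearity. In a multilevel model, the partial pooling of the intercepts towards the expected value of the group-level linear model avoids this.

Group-level predictors also serve to reduce the group-level variation \(\sigma_{\alpha}\). An important implication of this is that the group-level estimate induces stronger pooling.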
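To make the effect on group-level variation concrete, the sketch below simulates county intercepts from the group-level regression and compares their spread before and after removing the uranium trend. It is plain Python with made-up settings; the helper names, the evenly spaced `toy_log_uranium` values and the coefficients are all hypothetical.

```python
import random


def simulate_county_intercepts(log_uranium, gamma_0, gamma_1, sigma_alpha,
                               seed=0):
    """Draws alpha_j = gamma_0 + gamma_1 * u_j + zeta_j, zeta_j ~ N(0, sigma_alpha^2)."""
    rng = random.Random(seed)
    return [gamma_0 + gamma_1 * u + rng.gauss(0.0, sigma_alpha)
            for u in log_uranium]


def sample_variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / (len(values) - 1)


# Hypothetical settings: 85 counties with evenly spaced log-uranium values.
toy_log_uranium = [-0.8 + 1.6 * k / 84 for k in range(85)]
toy_gamma_0, toy_gamma_1, toy_sigma_alpha = 1.5, 0.7, 0.15

toy_alpha = simulate_county_intercepts(
    toy_log_uranium, toy_gamma_0, toy_gamma_1, toy_sigma_alpha)

# Spread of the intercepts around their overall mean ...
total_var = sample_variance(toy_alpha)
# ... and around the group-level regression line.
residual_var = sample_variance(
    [a - (toy_gamma_0 + toy_gamma_1 * u)
     for a, u in zip(toy_alpha, toy_log_uranium)])

print(f"variance of alpha_j: {total_var:.3f}")
print(f"variance left after the uranium trend: {residual_var:.3f}")
```

The residual spread plays the role of \(\sigma_{\alpha}^2\) in the group-level model, so a covariate that explains part of the county-to-county differences leaves less variation to be absorbed by the random effect.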

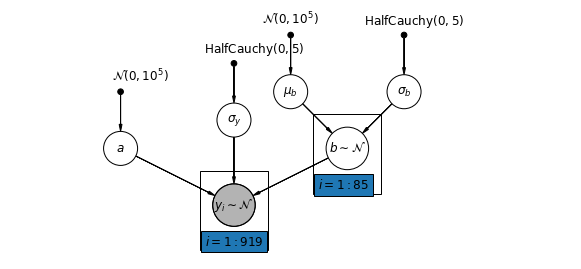

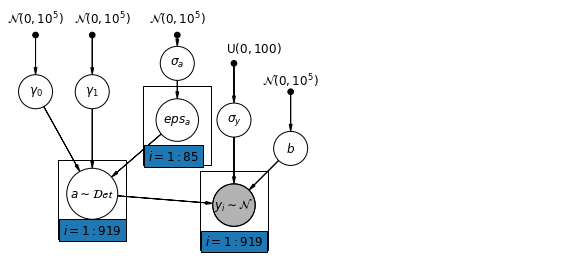

6.1 Hierarchical Intercepts Model

def hierarchical_intercepts_model(floor, county, log_uranium):
  """Creates a joint distribution for the hierarchical intercepts model."""
  return tfd.JointDistributionSequential([
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      lambda sigma_a: tfd.MultivariateNormalDiag(  # eps_a
          loc=tf.zeros([num_counties]),
          scale_identity_multiplier=sigma_a),
      tfd.Normal(loc=0., scale=1e5),  # gamma_0
      tfd.Normal(loc=0., scale=1e5),  # gamma_1
      tfd.Normal(loc=0., scale=1e5),  # b
      tfd.Uniform(low=0., high=100),  # sigma_y
      lambda sigma_y, b, gamma_1, gamma_0, eps_a: tfd.MultivariateNormalDiag(  # y
          loc=affine(
              floor, b[..., tf.newaxis],
              affine(log_uranium, gamma_1[..., tf.newaxis],
                     gamma_0[..., tf.newaxis]) + tf.gather(eps_a, county, axis=-1)),
          scale_identity_multiplier=sigma_y)
  ])

def hierarchical_intercepts_log_prob(sigma_a, eps_a, gamma_0, gamma_1, b,
                                     sigma_y):
  """Computes joint log prob pinned at `log_radon`."""
  return hierarchical_intercepts_model(floor, county, log_uranium).log_prob(
      [sigma_a, eps_a, gamma_0, gamma_1, b, sigma_y, log_radon])

@tf.function
def sample_hierarchical_intercepts(num_chains, num_results, num_burnin_steps):
  """Samples from the hierarchical intercepts model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=hierarchical_intercepts_log_prob,
      num_leapfrog_steps=10,
      step_size=0.01)

  initial_state = [
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains, num_counties], name='eps_a'),
      tf.zeros([num_chains], name='init_gamma_0'),
      tf.zeros([num_chains], name='init_gamma_1'),
      tf.zeros([num_chains], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y')
  ]
  unconstraining_bijectors = [
      tfb.Exp(),       # sigma_a
      tfb.Identity(),  # eps_a
      tfb.Identity(),  # gamma_0
      tfb.Identity(),  # gamma_1
      tfb.Identity(),  # b
      # Maps reals to [0, 100].
      tfb.Chain([tfb.Shift(shift=50.),
                 tfb.Scale(scale=50.),
                 tfb.Tanh()])  # sigma_y
  ]
  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

HierarchicalInterceptsModel = collections.namedtuple(
    'HierarchicalInterceptsModel',
    ['sigma_a', 'eps_a', 'gamma_0', 'gamma_1', 'b', 'sigma_y'])

samples, acceptance_probs = sample_hierarchical_intercepts(
    num_chains=4, num_results=2000, num_burnin_steps=500)

print('Acceptance Probabilities: ', acceptance_probs.numpy())
hierarchical_intercepts_samples = HierarchicalInterceptsModel._make(samples)

Acceptance Probabilities: [0.956 0.959 0.9675 0.958 ]
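The `sigma_y` bijector in the sampler above composes `Tanh`, `Scale(50)` and `Shift(50)`. As we understand `tfb.Chain`, it applies its list from right to left, so an unconstrained real \(x\) is mapped to \(50 \tanh(x) + 50\), which lies inside the support of the `Uniform(0, 100)` prior. A plain-Python sketch of that mapping and its inverse follows; the function names are hypothetical.

```python
import math


def constrain_sigma_y(x):
    """Maps an unconstrained real x into (0, 100) via 50 * tanh(x) + 50."""
    return 50.0 + 50.0 * math.tanh(x)


def unconstrain_sigma_y(sigma_y):
    """Inverse map: recovers x from a sigma_y strictly between 0 and 100."""
    return math.atanh((sigma_y - 50.0) / 50.0)


for x in (-3.0, 0.0, 3.0):
    print(f"x = {x:+.1f} -> sigma_y = {constrain_sigma_y(x):.3f}")

# The sampler starts sigma_y at 1, which corresponds to a negative
# value in the unconstrained space.
print("unconstrained start:", unconstrain_sigma_y(1.0))
```

The sampler proposes moves in the unconstrained space, so every proposal maps back to a valid `sigma_y` without any explicit boundary checks.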

for var in ['sigma_a', 'gamma_0', 'gamma_1', 'b', 'sigma_y']:
  print(
      'R-hat for', var, ':',
      tfp.mcmc.potential_scale_reduction(
          getattr(hierarchical_intercepts_samples, var)).numpy())

R-hat for sigma_a : 1.0204408
R-hat for gamma_0 : 1.0075455
R-hat for gamma_1 : 1.0054599
R-hat for b : 1.0011046
R-hat for sigma_y : 1.0004083

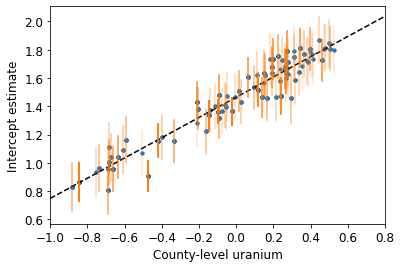

def plot_hierarchical_intercepts():
  mean_and_var = lambda x : [reduce_samples(x, fn) for fn in [np.mean, np.var]]
  gamma_0_mean, gamma_0_var = mean_and_var(
      hierarchical_intercepts_samples.gamma_0)
  gamma_1_mean, gamma_1_var = mean_and_var(
      hierarchical_intercepts_samples.gamma_1)
  eps_a_means, eps_a_vars = mean_and_var(hierarchical_intercepts_samples.eps_a)

  mu_a_means = gamma_0_mean + gamma_1_mean * log_uranium
  mu_a_vars = gamma_0_var + np.square(log_uranium) * gamma_1_var
  a_means = mu_a_means + eps_a_means[county]
  a_stds = np.sqrt(mu_a_vars + eps_a_vars[county])

  plt.figure()
  plt.scatter(log_uranium, a_means, marker='.', c='C0')
  xvals = np.linspace(-1, 0.8)
  plt.plot(xvals,gamma_0_mean + gamma_1_mean * xvals, 'k--')
  plt.xlim(-1, 0.8)

  for ui, m, se in zip(log_uranium, a_means, a_stds):
    plt.plot([ui, ui], [m - se, m + se], 'C1-', alpha=0.1)
  plt.xlabel('County-level uranium')
  plt.ylabel('Intercept estimate')

plot_hierarchical_intercepts()

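The standard errors on the intercepts are narrower than for the partial pooling model without a county-level covariate.

6.2 Correlations Among Levels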

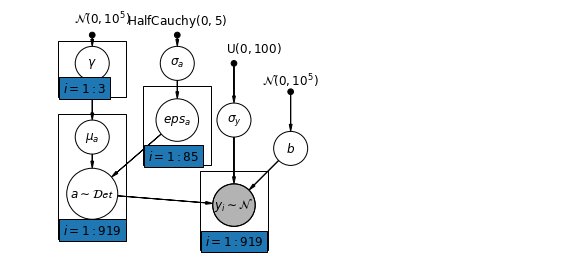

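In some instances, having predictors at multiple levels can reveal correlation between individual-level variables and group residuals. We can account for this by including the average of the individual predictors as a covariate in the model for the group intercept.

\[\alpha_j = \gamma_0 + \gamma_1 u_j + \gamma_2 \bar{x}_j + \zeta_j\]

Here \(\bar{x}_j\) is the county-level mean of the individual predictor (`floor`). Effects of this kind are broadly referred to as contextual effects.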

# Create a new variable for mean of floor across counties

xbar = tf.convert_to_tensor(radon.groupby('county')['floor'].mean(), tf.float32)

xbar = tf.gather(xbar, county, axis=-1)

def contextual_effects_model(floor, county, log_uranium, xbar):
  """Creates a joint distribution for the contextual effects model."""
  return tfd.JointDistributionSequential([
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      lambda sigma_a: tfd.MultivariateNormalDiag(  # eps_a
          loc=tf.zeros([num_counties]),
          scale_diag=sigma_a[..., tf.newaxis] * tf.ones([num_counties])),
      tfd.Normal(loc=0., scale=1e5),  # gamma_0
      tfd.Normal(loc=0., scale=1e5),  # gamma_1
      tfd.Normal(loc=0., scale=1e5),  # gamma_2
      tfd.Normal(loc=0., scale=1e5),  # b
      tfd.Uniform(low=0., high=100),  # sigma_y
      lambda sigma_y, b, gamma_2, gamma_1, gamma_0, eps_a: (
          tfd.MultivariateNormalDiag(  # y
              loc=affine(
                  floor, b[..., tf.newaxis],
                  affine(log_uranium, gamma_1[..., tf.newaxis],
                         gamma_0[..., tf.newaxis]) +
                  affine(xbar, gamma_2[..., tf.newaxis]) +
                  tf.gather(eps_a, county, axis=-1)),
              scale_diag=sigma_y[..., tf.newaxis] * tf.ones_like(xbar)))
  ])

def contextual_effects_log_prob(sigma_a, eps_a, gamma_0, gamma_1, gamma_2, b,
                                sigma_y):
  """Computes joint log prob pinned at `log_radon`."""
  return contextual_effects_model(floor, county, log_uranium, xbar).log_prob(
      [sigma_a, eps_a, gamma_0, gamma_1, gamma_2, b, sigma_y, log_radon])

@tf.function
def sample_contextual_effects(num_chains, num_results, num_burnin_steps):
  """Samples from the contextual effects model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=contextual_effects_log_prob,
      num_leapfrog_steps=10,
      step_size=0.01)

  initial_state = [
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains, num_counties], name='eps_a'),
      tf.zeros([num_chains], name='init_gamma_0'),
      tf.zeros([num_chains], name='init_gamma_1'),
      tf.zeros([num_chains], name='init_gamma_2'),
      tf.zeros([num_chains], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y')
  ]

  unconstraining_bijectors = [
      tfb.Exp(),  # sigma_a
      tfb.Identity(),  # eps_a
      tfb.Identity(),  # gamma_0
      tfb.Identity(),  # gamma_1
      tfb.Identity(),  # gamma_2
      tfb.Identity(),  # b
      # Maps reals to [0, 100].
      tfb.Chain([tfb.Shift(shift=50.),
                 tfb.Scale(scale=50.),
                 tfb.Tanh()])  # sigma_y
  ]

  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  # Fraction of accepted proposals, computed separately for each chain.
  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

ContextualEffectsModel = collections.namedtuple(

'ContextualEffectsModel',

['sigma_a', 'eps_a', 'gamma_0', 'gamma_1', 'gamma_2', 'b', 'sigma_y'])

samples, acceptance_probs = sample_contextual_effects(

num_chains=4, num_results=2000, num_burnin_steps=500)

print('Acceptance Probabilities: ', acceptance_probs.numpy())

contextual_effects_samples = ContextualEffectsModel._make(samples)

Acceptance Probabilities: [0.948 0.952 0.956 0.953]

for var in ['sigma_a', 'gamma_0', 'gamma_1', 'gamma_2', 'b', 'sigma_y']:
  print(
      'R-hat for ', var, ': ',
      tfp.mcmc.potential_scale_reduction(
          getattr(contextual_effects_samples, var)).numpy())

R-hat for sigma_a : 1.1393573
R-hat for gamma_0 : 1.0081229
R-hat for gamma_1 : 1.0007668
R-hat for gamma_2 : 1.012864
R-hat for b : 1.0019505
R-hat for sigma_y : 1.0056173

for var in ['gamma_0', 'gamma_1', 'gamma_2']:
  var_samples = getattr(contextual_effects_samples, var)
  mean = var_samples.numpy().mean()
  std = var_samples.numpy().std()
  r_hat = tfp.mcmc.potential_scale_reduction(var_samples).numpy()
  # Sum the per-chain effective sample sizes.
  n_eff = tfp.mcmc.effective_sample_size(var_samples).numpy().sum()
  print(var, ' mean: ', mean, ' std: ', std, ' n_eff: ', n_eff, ' r_hat: ',
        r_hat)

gamma_0 mean: 1.3939122 std: 0.051875897 n_eff: 572.4374 r_hat: 1.0081229
gamma_1 mean: 0.7207277 std: 0.090660274 n_eff: 727.2628 r_hat: 1.0007668
gamma_2 mean: 0.40686083 std: 0.20155264 n_eff: 381.74048 r_hat: 1.012864

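So we might infer from this that counties with higher proportions of houses without basements tend to have higher baseline levels of radon. Perhaps this is related to the soil type, which in turn might influence what type of structures are built.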

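6.3 Prediction

Gelman (2006) used cross-validation tests to check the prediction error of the unpooled, pooled, and partially pooled models.

Root mean squared cross-validation prediction errors:

- unpooled = 0.86

- pooled = 0.84

- multilevel = 0.79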
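To make the metric above concrete, here is a minimal sketch of a k-fold root mean squared cross-validation error in plain Python. Everything in it is an assumption for illustration: the data are synthetic, the helpers `cv_rmse`, `pooled_predict`, and `unpooled_predict` are hypothetical, and the resulting numbers have no relation to the values reported by Gelman (2006).

```python
import math
import random


def cv_rmse(data, predict_fn, num_folds=5):
  """Root mean squared cross-validation error over (group, y) pairs."""
  squared_errors = []
  for fold in range(num_folds):
    train = [d for i, d in enumerate(data) if i % num_folds != fold]
    test = [d for i, d in enumerate(data) if i % num_folds == fold]
    for group, y in test:
      squared_errors.append((y - predict_fn(train, group)) ** 2)
  return math.sqrt(sum(squared_errors) / len(squared_errors))


def pooled_predict(train, group):
  """Complete pooling: predict the overall training mean, ignoring group."""
  return sum(y for _, y in train) / len(train)


def unpooled_predict(train, group):
  """No pooling: predict the training mean of the same group."""
  ys = [y for g, y in train if g == group]
  # Fall back to the overall mean if the group is absent from the fold.
  return sum(ys) / len(ys) if ys else pooled_predict(train, group)


rng = random.Random(0)
# Synthetic data: 10 groups with fixed offsets and 20 noisy observations each.
group_effects = [0.3 * (g - 4.5) for g in range(10)]
data = [(g, 1.3 + group_effects[g] + rng.gauss(0.0, 0.5))
        for g in range(10) for _ in range(20)]
rng.shuffle(data)

pooled_rmse = cv_rmse(data, pooled_predict)
unpooled_rmse = cv_rmse(data, unpooled_predict)
print('pooled RMSE:', pooled_rmse)
print('unpooled RMSE:', unpooled_rmse)
```

In this synthetic setup every group has plenty of data, so the unpooled predictor does well. With many small groups, as in the radon data, unpooled estimates become noisy, which is the situation where partial pooling can help.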

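Two types of prediction can be made in a multilevel model:

- A new individual within an existing group

- A new individual within a new group

For example, to make a prediction for a new house with no basement in St. Louis county, we just need to sample from the radon model with the appropriate intercept.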

county_name.index('St Louis')

69

That is,

\[\tilde{y}_i \sim N(\alpha_{69} + \beta (x_i=1), \sigma_y^2)\]
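The predictive distribution above can also be approximated directly from posterior draws: pair each draw of the parameters with one draw of the new observation. The sketch below is a minimal illustration in plain Python. The "posterior draws" are hypothetical stand-ins generated from fixed normal distributions, not output from this notebook's sampler.

```python
import random
import statistics

rng = random.Random(42)
num_draws = 4000

# Hypothetical stand-ins for posterior draws of the county intercept
# (alpha_69), the floor coefficient (beta), and the observation scale.
alpha_draws = [rng.gauss(1.5, 0.1) for _ in range(num_draws)]
beta_draws = [rng.gauss(-0.7, 0.07) for _ in range(num_draws)]
sigma_y_draws = [abs(rng.gauss(0.75, 0.02)) for _ in range(num_draws)]

floor_indicator = 1.0  # x_i = 1: the measurement is taken on the first floor.

# One predictive draw per parameter draw, so parameter uncertainty is
# carried into the prediction.
y_tilde = [
    rng.gauss(a + b * floor_indicator, s)
    for a, b, s in zip(alpha_draws, beta_draws, sigma_y_draws)
]

pred_mean = statistics.fmean(y_tilde)
pred_std = statistics.stdev(y_tilde)
print('predictive mean:', pred_mean)
print('predictive std:', pred_std)
```

For a new individual in a new group, the same idea applies, except that the county deviation is not known and would be drawn afresh from its group-level distribution for each parameter draw.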

st_louis_log_uranium = tf.convert_to_tensor(

radon.where(radon['county'] == 69)['log_uranium_ppm'].mean(), tf.float32)

st_louis_xbar = tf.convert_to_tensor(

radon.where(radon['county'] == 69)['floor'].mean(), tf.float32)

@tf.function
def intercept_a(gamma_0, gamma_1, gamma_2, eps_a, log_uranium, xbar, county):
  return (affine(log_uranium, gamma_1, gamma_0) + affine(xbar, gamma_2) +
          tf.gather(eps_a, county, axis=-1))

def contextual_effects_predictive_model(floor, county, log_uranium, xbar,
                                        st_louis_log_uranium, st_louis_xbar):
  """Creates a joint distribution for the contextual effects model."""
  return tfd.JointDistributionSequential([
      tfd.HalfCauchy(loc=0., scale=5),  # sigma_a
      lambda sigma_a: tfd.MultivariateNormalDiag(  # eps_a
          loc=tf.zeros([num_counties]),
          scale_diag=sigma_a[..., tf.newaxis] * tf.ones([num_counties])),
      tfd.Normal(loc=0., scale=1e5),  # gamma_0
      tfd.Normal(loc=0., scale=1e5),  # gamma_1
      tfd.Normal(loc=0., scale=1e5),  # gamma_2
      tfd.Normal(loc=0., scale=1e5),  # b
      tfd.Uniform(low=0., high=100),  # sigma_y
      # y
      lambda sigma_y, b, gamma_2, gamma_1, gamma_0, eps_a: (
          tfd.MultivariateNormalDiag(
              loc=affine(
                  floor, b[..., tf.newaxis],
                  intercept_a(gamma_0[..., tf.newaxis],
                              gamma_1[..., tf.newaxis],
                              gamma_2[..., tf.newaxis],
                              eps_a, log_uranium, xbar, county)),
              scale_diag=sigma_y[..., tf.newaxis] * tf.ones_like(xbar))),
      # stl_pred: a new first-floor measurement in St. Louis county (index 69).
      lambda _, sigma_y, b, gamma_2, gamma_1, gamma_0, eps_a: tfd.Normal(
          loc=intercept_a(gamma_0, gamma_1, gamma_2, eps_a,
                          st_louis_log_uranium, st_louis_xbar, 69) + b,
          scale=sigma_y)
  ])

@tf.function
def contextual_effects_predictive_log_prob(sigma_a, eps_a, gamma_0, gamma_1,
                                           gamma_2, b, sigma_y, stl_pred):
  """Computes joint log prob pinned at `log_radon`."""
  return contextual_effects_predictive_model(
      floor, county, log_uranium, xbar, st_louis_log_uranium,
      st_louis_xbar).log_prob([
          sigma_a, eps_a, gamma_0, gamma_1, gamma_2, b, sigma_y, log_radon,
          stl_pred
      ])

@tf.function
def sample_contextual_effects_predictive(num_chains, num_results,
                                         num_burnin_steps):
  """Samples from the contextual effects predictive model."""
  hmc = tfp.mcmc.HamiltonianMonteCarlo(
      target_log_prob_fn=contextual_effects_predictive_log_prob,
      num_leapfrog_steps=50,
      step_size=0.01)

  initial_state = [
      tf.ones([num_chains], name='init_sigma_a'),
      tf.zeros([num_chains, num_counties], name='eps_a'),
      tf.zeros([num_chains], name='init_gamma_0'),
      tf.zeros([num_chains], name='init_gamma_1'),
      tf.zeros([num_chains], name='init_gamma_2'),
      tf.zeros([num_chains], name='init_b'),
      tf.ones([num_chains], name='init_sigma_y'),
      tf.zeros([num_chains], name='init_stl_pred')
  ]

  unconstraining_bijectors = [
      tfb.Exp(),  # sigma_a
      tfb.Identity(),  # eps_a
      tfb.Identity(),  # gamma_0
      tfb.Identity(),  # gamma_1
      tfb.Identity(),  # gamma_2
      tfb.Identity(),  # b
      # Maps reals to [0, 100].
      tfb.Chain([tfb.Shift(shift=50.),
                 tfb.Scale(scale=50.),
                 tfb.Tanh()]),  # sigma_y
      tfb.Identity(),  # stl_pred
  ]

  kernel = tfp.mcmc.TransformedTransitionKernel(
      inner_kernel=hmc, bijector=unconstraining_bijectors)

  samples, kernel_results = tfp.mcmc.sample_chain(
      num_results=num_results,
      num_burnin_steps=num_burnin_steps,
      current_state=initial_state,
      kernel=kernel)

  # Fraction of accepted proposals, computed separately for each chain.
  acceptance_probs = tf.reduce_mean(
      tf.cast(kernel_results.inner_results.is_accepted, tf.float32), axis=0)

  return samples, acceptance_probs

ContextualEffectsPredictiveModel = collections.namedtuple(

'ContextualEffectsPredictiveModel', [

'sigma_a', 'eps_a', 'gamma_0', 'gamma_1', 'gamma_2', 'b', 'sigma_y',

'stl_pred'

])

samples, acceptance_probs = sample_contextual_effects_predictive(

num_chains=4, num_results=2000, num_burnin_steps=500)

print('Acceptance Probabilities: ', acceptance_probs.numpy())

contextual_effects_pred_samples = ContextualEffectsPredictiveModel._make(

samples)

Acceptance Probabilities: [0.981 0.9795 0.972 0.9705]

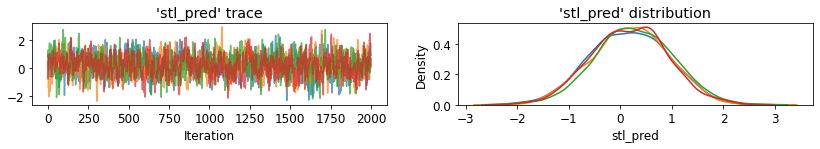

for var in [
    'sigma_a', 'gamma_0', 'gamma_1', 'gamma_2', 'b', 'sigma_y', 'stl_pred'
]:
  print(
      'R-hat for ', var, ': ',
      tfp.mcmc.potential_scale_reduction(
          getattr(contextual_effects_pred_samples, var)).numpy())

R-hat for sigma_a : 1.0053602
R-hat for gamma_0 : 1.0008001
R-hat for gamma_1 : 1.0015156
R-hat for gamma_2 : 0.99972683
R-hat for b : 1.0045198
R-hat for sigma_y : 1.0114483
R-hat for stl_pred : 1.0045049

plot_traces('stl_pred', contextual_effects_pred_samples.stl_pred, num_chains=4)

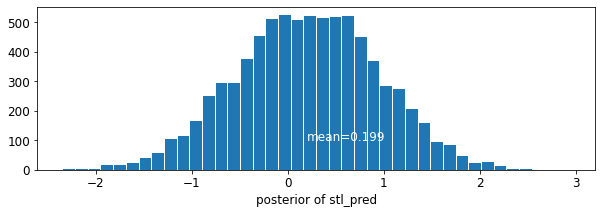

plot_posterior('stl_pred', contextual_effects_pred_samples.stl_pred)

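7 Conclusions

Benefits of multilevel models:

- Accounting for the natural hierarchical structure of observational data.

- Estimation of coefficients for (under-represented) groups.

- Incorporating individual- and group-level information when estimating group-level coefficients.

- Allowing for variation among individual-level coefficients across groups.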
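The benefit for under-represented groups can be illustrated with the precision-weighted approximation to the multilevel estimate of a group intercept in a model without predictors, as described by Gelman and Hill (2006). The sketch below is a simplified illustration, not the model fitted in this notebook; the function name `partial_pooling_estimate` and all input numbers are hypothetical.

```python
def partial_pooling_estimate(group_mean, n_group, overall_mean, sigma_y,
                             sigma_a):
  """Precision-weighted average of a group mean and the overall mean."""
  weight_group = n_group / sigma_y**2  # Precision of the group's own mean.
  weight_overall = 1.0 / sigma_a**2    # Precision of the group-level prior.
  return ((weight_group * group_mean + weight_overall * overall_mean) /
          (weight_group + weight_overall))


# Same observed group mean, but very different amounts of data.
small_group = partial_pooling_estimate(
    group_mean=2.0, n_group=2, overall_mean=1.3, sigma_y=0.75, sigma_a=0.3)
large_group = partial_pooling_estimate(
    group_mean=2.0, n_group=100, overall_mean=1.3, sigma_y=0.75, sigma_a=0.3)
print('estimate with 2 observations:', small_group)
print('estimate with 100 observations:', large_group)
```

A group with few observations is pulled strongly toward the overall mean, while a group with many observations stays close to its own mean.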

References

Gelman, A., & Hill, J. (2006). Data Analysis Using Regression and Multilevel/Hierarchical Models (1st ed.). Cambridge University Press.

Gelman, A. (2006). Multilevel (Hierarchical) modeling: what it can and cannot do. Technometrics, 48(3), 432–435.