TensorFlow Hub (TF-Hub) is a platform for sharing machine learning expertise packaged in reusable resources, notably pre-trained modules.

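In this Colab, we use a module that packages the DELF neural network together with the logic for processing images to identify keypoints and their descriptors. The weights of the neural network were trained on images of landmarks, as described in the paper that introduced DELF.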

Setup

pip install scikit-image

from absl import logging
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image, ImageOps
from scipy.spatial import cKDTree
from skimage.feature import plot_matches
from skimage.measure import ransac
from skimage.transform import AffineTransform
# On Python 3 the standard library provides these two names directly,
# so the six compatibility shim is not needed.
from io import BytesIO
import tensorflow as tf
import tensorflow_hub as hub
from urllib.request import urlopen

Data

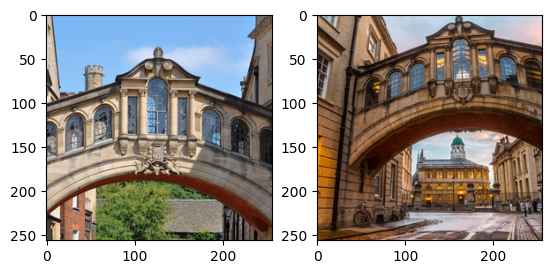

In the next cell, we specify the URLs of two images that we will process with DELF in order to match and compare them.

Choose images
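The cell that sets the two URLs is collapsed in this view. Judging from the download log printed further down, it presumably amounts to the assignments below. The variable names come from the calls to `download_and_resize`, and the URLs are copied from that log; whether the hidden cell also offers other image pairs is not visible here.

```python
from urllib.parse import unquote

# URLs copied from the download log shown later on this page (the content of
# the hidden cell itself is an assumption).
IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/2/28/Bridge_of_Sighs%2C_Oxford.jpg'
IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/c/c3/The_Bridge_of_Sighs_and_Sheldonian_Theatre%2C_Oxford.jpg'

# download_and_resize names the cached file after the last path component of
# the URL; it is percent-decoded here only to make it readable.
for url in (IMAGE_1_URL, IMAGE_2_URL):
    print(unquote(url.split('/')[-1]))
```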

Download, resize, save, and display the images.

def download_and_resize(name, url, new_width=256, new_height=256):
    # `name` is unused: the download is cached under the URL's last path component.
    path = tf.keras.utils.get_file(url.split('/')[-1], url)
    image = Image.open(path)
    # Crop to the target aspect ratio, then resize with the Lanczos filter.
    image = ImageOps.fit(image, (new_width, new_height), Image.LANCZOS)
    return image

image1 = download_and_resize('image_1.jpg', IMAGE_1_URL)
image2 = download_and_resize('image_2.jpg', IMAGE_2_URL)

# Show the two resized images side by side.
plt.subplot(1,2,1)
plt.imshow(image1)
plt.subplot(1,2,2)
plt.imshow(image2)

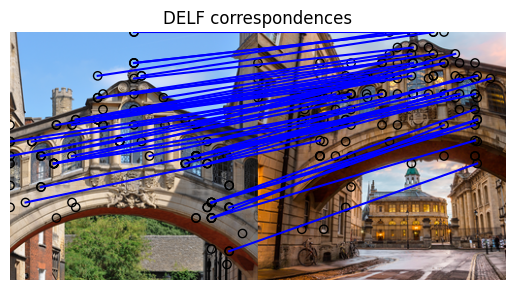

Downloading data from https://upload.wikimedia.org/wikipedia/commons/2/28/Bridge_of_Sighs%2C_Oxford.jpg
7013850/7013850 ━━━━━━━━━━━━━━━━━━━━ 0s 0us/step
Downloading data from https://upload.wikimedia.org/wikipedia/commons/c/c3/The_Bridge_of_Sighs_and_Sheldonian_Theatre%2C_Oxford.jpg
14164194/14164194 ━━━━━━━━━━━━━━━━━━━━ 1s 0us/step
<matplotlib.image.AxesImage at 0x7f496c454c40>
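Note that `ImageOps.fit` does not squash the image into 256x256: it first crops the largest centered region with the requested aspect ratio and only then resizes it, so content near the edges of a non-square photo is discarded. The helper below, `center_crop_box`, is a hypothetical name used only to illustrate that arithmetic; it is not Pillow's implementation, and its rounding may differ from Pillow's by a pixel.

```python
def center_crop_box(width, height, new_width=256, new_height=256):
    """Return (left, top, right, bottom) of the largest centered region of a
    width x height image whose aspect ratio matches new_width / new_height."""
    target_ratio = new_width / new_height
    if width / height > target_ratio:
        # Image is too wide: keep the full height and trim the sides.
        crop_w, crop_h = round(height * target_ratio), height
    else:
        # Image is too tall (or already matches): keep the full width.
        crop_w, crop_h = width, round(width / target_ratio)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


print(center_crop_box(400, 300))  # landscape input
print(center_crop_box(300, 500))  # portrait input
```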

Apply the DELF module to the data

The DELF module takes an image as input and describes noteworthy points with vectors. The following cell contains the core of this Colab's logic.

delf = hub.load('https://tfhub.dev/google/delf/1').signatures['default']

2024-03-09 14:59:22.756986: E external/local_xla/xla/stream_executor/cuda/cuda_driver.cc:282] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

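def run_delf(image):
    # Convert the PIL image to a float32 tensor with values scaled to [0, 1].
    np_image = np.array(image)
    float_image = tf.image.convert_image_dtype(np_image, tf.float32)

    return delf(
        image=float_image,
        # Score threshold for keeping a feature.
        score_threshold=tf.constant(100.0),
        # Scales of the image pyramid on which features are extracted.
        image_scales=tf.constant([0.25, 0.3536, 0.5, 0.7071, 1.0, 1.4142, 2.0]),
        # Upper bound on the number of features returned per image.
        max_feature_num=tf.constant(1000))

result1 = run_delf(image1)
result2 = run_delf(image2)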
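The values passed as `image_scales` are not arbitrary: they are consecutive powers of √2, from 2^-2 to 2^1, rounded to four decimals, so each pyramid level is about 1.41 times larger than the previous one. A quick check of that observation:

```python
# Exponents -4..2 in half-steps give 2**-2 ... 2**1, i.e. 0.25 ... 2.0.
image_scales = [round(2 ** (k / 2), 4) for k in range(-4, 3)]
print(image_scales)
```

Generating the list this way makes it easier to experiment with a wider or narrower range of scales than typing the constants by hand.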

Use the locations and description vectors to match the images

TensorFlow is not needed for this post-processing and visualization
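The body of `match_images` is collapsed in this view. The `cKDTree` import at the top suggests that its first step pairs descriptors by nearest-neighbor search, and a minimal sketch of that step on synthetic data follows. The function name `putative_matches`, the 0.8 distance threshold, the 40-dimensional synthetic descriptors, and the `'locations'` / `'descriptors'` keys are assumptions, not taken from the text above.

```python
import numpy as np
from scipy.spatial import cKDTree


def putative_matches(result1, result2, distance_threshold=0.8):
    """Pair each feature of image 2 with its nearest descriptor in image 1."""
    desc1 = np.asarray(result1['descriptors'])
    desc2 = np.asarray(result2['descriptors'])
    loc1 = np.asarray(result1['locations'])
    loc2 = np.asarray(result2['locations'])

    tree = cKDTree(desc1)
    # With distance_upper_bound set, a query that has no neighbor in range
    # returns the index len(desc1), which marks it as unmatched.
    _, indices = tree.query(desc2, distance_upper_bound=distance_threshold)
    matched = indices != len(desc1)
    return loc1[indices[matched]], loc2[matched]


# Synthetic stand-in for two DELF outputs: 5 shared features, 3 unrelated ones.
rng = np.random.default_rng(0)
shared = rng.normal(size=(5, 40))
result1 = {'descriptors': shared,
           'locations': rng.uniform(0, 256, size=(5, 2))}
result2 = {'descriptors': np.vstack([shared + 0.01,
                                     rng.normal(size=(3, 40)) + 10.0]),
           'locations': rng.uniform(0, 256, size=(8, 2))}

loc1, loc2 = putative_matches(result1, result2)
print(len(loc1), 'putative matches')
```

The two returned arrays are aligned row by row, which is the form a geometric verification step expects.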

match_images(image1, image2, result1, result2)

Loaded image 1's 233 features
Loaded image 2's 262 features
Found 50 inliers
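The inlier count in the output suggests that candidate matches are filtered by geometric verification: only correspondences consistent with a single transformation between the two images are kept. Given the `ransac` and `AffineTransform` imports, a plausible form of that step is sketched below on synthetic correspondences. The parameter values (`min_samples=3`, `residual_threshold=20`, `max_trials=1000`) and the synthetic data are assumptions, not values stated in the text.

```python
import numpy as np
from skimage.measure import ransac
from skimage.transform import AffineTransform

rng = np.random.default_rng(0)

# 30 correct correspondences related by a known affine map ...
src_good = rng.uniform(0, 256, size=(30, 2))
linear = np.array([[1.1, -0.1],
                   [0.1, 0.9]])
shift = np.array([5.0, -8.0])
dst_good = src_good @ linear.T + shift

# ... plus 10 random, mostly inconsistent ones.
src_bad = rng.uniform(0, 256, size=(10, 2))
dst_bad = rng.uniform(0, 256, size=(10, 2))

src = np.vstack([src_good, src_bad])
dst = np.vstack([dst_good, dst_bad])

# Fit an affine model to random minimal samples (3 pairs) and keep the model
# that explains the most correspondences within the residual threshold.
model, inliers = ransac((src, dst), AffineTransform,
                        min_samples=3, residual_threshold=20, max_trials=1000)
print('Found %d inliers' % inliers.sum())
```

`inliers` is a boolean mask over the input correspondences, so it can be used directly to select the matches to draw.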