
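Noise is present in modern quantum computers. Qubits are susceptible to interference from the surrounding environment, imperfect fabrication, two-level systems (TLS) and sometimes even gamma rays. Until large-scale error correction is achieved, today's algorithms must be able to remain functional in the presence of noise. This makes testing algorithms under noise an important step in validating whether quantum algorithms and models will work on today's quantum computers.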

In this tutorial you will explore the basics of noisy circuit simulation in TFQ through the high-level tfq.layers API.

Setup

pip install tensorflow==2.15.0 tensorflow-quantum==0.7.3

pip install -q git+https://github.com/tensorflow/docs

# Update package resources to account for version changes.

import importlib, pkg_resources

importlib.reload(pkg_resources)


import random

import cirq

import sympy

import tensorflow_quantum as tfq

import tensorflow as tf

import numpy as np

# Plotting

import matplotlib.pyplot as plt

import tensorflow_docs as tfdocs

import tensorflow_docs.plots


1. Understanding quantum noise

1.1 Basic circuit noise

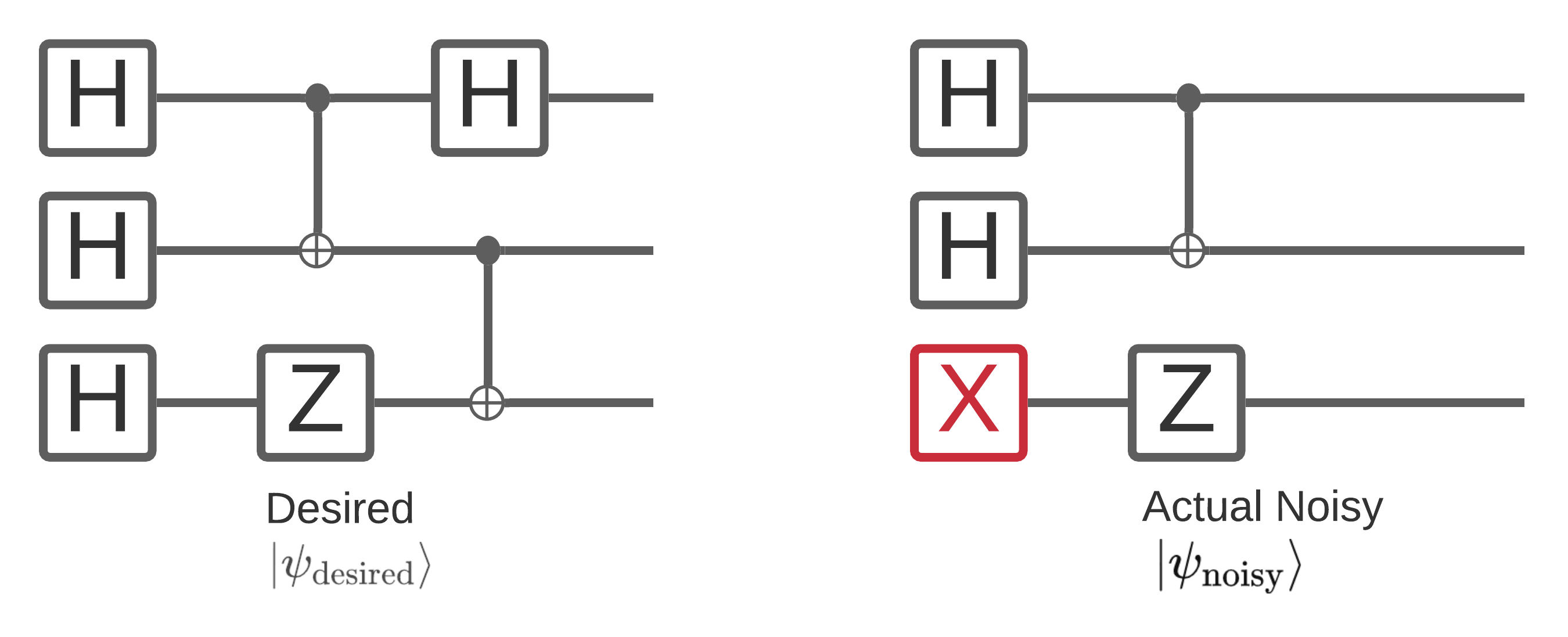

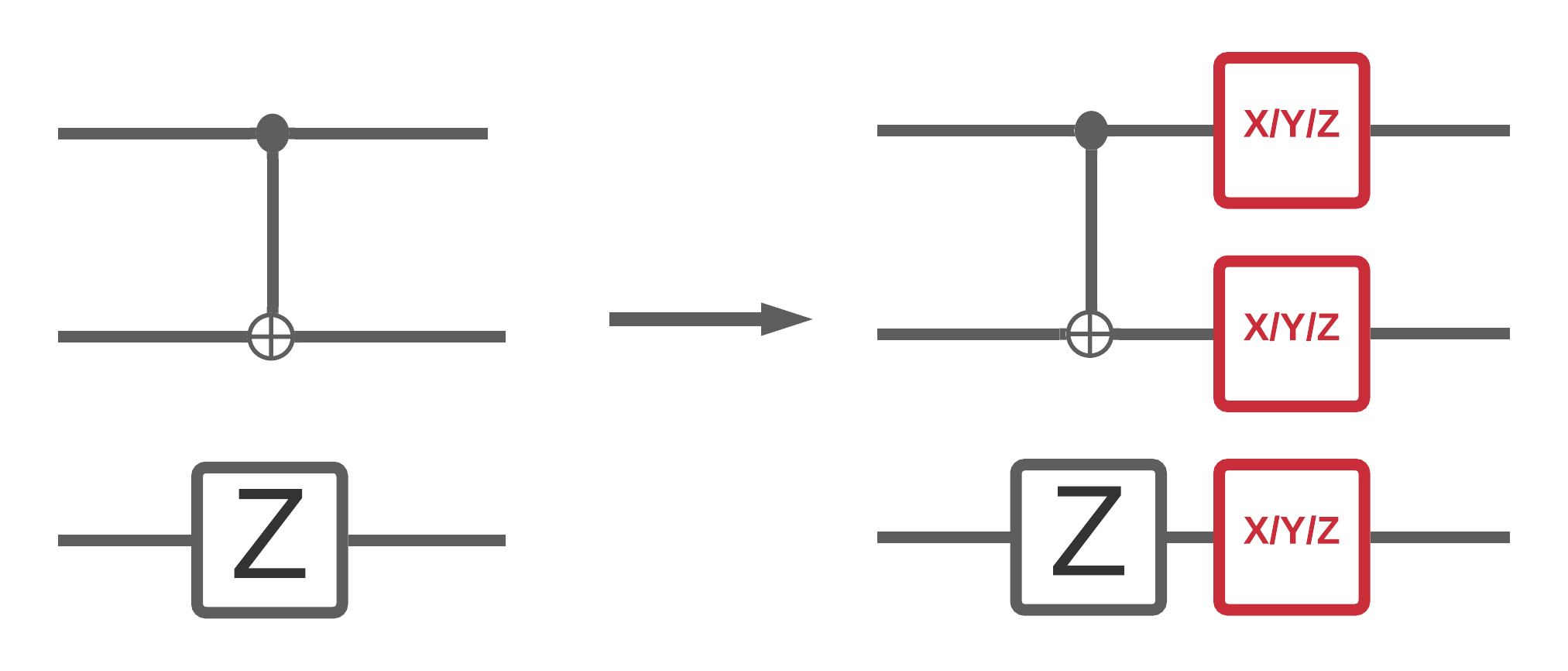

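Noise on a quantum computer affects the bitstring samples you are able to measure from it. One intuitive way to think about this is that a noisy quantum computer will "insert", "delete" or "replace" gates in random places, as illustrated in the accompanying diagram.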

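Building on this intuition, when dealing with noise you are no longer working with a single pure state \(|\psi \rangle\) but with an ensemble of all possible noisy realizations of your desired circuit: \(\rho = \sum_j p_j |\psi_j \rangle \langle \psi_j |\), where \(p_j\) gives the probability that the system is in \(|\psi_j \rangle\).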

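Revisiting the picture above, if we knew beforehand that our system executed perfectly 90% of the time and errored the other 10% of the time, with just this one mode of failure, then our ensemble would be: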

\(\rho = 0.9 |\psi_\text{desired} \rangle \langle \psi_\text{desired}| + 0.1 |\psi_\text{noisy} \rangle \langle \psi_\text{noisy}| \)

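If there were more than one way for the circuit to error, the ensemble \(\rho\) would contain more than two terms, one for each distinct noisy realization that could occur. \(\rho\) is called the density matrix describing your noisy system.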
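The following NumPy sketch builds the ensemble above for two qubits. For illustration it assumes the desired state is \(|11\rangle\) and that the single failure mode leaves the system in \(|01\rangle\). Both choices are hypothetical and do not come from the figure. The code then checks the defining properties of a density matrix and reads the sampling probabilities off its diagonal.

```python
import numpy as np

def ket(bits):
    """Computational-basis state vector for a bitstring such as '11' (qubit 0 is the leftmost bit)."""
    vec = np.zeros(2 ** len(bits), dtype=complex)
    vec[int(bits, 2)] = 1.0
    return vec

def density_matrix(ensemble):
    """Build rho = sum_j p_j |psi_j><psi_j| from a list of (probability, state vector) pairs."""
    return sum(p * np.outer(psi, psi.conj()) for p, psi in ensemble)

# Hypothetical ensemble: 90% the desired |11>, 10% a single failure mode ending in |01>.
rho = density_matrix([(0.9, ket('11')), (0.1, ket('01'))])

assert np.isclose(np.trace(rho).real, 1.0)  # the probabilities p_j sum to one
assert np.allclose(rho, rho.conj().T)       # rho is Hermitian
sampling_probs = np.real(np.diag(rho))      # P(00), P(01), P(10), P(11)
print(np.round(sampling_probs, 3))
```

The off-diagonal entries are zero here because the two pure states are orthogonal basis states. The diagonal alone then gives the probability of each measured bitstring.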

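1.2 Using channels to model circuit noise

Unfortunately, in practice it is nearly impossible to know all the ways your circuit might error and their exact probabilities. A simplifying assumption you can make is that after each operation in your circuit there is some kind of channel that roughly captures how that operation might error. You can quickly create a circuit with some noise: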

def x_circuit(qubits):
    """Produces an X wall circuit on `qubits`."""
    return cirq.Circuit(cirq.X.on_each(*qubits))

def make_noisy(circuit, p):
    """Add a depolarization channel to all qubits in `circuit` before measurement."""
    return circuit + cirq.Circuit(cirq.depolarize(p).on_each(*circuit.all_qubits()))

my_qubits = cirq.GridQubit.rect(1, 2)
my_circuit = x_circuit(my_qubits)
my_noisy_circuit = make_noisy(my_circuit, 0.5)
my_circuit

my_noisy_circuit

You can examine the noiseless density matrix \(\rho\) with:

rho = cirq.final_density_matrix(my_circuit)

np.round(rho, 3)

array([[0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 1.+0.j]], dtype=complex64)

And the noisy density matrix \(\rho\) with:

rho = cirq.final_density_matrix(my_noisy_circuit)

np.round(rho, 3)

array([[0.111+0.j, 0. +0.j, 0. +0.j, 0. +0.j],
       [0. +0.j, 0.222+0.j, 0. +0.j, 0. +0.j],
       [0. +0.j, 0. +0.j, 0.222+0.j, 0. +0.j],
       [0. +0.j, 0. +0.j, 0. +0.j, 0.444+0.j]], dtype=complex64)

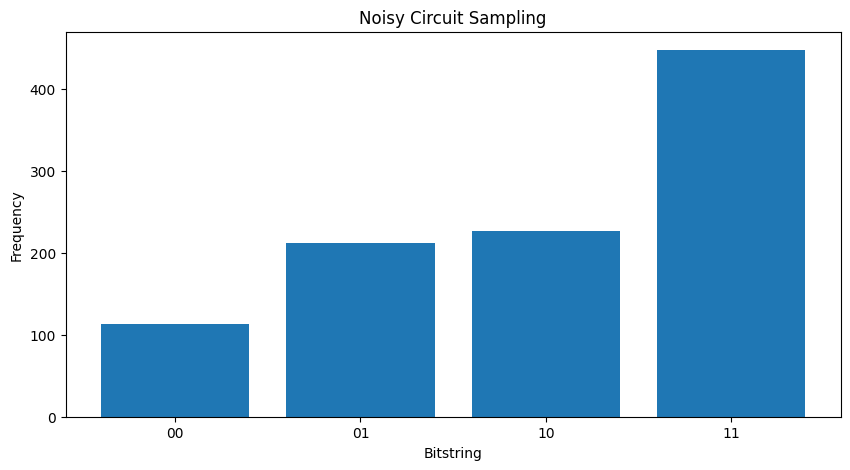

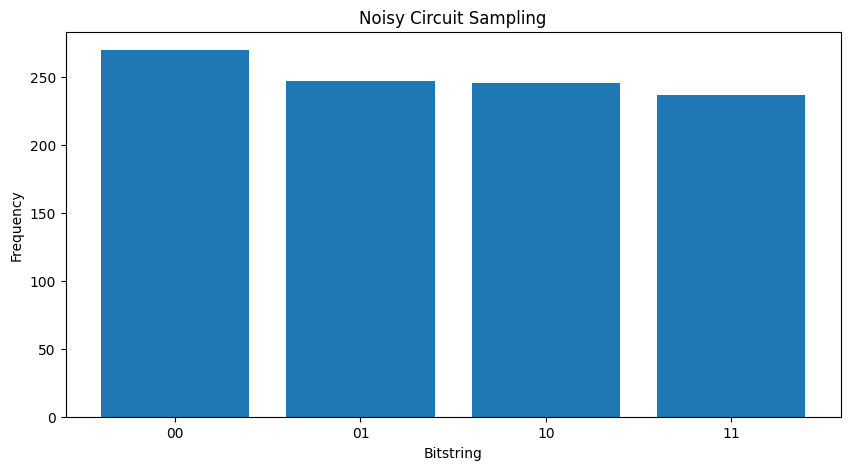

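Comparing the two \( \rho \) matrices, you can see that the noise has changed the state's populations (the diagonal entries of \( \rho \)) and, with them, the sampling probabilities. In the noiseless case you would always expect to sample the \( |11\rangle \) state. In the noisy case there is now also a nonzero probability of sampling \( |00\rangle \), \( |01\rangle \) or \( |10\rangle \):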
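You can reproduce the noisy diagonal by hand. This sketch assumes the standard single-qubit depolarizing channel, which is how Cirq defines `cirq.depolarize(p)`: it applies X, Y or Z with probability p/3 each and otherwise leaves the qubit alone. The code applies that channel independently to each qubit of \(|11\rangle\) using plain NumPy (no Cirq), so it is only a cross-check and does not follow the simulator's own code path.

```python
import numpy as np
from functools import reduce

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def depolarize(rho, p):
    """Single-qubit depolarizing channel: (1 - p) rho + p/3 (X rho X + Y rho Y + Z rho Z)."""
    return (1 - p) * rho + (p / 3) * sum(P @ rho @ P for P in (X, Y, Z))

def noisy_x_wall(n_qubits, p):
    """Density matrix of an X wall on |0...0> followed by depolarize(p) on every qubit."""
    one = np.array([[0, 0], [0, 1]], dtype=complex)  # |1><1|, the ideal state of each qubit
    # The noise acts independently on each qubit, so the full state is a tensor product.
    return reduce(np.kron, [depolarize(one, p)] * n_qubits)

rho = noisy_x_wall(2, 0.5)
print(np.round(np.real(np.diag(rho)), 3))
```

The result matches the 0.111 / 0.222 / 0.222 / 0.444 diagonal printed above. Each qubit stays in \(|1\rangle\) with probability 1 - 2p/3 = 2/3 (identity or Z) and flips to \(|0\rangle\) with probability 2p/3 = 1/3 (X or Y), and the two-qubit probabilities are the products of these.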

"""Sample from my_noisy_circuit."""

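def plot_samples(circuit, title='Circuit Sampling'):
    """Sample `circuit` 1000 times and plot a histogram of the measured bitstrings."""
    # Sort the qubits so the bit order is deterministic (all_qubits() returns an unordered set).
    qubits = sorted(circuit.all_qubits())
    n = len(qubits)
    samples = cirq.sample(circuit + cirq.measure(*qubits, key='bits'), repetitions=1000)
    # Each entry of samples.data['bits'] is a measured bitstring as an integer in [0, 2**n).
    freqs, _ = np.histogram(samples.data['bits'], bins=[i + 0.01 for i in range(-1, 2**n)])
    plt.figure(figsize=(10, 5))
    plt.title(title)
    plt.xlabel('Bitstring')
    plt.ylabel('Frequency')
    plt.bar(range(2**n), freqs, tick_label=[format(i, f'0{n}b') for i in range(2**n)])

plot_samples(my_noisy_circuit)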

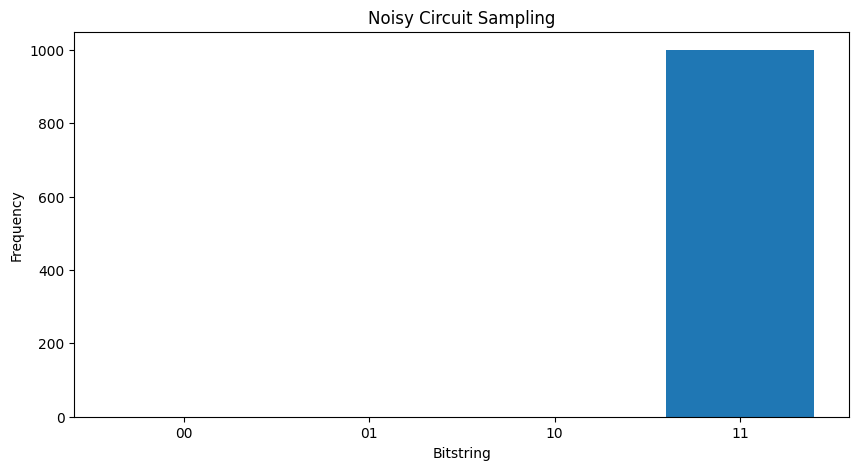

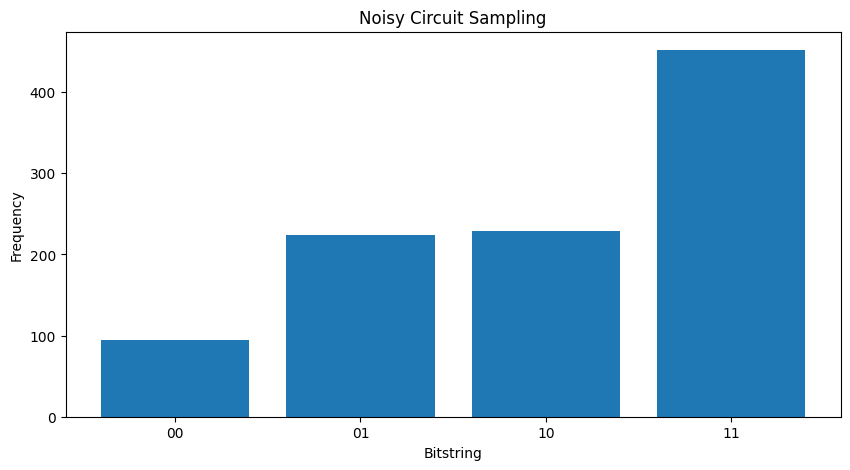

Without any noise you will always get \(|11\rangle\):

"""Sample from my_circuit."""

plot_samples(my_circuit)

If you increase the noise even more, it becomes harder and harder to distinguish the desired behavior (sampling \(|11\rangle\)) from the noise:

my_really_noisy_circuit = make_noisy(my_circuit, 0.75)

plot_samples(my_really_noisy_circuit)
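The same per-qubit reasoning explains why the p = 0.75 histogram looks flat. Under depolarize(p), each qubit keeps its ideal value with probability 1 - 2p/3, so for this two-qubit X wall the chance of sampling \(|11\rangle\) is \((1 - 2p/3)^2\). The short sketch below tabulates that closed form. The formula is derived here for this specific circuit; it does not come from TFQ or Cirq.

```python
def x_wall_distribution(p, n_qubits=2):
    """Exact bitstring probabilities for an X wall followed by depolarize(p) on each qubit.

    Each qubit independently reads 1 with probability 1 - 2p/3 and 0 with probability 2p/3.
    Returns a dict mapping bitstrings (qubit 0 leftmost) to probabilities.
    """
    keep = 1 - 2 * p / 3
    probs = {}
    for value in range(2 ** n_qubits):
        bits = format(value, f'0{n_qubits}b')
        prob = 1.0
        for b in bits:
            prob *= keep if b == '1' else 1 - keep
        probs[bits] = prob
    return probs

for p in (0.0, 0.25, 0.5, 0.75):
    dist = x_wall_distribution(p)
    print(p, {k: round(v, 3) for k, v in dist.items()})
```

At p = 0.75 the factor 1 - 2p/3 equals 1/2, so every qubit ends in the maximally mixed state. All four bitstrings are then equally likely, and the \(|11\rangle\) signal disappears entirely.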

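2. Basic noise in TFQ

With this understanding of how noise can affect circuit execution, you can explore how noise works in TFQ. TensorFlow Quantum uses Monte Carlo (trajectory-based) simulation as an alternative to density matrix simulation. The reason is memory: with traditional full density matrix methods, the memory cost limits simulations to 20 qubits or fewer. Monte Carlo trajectories trade this memory cost for additional time cost. The backend='noisy' option is available for tfq.layers.Sample, tfq.layers.SampledExpectation and tfq.layers.Expectation. In the case of Expectation, it adds a required repetitions parameter.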
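The following sketch makes the memory/time trade-off concrete. It is plain NumPy, not TFQ's actual simulator. It estimates the noisy X-wall distribution from section 1.2 with Monte Carlo trajectories. Each trajectory stores only a 2ⁿ-entry state vector and randomly inserts X, Y or Z on each qubit with probability p, assuming the depolarizing model used above. Averaged over many trajectories, the outcome probabilities converge to the diagonal of ρ. The helper `memory_bytes` is a hypothetical illustration of the 2ⁿ vs 4ⁿ storage cost with 8-byte complex64 entries.

```python
import numpy as np

I = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def memory_bytes(n_qubits, bytes_per_entry=8):
    """(state vector, density matrix) storage in bytes: 2**n vs 4**n complex64 entries."""
    return bytes_per_entry * 2 ** n_qubits, bytes_per_entry * 4 ** n_qubits

def trajectory_estimate(p, n_trajectories=10000, seed=0):
    """Monte Carlo estimate of P(00), P(01), P(10), P(11) for the 2-qubit X wall + depolarize(p)."""
    rng = np.random.default_rng(seed)
    ideal = np.kron(X, X) @ np.array([1, 0, 0, 0], dtype=complex)  # the X wall maps |00> to |11>
    totals = np.zeros(4)
    for _ in range(n_trajectories):
        # One noisy realization: each qubit independently gets X, Y or Z with total probability p.
        errors = [(X, Y, Z)[rng.integers(3)] if rng.random() < p else I for _ in range(2)]
        psi = np.kron(errors[0], errors[1]) @ ideal  # a pure state: only 2**n amplitudes stored
        totals += np.abs(psi) ** 2
    return totals / n_trajectories

print(memory_bytes(20))  # (8388608, 8796093022208): about 8 MiB vs about 8 TiB
print(np.round(trajectory_estimate(0.5), 3))
```

The estimate approaches [0.111, 0.222, 0.222, 0.444], the same diagonal as the density matrix. It never stores anything larger than a state vector. The price is repeating the simulation once per trajectory.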

2.1 Noisy sampling in TFQ

To recreate the plots above using TFQ and trajectory simulation, you can use tfq.layers.Sample:

"""Draw bitstring samples from `my_noisy_circuit`"""

bitstrings = tfq.layers.Sample(backend='noisy')(my_noisy_circuit, repetitions=1000)

numeric_values = np.einsum('ijk,k->ij', bitstrings.to_tensor().numpy(), [1, 2])[0]

freqs, _ = np.histogram(numeric_values, bins=[i+0.01 for i in range(-1,2** len(my_qubits))])

plt.figure(figsize=(10,5))

plt.title('Noisy Circuit Sampling')

plt.xlabel('Bitstring')

plt.ylabel('Frequency')

plt.bar([i for i in range(2** len(my_qubits))], freqs, tick_label=['00','01','10','11'])

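The `np.einsum('ijk,k->ij', ..., weights)` call collapses the last axis, which holds one bit per qubit, into one integer per sample. The NumPy-only sketch below uses a hand-written, hypothetical batch of bitstrings. It is shaped like `bitstrings.to_tensor().numpy()` (circuits × repetitions × qubits) and shows how the weight vector decides which qubit becomes the most significant bit.

```python
import numpy as np

# Hypothetical samples: 1 circuit, 4 repetitions, 2 qubits (qubit 0 first on the last axis).
bits = np.array([[[0, 0], [0, 1], [1, 0], [1, 1]]])

little_endian = np.einsum('ijk,k->ij', bits, [1, 2])[0]  # qubit 0 is the least significant bit
big_endian = np.einsum('ijk,k->ij', bits, [2, 1])[0]     # qubit 0 is the most significant bit
print(little_endian)  # [0 2 1 3]
print(big_endian)     # [0 1 2 3]
```

For the symmetric distribution in this example both weightings produce the same histogram. With asymmetric noise, however, the weights determine which bar ends up labelled '01' and which '10'.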

2.2 Noisy sample-based expectation

To compute noisy sample-based expectation values, you can use tfq.layers.SampledExpectation:

some_observables = [cirq.X(my_qubits[0]), cirq.Z(my_qubits[0]), 3.0 * cirq.Y(my_qubits[1]) + 1]

some_observables

[cirq.X(cirq.GridQubit(0, 0)),
 cirq.Z(cirq.GridQubit(0, 0)),
 cirq.PauliSum(cirq.LinearDict({frozenset({(cirq.GridQubit(0, 1), cirq.Y)}): (3+0j), frozenset(): (1+0j)}))]

Compute noiseless expectation estimates by sampling from the circuit:

noiseless_sampled_expectation = tfq.layers.SampledExpectation(backend='noiseless')(

my_circuit, operators=some_observables, repetitions=10000

)

noiseless_sampled_expectation.numpy()

array([[-0.0066, -1. , 0.9892]], dtype=float32)
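The small deviations from the exact values (0 for X, -1 for Z, and 1 for 3Y + 1 on \(|11\rangle\)) are shot noise. Assume each shot returns an eigenvalue ±1 of the measured Pauli P. A single shot then has variance \(1 - \langle P\rangle^2\), and the standard error after N repetitions is \(|c|\sqrt{(1 - \langle P\rangle^2)/N}\) for a coefficient c. The sketch below evaluates that formula for the three observables. It is a back-of-the-envelope estimate, not how TFQ computes its results.

```python
import math

def shot_noise_std_error(coefficient, true_expectation, repetitions):
    """Standard error of estimating <coefficient * P> from `repetitions` shots.

    Assumes P is a single Pauli operator, so each shot yields +1 or -1 and the
    single-shot variance is 1 - <P>**2. A constant offset (the "+ 1") adds no variance.
    """
    variance_per_shot = coefficient ** 2 * (1 - true_expectation ** 2)
    return math.sqrt(variance_per_shot / repetitions)

# Exact noiseless values on |11>: <X(q0)> = 0, <Z(q0)> = -1, <Y(q1)> = 0 (so <3 Y(q1) + 1> = 1).
for name, coeff, exact in [('X(q0)', 1.0, 0.0), ('Z(q0)', 1.0, -1.0), ('3*Y(q1) + 1', 3.0, 0.0)]:
    print(name, shot_noise_std_error(coeff, exact, repetitions=10000))
```

With 10,000 repetitions the standard error is about 0.01 for X and 0.03 for 3Y + 1, consistent with the -0.0066 and 0.9892 estimates above. It is exactly 0 for Z, because every shot of Z on \(|1\rangle\) returns -1.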

Compare those with the noisy versions:

noisy_sampled_expectation = tfq.layers.SampledExpectation(backend='noisy')(

[my_noisy_circuit, my_really_noisy_circuit], operators=some_observables, repetitions=10000

)

noisy_sampled_expectation.numpy()


array([[-0.0034 , -0.34820002, 0.97959995],
       [-0.0118 , 0.0042 , 1.015 ]], dtype=float32)

You can see that the noise has particularly affected the accuracy of \(\langle \psi | Z | \psi \rangle\), with my_really_noisy_circuit concentrating very quickly towards 0.
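You can check the size of this effect by hand. Assume the standard depolarizing channel (X, Y and Z each with probability p/3). Using \(XZX = YZY = -Z\), \(ZZZ = Z\) and the cyclicity of the trace, the expectation value of Z on one qubit shrinks by a constant factor:

```latex
\begin{aligned}
\mathcal{E}_p(\rho) &= (1-p)\,\rho + \tfrac{p}{3}\left(X\rho X + Y\rho Y + Z\rho Z\right) \\
\operatorname{Tr}\!\left[Z\,\mathcal{E}_p(\rho)\right]
  &= (1-p)\langle Z\rangle + \tfrac{p}{3}\left(-\langle Z\rangle - \langle Z\rangle + \langle Z\rangle\right)
   = \left(1 - \tfrac{4p}{3}\right)\langle Z\rangle
\end{aligned}
```

For the X wall, \(\langle Z\rangle = -1\) on each qubit, so the prediction is \(-(1 - 4p/3)\). That gives \(-1/3 \approx -0.333\) for p = 0.5 and exactly 0 for p = 0.75, consistent with the estimates above. The same factor multiplies \(\langle X\rangle\) and \(\langle Y\rangle\). Both are already 0 for \(|1\rangle\), which is why the first and third observables are essentially unaffected.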

2.3 Noisy analytic expectation

Computing noisy analytic expectation values is nearly identical to the above:

noiseless_analytic_expectation = tfq.layers.Expectation(backend='noiseless')(

my_circuit, operators=some_observables

)

noiseless_analytic_expectation.numpy()

array([[ 1.9106853e-15, -1.0000000e+00, 1.0000002e+00]], dtype=float32)

noisy_analytic_expectation = tfq.layers.Expectation(backend='noisy')(

[my_noisy_circuit, my_really_noisy_circuit], operators=some_observables, repetitions=10000

)

noisy_analytic_expectation.numpy()

array([[ 1.9106853e-15, -3.3100003e-01, 1.0000000e+00],
       [ 1.9106855e-15, 5.0000018e-03, 1.0000000e+00]], dtype=float32)

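3. Hybrid models and quantum data noise

Now that you have implemented some noisy circuit simulations in TFQ, you can experiment with how noise affects quantum and hybrid quantum-classical models by comparing and contrasting their noisy and noiseless performance. A good first check of whether a model or algorithm is robust to noise is to test it under a circuit-wide depolarizing model.

In this model, every time slice of the circuit (sometimes called a moment) has a depolarizing channel appended after each gate operation in that time slice. The depolarizing channel applies one of \(\{X, Y, Z \}\), chosen uniformly, with total probability \(p\), or applies nothing (keeping the original operation) with probability \(1-p\).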
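This model shows why even a small p matters. Assume one channel per qubit per moment. A circuit with q qubits and m moments then has q·m independent noise locations, and the probability that a single run is completely error-free is \((1-p)^{q m}\). The sketch below evaluates that expression. The moment count in the example is a hypothetical value, not measured from the circuits used in this tutorial.

```python
def error_free_probability(p, n_qubits, n_moments):
    """Probability that no depolarizing channel fires, assuming one channel per qubit per moment.

    Each channel independently applies a Pauli error with probability p.
    """
    n_locations = n_qubits * n_moments
    return (1 - p) ** n_locations

# Hypothetical sizes: 8 qubits (as in the data below) and an assumed 20 moments.
for p in (0.001, 0.01, 0.05):
    print(p, round(error_free_probability(p, n_qubits=8, n_moments=20), 3))
```

The error-free fraction decays exponentially in the number of noise locations. Deeper or wider circuits therefore become noticeably noisier even when p per channel is small.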

3.1 Data

For this example, you can use some prepared circuits from the tfq.datasets module as training data:

qubits = cirq.GridQubit.rect(1, 8)

circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')

circuits[0]

Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/quantum/spin_systems/XXZ_chain.zip 184449737/184449737 [==============================] - 2s 0us/step

A small helper function will make it easy to generate data for the noisy and noiseless cases:

def get_data(qubits, depolarize_p=0.):
    """Return quantum data circuits and labels in `tf.Tensor` form."""
    circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')
    if depolarize_p >= 1e-5:
        circuits = [circuit.with_noise(cirq.depolarize(depolarize_p)) for circuit in circuits]
    tmp = list(zip(circuits, labels))
    random.shuffle(tmp)
    circuits_tensor = tfq.convert_to_tensor([x[0] for x in tmp])
    labels_tensor = tf.convert_to_tensor([x[1] for x in tmp])

    return circuits_tensor, labels_tensor

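3.2 Define a model circuit

Now that you have quantum data in the form of circuits, you need a circuit to model this data. As with the data, you can write a helper function that generates this circuit, optionally including noise: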

def modelling_circuit(qubits, depth, depolarize_p=0.):
    """A simple classifier circuit."""
    dim = len(qubits)
    ret = cirq.Circuit(cirq.H.on_each(*qubits))

    for i in range(depth):
        # Entangle layer.
        ret += cirq.Circuit(cirq.CX(q1, q2) for (q1, q2) in zip(qubits[::2], qubits[1::2]))
        ret += cirq.Circuit(cirq.CX(q1, q2) for (q1, q2) in zip(qubits[1::2], qubits[2::2]))
        # Learnable rotation layer.
        # i_params = sympy.symbols(f'layer-{i}-0:{dim}')
        param = sympy.Symbol(f'layer-{i}')
        single_qb = cirq.X
        if i % 2 == 1:
            single_qb = cirq.Y
        ret += cirq.Circuit(single_qb(q) ** param for q in qubits)

    if depolarize_p >= 1e-5:
        ret = ret.with_noise(cirq.depolarize(depolarize_p))

    return ret, [op(q) for q in qubits for op in [cirq.X, cirq.Y, cirq.Z]]

modelling_circuit(qubits, 3)[0]
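The two `zip` expressions in the entangling layer pair neighbouring qubits in a brick-wall pattern: first (0, 1), (2, 3), ..., then the offset pairs (1, 2), (3, 4), .... The sketch below reproduces just that indexing, with plain integers standing in for the eight `GridQubit`s (no Cirq needed). It also counts the shared symbols and readout operators the helper produces at depth 4, the depth `build_keras_model` uses below.

```python
def brick_wall_pairs(n_qubits):
    """Mirror the CX pairings used by `modelling_circuit`, with integers in place of qubits."""
    qubits = list(range(n_qubits))
    even_pairs = list(zip(qubits[::2], qubits[1::2]))  # first CX sub-layer
    odd_pairs = list(zip(qubits[1::2], qubits[2::2]))  # second, offset CX sub-layer
    return even_pairs, odd_pairs

even, odd = brick_wall_pairs(8)
print(even)  # [(0, 1), (2, 3), (4, 5), (6, 7)]
print(odd)   # [(1, 2), (3, 4), (5, 6)]

# One shared symbol per layer, and X, Y, Z read out on every qubit.
depth, n_qubits = 4, 8
symbols = [f'layer-{i}' for i in range(depth)]
n_readouts = 3 * n_qubits
print(symbols, n_readouts)  # ['layer-0', 'layer-1', 'layer-2', 'layer-3'] 24
```

Because each layer shares a single symbol across all qubits, the quantum part of the model has only `depth` trainable parameters. It nevertheless produces 24 expectation values for the classical layers to consume.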

3.3 Model building and training

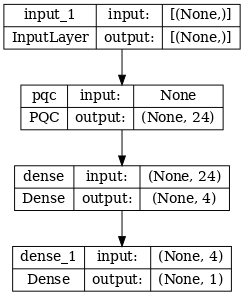

With your data and model circuit built, the final helper function you need is one that assembles either a noisy or a noiseless hybrid quantum tf.keras.Model:

def build_keras_model(qubits, depolarize_p=0.):
    """Prepare a noisy hybrid quantum classical Keras model."""
    spin_input = tf.keras.Input(shape=(), dtype=tf.dtypes.string)

    circuit_and_readout = modelling_circuit(qubits, 4, depolarize_p)
    if depolarize_p >= 1e-5:
        quantum_model = tfq.layers.NoisyPQC(*circuit_and_readout, sample_based=False, repetitions=10)(spin_input)
    else:
        quantum_model = tfq.layers.PQC(*circuit_and_readout)(spin_input)

    intermediate = tf.keras.layers.Dense(4, activation='sigmoid')(quantum_model)
    post_process = tf.keras.layers.Dense(1)(intermediate)

    return tf.keras.Model(inputs=[spin_input], outputs=[post_process])
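The classical part of this model takes the 24 readout expectation values from the PQC layer (3 Paulis × 8 qubits). It passes them through a 4-unit sigmoid Dense layer to a single unbounded logit, which is why the loss below uses from_logits=True. The NumPy sketch below mirrors those shapes using random, hypothetical weights and stand-in expectation values. It is not Keras code and does not reproduce the trained model.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)
batch, n_readouts = 16, 24
# Stand-in for the PQC output: expectation values of Pauli operators lie in [-1, 1].
expectations = rng.uniform(-1, 1, size=(batch, n_readouts))

# Hypothetical weights with the same shapes as Dense(4, activation='sigmoid') and Dense(1).
w1, b1 = rng.normal(size=(n_readouts, 4)), np.zeros(4)
w2, b2 = rng.normal(size=(4, 1)), np.zeros(1)

intermediate = sigmoid(expectations @ w1 + b1)  # shape (16, 4)
logits = intermediate @ w2 + b2                 # shape (16, 1), unbounded
probabilities = sigmoid(logits)                 # the squashing that from_logits=True implies
```

Returning raw logits and letting the loss apply the sigmoid is the usual choice for binary classification in Keras, since the combined computation is numerically more stable.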

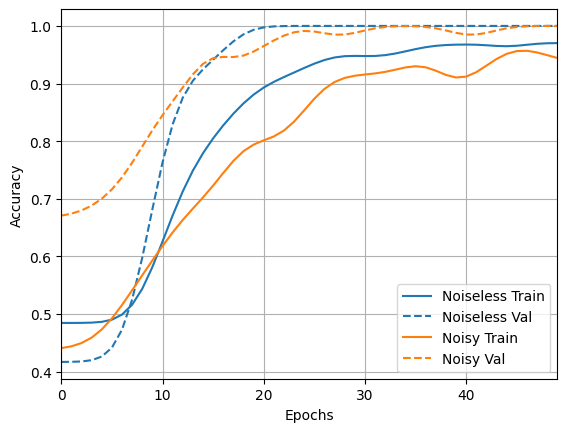

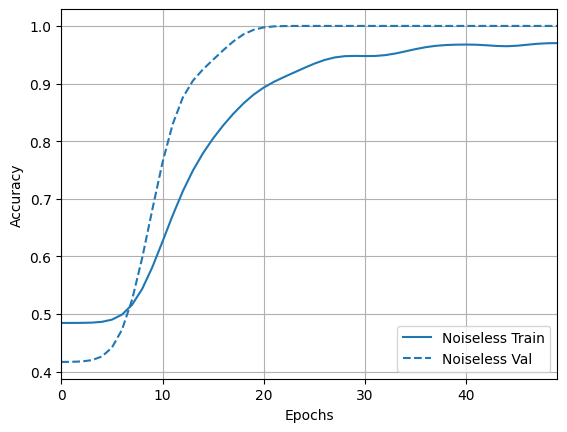

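4. Compare performance

4.1 Noiseless baseline

With your data generation and model building code in place, you can now compare and contrast model performance in the noiseless and noisy settings. First, run a reference noiseless training: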

training_histories = dict()
depolarize_p = 0.
n_epochs = 50
phase_classifier = build_keras_model(qubits, depolarize_p)

phase_classifier.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),
                         loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
                         metrics=['accuracy'])

# Show the keras plot of the model
tf.keras.utils.plot_model(phase_classifier, show_shapes=True, dpi=70)

noiseless_data, noiseless_labels = get_data(qubits, depolarize_p)

training_histories['noiseless'] = phase_classifier.fit(x=noiseless_data,
                                                        y=noiseless_labels,
                                                        batch_size=16,
                                                        epochs=n_epochs,
                                                        validation_split=0.15,
                                                        verbose=1)

Epoch 1/50 4/4 [==============================] - 1s 129ms/step - loss: 0.6970 - accuracy: 0.4844 - val_loss: 0.6656 - val_accuracy: 0.4167
Epoch 2/50 4/4 [==============================] - 0s 62ms/step - loss: 0.6841 - accuracy: 0.4844 - val_loss: 0.6620 - val_accuracy: 0.4167
Epoch 3/50 4/4 [==============================] - 0s 66ms/step - loss: 0.6754 - accuracy: 0.4844 - val_loss: 0.6578 - val_accuracy: 0.4167
Epoch 4/50 4/4 [==============================] - 0s 62ms/step - loss: 0.6622 - accuracy: 0.4844 - val_loss: 0.6480 - val_accuracy: 0.4167
Epoch 5/50 4/4 [==============================] - 0s 63ms/step - loss: 0.6539 - accuracy: 0.4844 - val_loss: 0.6344 - val_accuracy: 0.4167
Epoch 6/50 4/4 [==============================] - 0s 62ms/step - loss: 0.6417 - accuracy: 0.4844 - val_loss: 0.6193 - val_accuracy: 0.4167
Epoch 7/50 4/4 [==============================] - 0s 61ms/step - loss: 0.6276 - accuracy: 0.4844 - val_loss: 0.6020 - val_accuracy: 0.4167
Epoch 8/50 4/4 [==============================] - 0s 60ms/step - loss: 0.6129 - accuracy: 0.4844 - val_loss: 0.5817 - val_accuracy: 0.4167
Epoch 9/50 4/4 [==============================] - 0s 61ms/step - loss: 0.5952 - accuracy: 0.5000 - val_loss: 0.5595 - val_accuracy: 0.6667
Epoch 10/50 4/4 [==============================] - 0s 60ms/step - loss: 0.5758 - accuracy: 0.6250 - val_loss: 0.5357 - val_accuracy: 0.7500
Epoch 11/50 4/4 [==============================] - 0s 61ms/step - loss: 0.5531 - accuracy: 0.6562 - val_loss: 0.5101 - val_accuracy: 0.9167
Epoch 12/50 4/4 [==============================] - 0s 59ms/step - loss: 0.5314 - accuracy: 0.7031 - val_loss: 0.4837 - val_accuracy: 0.9167
Epoch 13/50 4/4 [==============================] - 0s 59ms/step - loss: 0.5048 - accuracy: 0.7656 - val_loss: 0.4573 - val_accuracy: 0.9167
Epoch 14/50 4/4 [==============================] - 0s 59ms/step - loss: 0.4801 - accuracy: 0.7812 - val_loss: 0.4296 - val_accuracy: 0.9167
Epoch 15/50 4/4 [==============================] - 0s 61ms/step - loss: 0.4558 - accuracy: 0.7812 - val_loss: 0.4025 - val_accuracy: 0.9167
Epoch 16/50 4/4 [==============================] - 0s 60ms/step - loss: 0.4295 - accuracy: 0.8281 - val_loss: 0.3758 - val_accuracy: 0.9167
Epoch 17/50 4/4 [==============================] - 0s 59ms/step - loss: 0.4047 - accuracy: 0.8438 - val_loss: 0.3518 - val_accuracy: 1.0000
Epoch 18/50 4/4 [==============================] - 0s 60ms/step - loss: 0.3803 - accuracy: 0.8594 - val_loss: 0.3289 - val_accuracy: 1.0000
Epoch 19/50 4/4 [==============================] - 0s 61ms/step - loss: 0.3571 - accuracy: 0.8750 - val_loss: 0.3087 - val_accuracy: 1.0000
Epoch 20/50 4/4 [==============================] - 0s 60ms/step - loss: 0.3358 - accuracy: 0.9062 - val_loss: 0.2889 - val_accuracy: 1.0000
Epoch 21/50 4/4 [==============================] - 0s 60ms/step - loss: 0.3169 - accuracy: 0.9062 - val_loss: 0.2698 - val_accuracy: 1.0000
Epoch 22/50 4/4 [==============================] - 0s 60ms/step - loss: 0.2975 - accuracy: 0.9062 - val_loss: 0.2526 - val_accuracy: 1.0000
Epoch 23/50 4/4 [==============================] - 0s 60ms/step - loss: 0.2826 - accuracy: 0.9062 - val_loss: 0.2349 - val_accuracy: 1.0000
Epoch 24/50 4/4 [==============================] - 0s 59ms/step - loss: 0.2642 - accuracy: 0.9219 - val_loss: 0.2246 - val_accuracy: 1.0000
Epoch 25/50 4/4 [==============================] - 0s 60ms/step - loss: 0.2503 - accuracy: 0.9375 - val_loss: 0.2138 - val_accuracy: 1.0000
Epoch 26/50 4/4 [==============================] - 0s 60ms/step - loss: 0.2378 - accuracy: 0.9375 - val_loss: 0.2049 - val_accuracy: 1.0000
Epoch 27/50 4/4 [==============================] - 0s 59ms/step - loss: 0.2265 - accuracy: 0.9531 - val_loss: 0.1962 - val_accuracy: 1.0000
Epoch 28/50 4/4 [==============================] - 0s 59ms/step - loss: 0.2158 - accuracy: 0.9531 - val_loss: 0.1866 - val_accuracy: 1.0000
Epoch 29/50 4/4 [==============================] - 0s 59ms/step - loss: 0.2066 - accuracy: 0.9531 - val_loss: 0.1747 - val_accuracy: 1.0000
Epoch 30/50 4/4 [==============================] - 0s 60ms/step - loss: 0.1975 - accuracy: 0.9531 - val_loss: 0.1639 - val_accuracy: 1.0000
Epoch 31/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1909 - accuracy: 0.9375 - val_loss: 0.1539 - val_accuracy: 1.0000
Epoch 32/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1819 - accuracy: 0.9375 - val_loss: 0.1524 - val_accuracy: 1.0000
Epoch 33/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1757 - accuracy: 0.9531 - val_loss: 0.1474 - val_accuracy: 1.0000
Epoch 34/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1690 - accuracy: 0.9531 - val_loss: 0.1460 - val_accuracy: 1.0000
Epoch 35/50 4/4 [==============================] - 0s 58ms/step - loss: 0.1656 - accuracy: 0.9531 - val_loss: 0.1391 - val_accuracy: 1.0000
Epoch 36/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1594 - accuracy: 0.9688 - val_loss: 0.1390 - val_accuracy: 1.0000
Epoch 37/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1547 - accuracy: 0.9688 - val_loss: 0.1550 - val_accuracy: 1.0000
Epoch 38/50 4/4 [==============================] - 0s 60ms/step - loss: 0.1542 - accuracy: 0.9688 - val_loss: 0.1244 - val_accuracy: 1.0000
Epoch 39/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1467 - accuracy: 0.9688 - val_loss: 0.1275 - val_accuracy: 1.0000
Epoch 40/50 4/4 [==============================] - 0s 60ms/step - loss: 0.1485 - accuracy: 0.9688 - val_loss: 0.1254 - val_accuracy: 1.0000
Epoch 41/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1421 - accuracy: 0.9531 - val_loss: 0.1239 - val_accuracy: 1.0000
Epoch 42/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1453 - accuracy: 0.9844 - val_loss: 0.1243 - val_accuracy: 1.0000
Epoch 43/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1333 - accuracy: 0.9844 - val_loss: 0.1065 - val_accuracy: 1.0000
Epoch 44/50 4/4 [==============================] - 0s 58ms/step - loss: 0.1361 - accuracy: 0.9375 - val_loss: 0.0930 - val_accuracy: 1.0000
Epoch 45/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1303 - accuracy: 0.9531 - val_loss: 0.1082 - val_accuracy: 1.0000
Epoch 46/50 4/4 [==============================] - 0s 60ms/step - loss: 0.1237 - accuracy: 0.9688 - val_loss: 0.1091 - val_accuracy: 1.0000
Epoch 47/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1202 - accuracy: 0.9844 - val_loss: 0.1053 - val_accuracy: 1.0000
Epoch 48/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1169 - accuracy: 0.9688 - val_loss: 0.0991 - val_accuracy: 1.0000
Epoch 49/50 4/4 [==============================] - 0s 59ms/step - loss: 0.1142 - accuracy: 0.9688 - val_loss: 0.0982 - val_accuracy: 1.0000
Epoch 50/50 4/4 [==============================] - 0s 61ms/step - loss: 0.1129 - accuracy: 0.9688 - val_loss: 0.1005 - val_accuracy: 1.0000

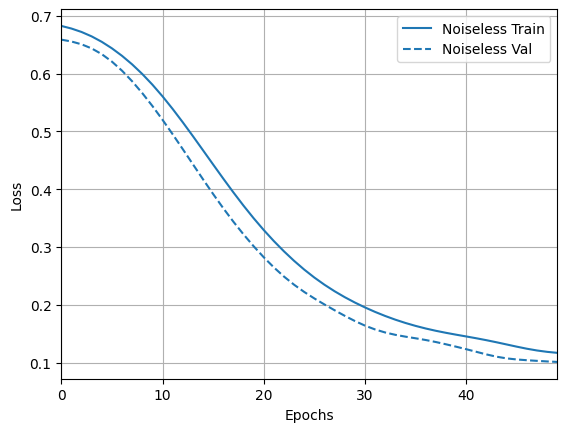

Then explore the resulting loss and accuracy:

loss_plotter = tfdocs.plots.HistoryPlotter(metric = 'loss', smoothing_std=10)

loss_plotter.plot(training_histories)

acc_plotter = tfdocs.plots.HistoryPlotter(metric = 'accuracy', smoothing_std=10)

acc_plotter.plot(training_histories)

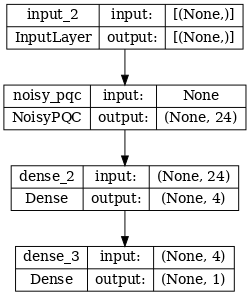

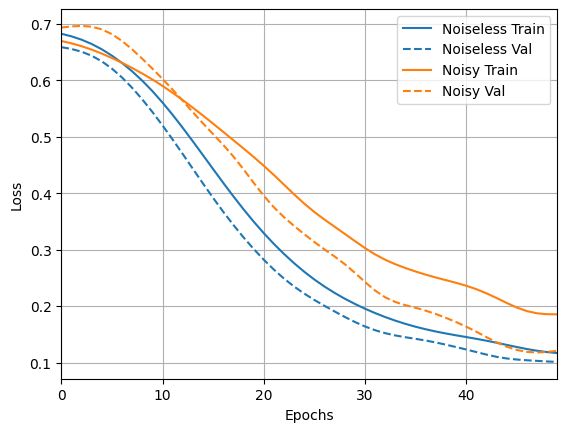

4.2 Noisy comparison

Now you can build a new model with a noisy structure and compare it to the one above. The code is nearly identical:

depolarize_p = 0.001
n_epochs = 50
noisy_phase_classifier = build_keras_model(qubits, depolarize_p)

noisy_phase_classifier.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),
                               loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
                               metrics=['accuracy'])

# Show the keras plot of the model
tf.keras.utils.plot_model(noisy_phase_classifier, show_shapes=True, dpi=70)

noisy_data, noisy_labels = get_data(qubits, depolarize_p)

training_histories['noisy'] = noisy_phase_classifier.fit(x=noisy_data,
                                                         y=noisy_labels,
                                                         batch_size=16,
                                                         epochs=n_epochs,
                                                         validation_split=0.15,
                                                         verbose=1)

Epoch 1/50 4/4 [==============================] - 9s 1s/step - loss: 0.6851 - accuracy: 0.4375 - val_loss: 0.6770 - val_accuracy: 0.6667
Epoch 2/50 4/4 [==============================] - 5s 1s/step - loss: 0.6696 - accuracy: 0.4375 - val_loss: 0.6996 - val_accuracy: 0.6667
Epoch 3/50 4/4 [==============================] - 5s 1s/step - loss: 0.6609 - accuracy: 0.4375 - val_loss: 0.7085 - val_accuracy: 0.6667
Epoch 4/50 4/4 [==============================] - 5s 1s/step - loss: 0.6501 - accuracy: 0.4375 - val_loss: 0.7097 - val_accuracy: 0.6667
Epoch 5/50 4/4 [==============================] - 5s 1s/step - loss: 0.6461 - accuracy: 0.4844 - val_loss: 0.6984 - val_accuracy: 0.7500
Epoch 6/50 4/4 [==============================] - 5s 1s/step - loss: 0.6379 - accuracy: 0.4844 - val_loss: 0.6806 - val_accuracy: 0.6667
Epoch 7/50 4/4 [==============================] - 5s 1s/step - loss: 0.6281 - accuracy: 0.5156 - val_loss: 0.6689 - val_accuracy: 0.7500
Epoch 8/50 4/4 [==============================] - 5s 1s/step - loss: 0.6176 - accuracy: 0.5781 - val_loss: 0.6502 - val_accuracy: 0.7500
Epoch 9/50 4/4 [==============================] - 5s 1s/step - loss: 0.6082 - accuracy: 0.5938 - val_loss: 0.6304 - val_accuracy: 0.8333
Epoch 10/50 4/4 [==============================] - 5s 1s/step - loss: 0.5994 - accuracy: 0.5781 - val_loss: 0.6079 - val_accuracy: 0.8333
Epoch 11/50 4/4 [==============================] - 5s 1s/step - loss: 0.5889 - accuracy: 0.6250 - val_loss: 0.5922 - val_accuracy: 0.9167
Epoch 12/50 4/4 [==============================] - 5s 1s/step - loss: 0.5698 - accuracy: 0.6875 - val_loss: 0.5856 - val_accuracy: 0.8333
Epoch 13/50 4/4 [==============================] - 5s 1s/step - loss: 0.5624 - accuracy: 0.6875 - val_loss: 0.5666 - val_accuracy: 0.8333
Epoch 14/50 4/4 [==============================] - 5s 1s/step - loss: 0.5419 - accuracy: 0.6719 - val_loss: 0.5141 - val_accuracy: 1.0000
Epoch 15/50 4/4 [==============================] - 5s 1s/step - loss: 0.5321 - accuracy: 0.7188 - val_loss: 0.5024 - val_accuracy: 1.0000
Epoch 16/50 4/4 [==============================] - 5s 1s/step - loss: 0.5228 - accuracy: 0.6875 - val_loss: 0.4970 - val_accuracy: 1.0000
Epoch 17/50 4/4 [==============================] - 5s 1s/step - loss: 0.4946 - accuracy: 0.7812 - val_loss: 0.4924 - val_accuracy: 0.9167
Epoch 18/50 4/4 [==============================] - 5s 1s/step - loss: 0.4873 - accuracy: 0.7969 - val_loss: 0.4714 - val_accuracy: 0.8333
Epoch 19/50 4/4 [==============================] - 5s 1s/step - loss: 0.4708 - accuracy: 0.8281 - val_loss: 0.4329 - val_accuracy: 1.0000
Epoch 20/50 4/4 [==============================] - 5s 1s/step - loss: 0.4628 - accuracy: 0.7969 - val_loss: 0.3888 - val_accuracy: 1.0000
Epoch 21/50 4/4 [==============================] - 5s 1s/step - loss: 0.4419 - accuracy: 0.7969 - val_loss: 0.3807 - val_accuracy: 0.9167
Epoch 22/50 4/4 [==============================] - 5s 1s/step - loss: 0.4307 - accuracy: 0.7969 - val_loss: 0.3384 - val_accuracy: 1.0000
Epoch 23/50 4/4 [==============================] - 5s 1s/step - loss: 0.4010 - accuracy: 0.7969 - val_loss: 0.3665 - val_accuracy: 1.0000
Epoch 24/50 4/4 [==============================] - 5s 1s/step - loss: 0.3922 - accuracy: 0.8125 - val_loss: 0.3271 - val_accuracy: 1.0000
Epoch 25/50 4/4 [==============================] - 5s 1s/step - loss: 0.3638 - accuracy: 0.8906 - val_loss: 0.3407 - val_accuracy: 1.0000
Epoch 26/50 4/4 [==============================] - 5s 1s/step - loss: 0.3456 - accuracy: 0.9062 - val_loss: 0.2935 - val_accuracy: 1.0000
Epoch 27/50 4/4 [==============================] - 5s 1s/step - loss: 0.3580 - accuracy: 0.9219 - val_loss: 0.2527 - val_accuracy: 1.0000
Epoch 28/50 4/4 [==============================] - 5s 1s/step - loss: 0.3323 - accuracy: 0.9062 - val_loss: 0.3143 - val_accuracy: 0.9167
Epoch 29/50 4/4 [==============================] - 5s 1s/step - loss: 0.3380 - accuracy: 0.9062 - val_loss: 0.3100 - val_accuracy: 1.0000
Epoch 30/50 4/4 [==============================] - 5s 1s/step - loss: 0.2893 - accuracy: 0.9375 - val_loss: 0.2266 - val_accuracy: 1.0000
Epoch 31/50 4/4 [==============================] - 5s 1s/step - loss: 0.3008 - accuracy: 0.9062 - val_loss: 0.2205 - val_accuracy: 1.0000
Epoch 32/50 4/4 [==============================] - 5s 1s/step - loss: 0.2806 - accuracy: 0.9062 - val_loss: 0.2191 - val_accuracy: 1.0000
Epoch 33/50 4/4 [==============================] - 5s 1s/step - loss: 0.2657 - accuracy: 0.9219 - val_loss: 0.1817 - val_accuracy: 1.0000
Epoch 34/50 4/4 [==============================] - 5s 1s/step - loss: 0.2722 - accuracy: 0.9375 - val_loss: 0.2123 - val_accuracy: 1.0000
Epoch 35/50 4/4 [==============================] - 5s 1s/step - loss: 0.2790 - accuracy: 0.8906 - val_loss: 0.1979 - val_accuracy: 1.0000
Epoch 36/50 4/4 [==============================] - 5s 1s/step - loss: 0.2423 - accuracy: 0.9844 - val_loss: 0.2043 - val_accuracy: 1.0000
Epoch 37/50 4/4 [==============================] - 5s 1s/step - loss: 0.2493 - accuracy: 0.9688 - val_loss: 0.1863 - val_accuracy: 1.0000
Epoch 38/50 4/4 [==============================] - 5s 1s/step - loss: 0.2604 - accuracy: 0.8906 - val_loss: 0.1987 - val_accuracy: 1.0000
Epoch 39/50 4/4 [==============================] - 5s 1s/step - loss: 0.2329 - accuracy: 0.8906 - val_loss: 0.1580 - val_accuracy: 1.0000
Epoch 40/50 4/4 [==============================] - 5s 1s/step - loss: 0.2420 - accuracy: 0.8906 - val_loss: 0.1558 - val_accuracy: 1.0000
Epoch 41/50 4/4 [==============================] - 5s 1s/step - loss: 0.2384 - accuracy: 0.8750 - val_loss: 0.2039 - val_accuracy: 0.9167
Epoch 42/50 4/4 [==============================] - 5s 1s/step - loss: 0.2396 - accuracy: 0.9375 - val_loss: 0.1347 - val_accuracy: 1.0000
Epoch 43/50 4/4 [==============================] - 5s 1s/step - loss: 0.2038 - accuracy: 0.9531 - val_loss: 0.1266 - val_accuracy: 1.0000
Epoch 44/50 4/4 [==============================] - 5s 1s/step - loss: 0.2223 - accuracy: 0.9531 - val_loss: 0.1334 - val_accuracy: 1.0000
Epoch 45/50 4/4 [==============================] - 5s 1s/step - loss: 0.2050 - accuracy: 0.9688 - val_loss: 0.1155 - val_accuracy: 1.0000
Epoch 46/50 4/4 [==============================] - 5s 1s/step - loss: 0.1815 - accuracy: 0.9531 - val_loss: 0.1298 - val_accuracy: 1.0000
Epoch 47/50 4/4 [==============================] - 5s 1s/step - loss: 0.1666 - accuracy: 1.0000 - val_loss: 0.0986 - val_accuracy: 1.0000
Epoch 48/50 4/4 [==============================] - 5s 1s/step - loss: 0.1885 - accuracy: 0.9375 - val_loss: 0.0958 - val_accuracy: 1.0000
Epoch 49/50 4/4 [==============================] - 5s 1s/step - loss: 0.1865 - accuracy: 0.9219 - val_loss: 0.1410 - val_accuracy: 1.0000
Epoch 50/50 4/4 [==============================] - 5s 1s/step - loss: 0.1887 - accuracy: 0.9375 - val_loss: 0.1307 - val_accuracy: 1.0000

loss_plotter.plot(training_histories)

acc_plotter.plot(training_histories)