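In this example you will explore the result of McClean (2019), which says that not just any quantum neural network structure will do well when it comes to learning. In particular, you will see that a certain large family of random quantum circuits does not serve as good quantum neural networks, because their gradients vanish almost everywhere. In this example you will not train any models for a specific learning problem; instead you will focus on the simpler problem of understanding the behavior of gradients.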

Setup

pip install tensorflow==2.15.0

Install TensorFlow Quantum:

pip install tensorflow-quantum==0.7.3

# Update package resources to account for version changes.

import importlib, pkg_resources

importlib.reload(pkg_resources)


Now import TensorFlow and the module dependencies:

import tensorflow as tf

import tensorflow_quantum as tfq

import cirq

import sympy

import numpy as np

# visualization tools

%matplotlib inline

import matplotlib.pyplot as plt

from cirq.contrib.svg import SVGCircuit

np.random.seed(1234)


1. Summary

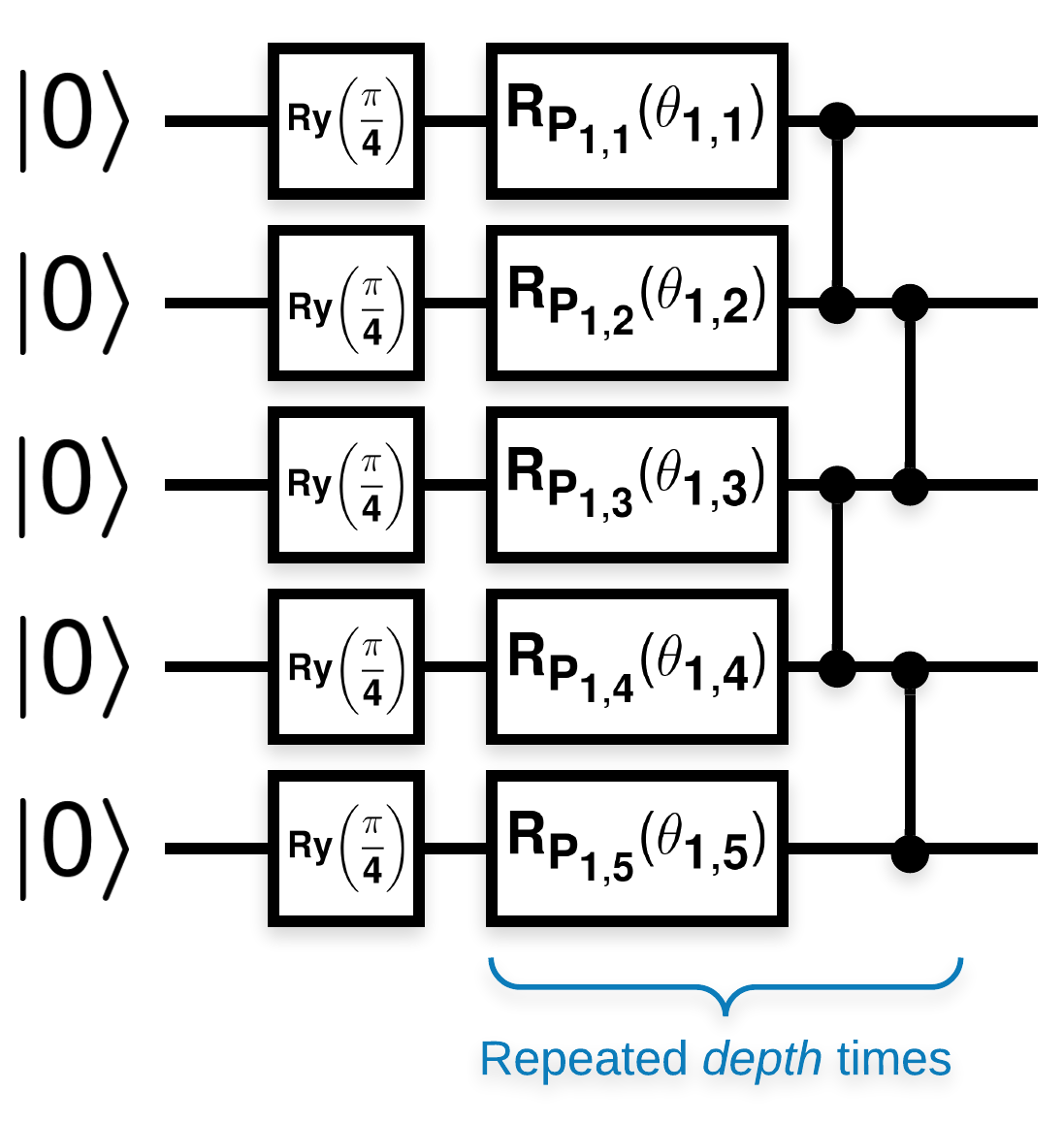

具有許多區塊的隨機量子電路,外觀如下 (\(R_{P}(\theta)\) 是隨機 Pauli 旋轉)

其中,如果 \(f(x)\) 定義為關於任何量子位元 \(a\) 和 \(b\) 的 \(Z_{a}Z_{b}\) 的期望值,則會出現 \(f'(x)\) 的平均值非常接近 0 且變化不大的問題。您將在下方看到這一點
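Stated a little more formally, and only as a sketch: the symbols \(n\) (number of qubits) and \(b\) (a decay base) are introduced here for illustration, and the statement assumes the random circuits are deep enough to behave like the well-mixed ensembles studied in the paper. Under that assumption, the statistics of the gradient over the ensemble of circuits behave as

```latex
\[
\mathbb{E}\left[\partial_{\theta} f\right] = 0,
\qquad
\mathrm{Var}\left[\partial_{\theta} f\right] \in O\!\left(b^{-n}\right)
\quad \text{for some } b > 1 .
\]
```

A zero mean together with an exponentially shrinking variance means that a randomly initialized circuit almost always starts where the gradient is too small to guide training.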

2. Generating random circuits

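The construction from the paper is straightforward to follow. The following implements a simple function that generates a random quantum circuit, sometimes referred to as a quantum neural network (QNN), with the given depth on a set of qubits: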

def generate_random_qnn(qubits, symbol, depth):
    """Generate random QNN's with the same structure from McClean et al."""
    circuit = cirq.Circuit()
    for qubit in qubits:
        circuit += cirq.ry(np.pi / 4.0)(qubit)

    for d in range(depth):
        # Add a series of single qubit rotations.
        for i, qubit in enumerate(qubits):
            random_n = np.random.uniform()
            # Only the first rotation on the first qubit keeps the symbol.
            random_rot = (np.random.uniform() * 2.0 * np.pi
                          if i != 0 or d != 0 else symbol)
            if random_n > 2. / 3.:
                # Add a Z.
                circuit += cirq.rz(random_rot)(qubit)
            elif random_n > 1. / 3.:
                # Add a Y.
                circuit += cirq.ry(random_rot)(qubit)
            else:
                # Add a X.
                circuit += cirq.rx(random_rot)(qubit)

        # Add CZ ladder.
        for src, dest in zip(qubits, qubits[1:]):
            circuit += cirq.CZ(src, dest)

    return circuit


generate_random_qnn(cirq.GridQubit.rect(1, 3), sympy.Symbol('theta'), 2)

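The authors investigate the gradient of a single parameter \(\theta_{1,1}\). Let's follow along by placing a sympy.Symbol in the circuit where \(\theta_{1,1}\) would be. Since the authors do not analyze the statistics for any other symbols in the circuit, replace them with random values now instead of later.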
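To make the placement of the symbol concrete, here is a minimal sketch in plain Python that mirrors the sampling logic of generate_random_qnn without building a cirq circuit. The helper name `plan_random_qnn` and the string `"theta"` standing in for the sympy symbol are assumptions made for illustration, and the random stream is not the same as the one the notebook consumes.

```python
import math
import random


def plan_random_qnn(n_qubits, depth, rng):
    """Return one list per layer, holding an (axis, angle) pair per qubit.

    Hypothetical helper: it only records which rotation would be applied.
    """
    layers = []
    for d in range(depth):
        layer = []
        for i in range(n_qubits):
            # Each Pauli axis is picked with probability 1/3.
            axis = rng.choice("xyz")
            # Only the first rotation on the first qubit keeps the symbol;
            # every other angle is fixed to a random value right away.
            if i == 0 and d == 0:
                angle = "theta"
            else:
                angle = rng.uniform(0.0, 2.0 * math.pi)
            layer.append((axis, angle))
        layers.append(layer)
    return layers


rng = random.Random(1234)
plan = plan_random_qnn(n_qubits=3, depth=2, rng=rng)
for d, layer in enumerate(plan):
    print(d, [(axis, a if isinstance(a, str) else round(a, 3)) for axis, a in layer])
```

Only the entry at layer 0, qubit 0 carries the symbol, so every generated circuit has exactly one free parameter whose gradient can be studied.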

3. Running the circuits

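Generate a few of these circuits along with an observable to test the claim that the gradients do not vary much. First, generate a batch of random circuits. Choose a random ZZ observable and batch calculate the gradients and their variance using TensorFlow Quantum.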
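The same experiment can be cross-checked on a small scale without TensorFlow Quantum. The following is a minimal sketch, assuming a plain NumPy statevector simulation and the parameter-shift rule for the derivative; all function names are hypothetical, and the sizes (20 layers, 40 circuits) are chosen smaller than the notebook's so that it runs quickly.

```python
import numpy as np

PAULI = {
    "x": np.array([[0, 1], [1, 0]], dtype=complex),
    "y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def rot(axis, angle):
    """Rotation exp(-i * angle / 2 * P), the convention of cirq.rx/ry/rz."""
    return np.cos(angle / 2) * np.eye(2) - 1j * np.sin(angle / 2) * PAULI[axis]


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q of a state stored with shape (2,) * n."""
    return np.moveaxis(np.tensordot(gate, state, axes=([1], [q])), 0, q)


def apply_cz(state, a, b):
    """Flip the sign of every amplitude where qubits a and b are both 1."""
    index = [slice(None)] * state.ndim
    index[a], index[b] = 1, 1
    state = state.copy()
    state[tuple(index)] *= -1
    return state


def sample_layers(n, depth, rng):
    """Random (axis, angle) per layer and qubit; angle None marks theta."""
    return [[(rng.choice(["x", "y", "z"]),
              None if (d == 0 and i == 0) else rng.uniform(0, 2 * np.pi))
             for i in range(n)] for d in range(depth)]


def expect_zz(n, layers, theta):
    """<Z_0 Z_1> after Ry(pi/4) on every qubit followed by the layers."""
    state = np.zeros((2,) * n, dtype=complex)
    state[(0,) * n] = 1.0
    for q in range(n):
        state = apply_1q(state, rot("y", np.pi / 4), q)
    for layer in layers:
        for q, (axis, angle) in enumerate(layer):
            state = apply_1q(state, rot(axis, theta if angle is None else angle), q)
        for q in range(n - 1):
            state = apply_cz(state, q, q + 1)
    # Marginal probabilities of qubits 0 and 1, weighted by Z_0 Z_1 eigenvalues.
    probs = (np.abs(state) ** 2).reshape(2, 2, -1).sum(axis=2)
    signs = np.array([[1.0, -1.0], [-1.0, 1.0]])
    return float(np.sum(probs * signs))


def grad_theta(n, layers, theta):
    """Parameter-shift rule, exact for a gate of the form exp(-i * theta / 2 * P)."""
    return 0.5 * (expect_zz(n, layers, theta + np.pi / 2)
                  - expect_zz(n, layers, theta - np.pi / 2))


rng = np.random.default_rng(1234)
for n in (2, 4, 6):
    grads = [grad_theta(n, sample_layers(n, 20, rng), rng.uniform(0, 2 * np.pi))
             for _ in range(40)]
    print(n, np.var(grads))
```

With this few samples the printed variances are noisy estimates, so treat them as a rough illustration of the trend rather than a reproduction of the plot.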

3.1 Batch variance computation

Let's write a helper function that computes the variance of the gradient of a given observable over a batch of circuits:

def process_batch(circuits, symbol, op):
    """Compute the variance of the gradients of a batch of expectations w.r.t. op
    on each circuit that contains `symbol`. Note that this method sets up a new
    compute graph every time it is called so it isn't as performant as possible."""

    # Setup a simple layer to batch compute the expectation gradients.
    expectation = tfq.layers.Expectation()

    # Prep the inputs as tensors. One random symbol value is drawn per circuit,
    # so the batch size comes from the argument rather than a global variable.
    circuit_tensor = tfq.convert_to_tensor(circuits)
    values_tensor = tf.convert_to_tensor(
        np.random.uniform(0, 2 * np.pi, (len(circuits), 1)).astype(np.float32))

    # Use TensorFlow GradientTape to track gradients.
    with tf.GradientTape() as g:
        g.watch(values_tensor)
        forward = expectation(circuit_tensor,
                              operators=op,
                              symbol_names=[symbol],
                              symbol_values=values_tensor)

    # Return variance of gradients across all circuits.
    grads = g.gradient(forward, values_tensor)
    # reduce_variance (not reduce_std) so the result matches the name and plot label.
    grad_var = tf.math.reduce_variance(grads, axis=0)
    return grad_var.numpy()[0]

3.2 Set up and run

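Choose the number of random circuits to generate, along with their depth and the number of qubits they should act on. Then plot the results.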

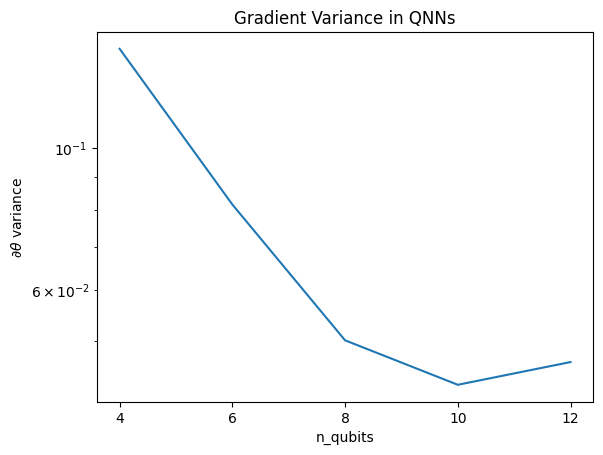

n_qubits = [2 * i for i in range(2, 7)]  # Ranges studied in paper are between 2 and 24.
depth = 50  # Ranges studied in paper are between 50 and 500.
n_circuits = 200
theta_var = []

for n in n_qubits:
    # Generate the random circuits and observable for the given n.
    qubits = cirq.GridQubit.rect(1, n)
    symbol = sympy.Symbol('theta')
    circuits = [
        generate_random_qnn(qubits, symbol, depth) for _ in range(n_circuits)
    ]
    op = cirq.Z(qubits[0]) * cirq.Z(qubits[1])
    theta_var.append(process_batch(circuits, symbol, op))

plt.semilogy(n_qubits, theta_var)
plt.title('Gradient Variance in QNNs')
plt.xlabel('n_qubits')
plt.xticks(n_qubits)
plt.ylabel('$\\partial \\theta$ variance')
plt.show()


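This plot shows that for quantum machine learning problems, you can't simply guess a random QNN ansatz and hope for the best. Some structure must be present in the model circuit for the gradients to vary enough that learning can happen.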
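To put a number on how quickly the curve falls, one option is to fit a straight line to the logarithm of the variance. The sketch below assumes a hypothetical helper `decay_rate` and is demonstrated on placeholder values that follow an exact \(2^{-n}\) decay; they are not measurements from this notebook.

```python
import numpy as np


def decay_rate(n_qubits, variances):
    """Least-squares slope and intercept of log2(variance) versus qubit count."""
    x = np.asarray(n_qubits, dtype=float)
    y = np.log2(np.asarray(variances, dtype=float))
    slope, intercept = np.polyfit(x, y, 1)
    return slope, intercept


# Placeholder inputs only: an exact 2**-n decay, not data from the plot above.
demo_n = [4, 6, 8, 10, 12]
demo_var = [2.0 ** -n for n in demo_n]
slope, _ = decay_rate(demo_n, demo_var)
print(slope)  # close to -1: the variance halves with each added qubit
```

In this notebook the same call would be `decay_rate(n_qubits, theta_var)`; a clearly negative slope corresponds to the exponential decay visible as a falling line on the semilog plot.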

4. Heuristics

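An interesting heuristic proposed by Grant (2019) allows one to start very close to random, but not quite. Using the same circuits as McClean et al., the authors propose a different initialization technique for the classical control parameters to avoid barren plateaus. The technique starts some layers with totally random control parameters, but in the layers immediately following, it chooses parameters such that the initial transformation made by the first few layers is undone. The authors call this an identity block.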

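The advantage of this heuristic is that by changing just a single parameter, all other blocks outside of the current block remain the identity, and the gradient signal comes through much stronger than before. This allows the user to pick and choose which variables and blocks to modify to get a strong gradient signal. The heuristic does not prevent the user from falling into a barren plateau during the training phase (and it restricts a fully simultaneous update); it only guarantees that you can start outside of a plateau.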

4.1 New QNN construction

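Now construct a function to generate identity block QNNs. This implementation is slightly different from the one in the paper. For now, look only at the behavior of the gradient of a single parameter, which keeps the setup consistent with McClean et al. and allows some simplifications.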

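To generate an identity block and train the model, you generally need \(U_1(\theta_{1a}) U_1(\theta_{1b})^{\dagger}\) and not \(U_1(\theta_1) U_1(\theta_1)^{\dagger}\). Initially \(\theta_{1a}\) and \(\theta_{1b}\) are the same angles, but they are learned independently. Otherwise, you would always get the identity, even after training. The choice of the number of identity blocks is empirical. The deeper the block, the smaller the variance in the middle of the block. At the start and end of the block, however, the variance of the parameter gradients should be large.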
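A single-qubit toy case shows why the two angles must be independent. The sketch below is an illustration under stated assumptions rather than the construction used in this notebook: the block unitary is taken to be a single Ry rotation, the observable is Z, and the names `ry` and `expect_z` are hypothetical.

```python
import numpy as np

Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def ry(angle):
    """exp(-i * angle / 2 * Y), matching the cirq.ry convention."""
    return np.cos(angle / 2) * np.eye(2) - 1j * np.sin(angle / 2) * Y


def expect_z(theta_a, theta_b):
    """<Z> after Ry(pi/4) state prep, then U(theta_a), then U(theta_b)^dagger."""
    state = ry(np.pi / 4) @ np.array([1, 0], dtype=complex)
    state = ry(theta_b).conj().T @ ry(theta_a) @ state
    return float(np.real(np.vdot(state, Z @ state)))


theta = 1.234  # shared initial value of theta_a and theta_b
block = ry(theta).conj().T @ ry(theta)
print(np.allclose(block, np.eye(2)))  # True: the block starts as the identity

# Parameter-shift derivative with respect to theta_a only (theta_b held fixed).
grad_a = 0.5 * (expect_z(theta + np.pi / 2, theta)
                - expect_z(theta - np.pi / 2, theta))
print(grad_a)  # about -0.707, i.e. -sin(pi/4)
```

The block acts as the identity at initialization, yet the derivative with respect to \(\theta_{1a}\) alone is far from zero. If both angles were tied to one parameter, the block would stay the identity for every value and contribute no gradient at all.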

def generate_identity_qnn(qubits, symbol, block_depth, total_depth):
    """Generate random QNN's with the same structure from Grant et al."""
    circuit = cirq.Circuit()

    # Generate initial block with symbol.
    prep_and_U = generate_random_qnn(qubits, symbol, block_depth)
    circuit += prep_and_U

    # Generate dagger of initial block without symbol.
    U_dagger = (prep_and_U[1:])**-1
    circuit += cirq.resolve_parameters(
        U_dagger, param_resolver={symbol: np.random.uniform() * 2 * np.pi})

    for d in range(total_depth - 1):
        # Get a random QNN.
        prep_and_U_circuit = generate_random_qnn(
            qubits,
            np.random.uniform() * 2 * np.pi, block_depth)

        # Remove the state-prep component
        U_circuit = prep_and_U_circuit[1:]

        # Add U
        circuit += U_circuit

        # Add U^dagger
        circuit += U_circuit**-1

    return circuit


generate_identity_qnn(cirq.GridQubit.rect(1, 3), sympy.Symbol('theta'), 2, 2)

4.2 Comparison

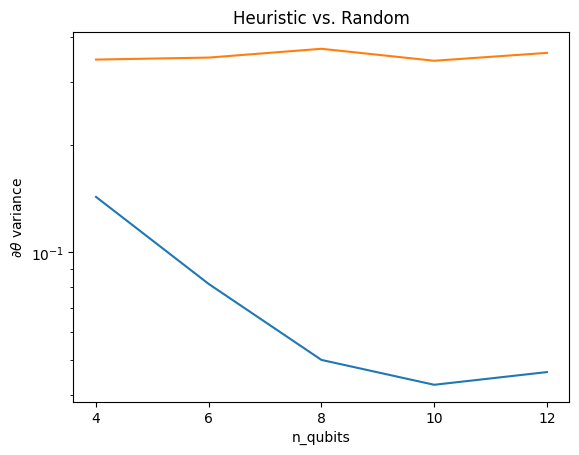

Here you can see that the heuristic does help keep the variance of the gradient from vanishing as quickly:

block_depth = 10
total_depth = 5

heuristic_theta_var = []

for n in n_qubits:
    # Generate the identity block circuits and observable for the given n.
    qubits = cirq.GridQubit.rect(1, n)
    symbol = sympy.Symbol('theta')
    circuits = [
        generate_identity_qnn(qubits, symbol, block_depth, total_depth)
        for _ in range(n_circuits)
    ]
    op = cirq.Z(qubits[0]) * cirq.Z(qubits[1])
    heuristic_theta_var.append(process_batch(circuits, symbol, op))

# Label both curves so the random and heuristic results can be told apart.
plt.semilogy(n_qubits, theta_var, label='Random')
plt.semilogy(n_qubits, heuristic_theta_var, label='Heuristic')
plt.title('Heuristic vs. Random')
plt.xlabel('n_qubits')
plt.xticks(n_qubits)
plt.ylabel('$\\partial \\theta$ variance')
plt.legend()
plt.show()


This is a great improvement in getting stronger gradient signals from (near) random QNNs.