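Overview

TFL premade aggregate function models are a quick and easy way to build TFL keras.Model instances for learning complex aggregation functions. This guide outlines the steps needed to construct a TFL premade aggregate function model and then train and test it.

Setup

Install the TF Lattice package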

pip install --pre -U tensorflow tf-keras tensorflow-lattice pydot graphviz

Import required packages

import tensorflow as tf

import collections

import logging

import numpy as np

import pandas as pd

import sys

import tensorflow_lattice as tfl

logging.disable(sys.maxsize)

# Use Keras 2.
version_fn = getattr(tf.keras, "version", None)
if version_fn and version_fn().startswith("3."):
  import tf_keras as keras
else:
  keras = tf.keras

Download the Puzzles dataset

train_dataframe = pd.read_csv(

'https://raw.githubusercontent.com/wbakst/puzzles_data/master/train.csv')

train_dataframe.head()

test_dataframe = pd.read_csv(

'https://raw.githubusercontent.com/wbakst/puzzles_data/master/test.csv')

test_dataframe.head()

Extract and convert features and labels

# Features:

# - star_rating rating out of 5 stars (1-5)

# - word_count number of words in the review

# - is_amazon 1 = reviewed on amazon; 0 = reviewed on artifact website

# - includes_photo if the review includes a photo of the puzzle

# - num_helpful number of people that found this review helpful

# - num_reviews total number of reviews for this puzzle (we construct)

#

# This ordering of feature names will be the exact same order that we construct

# our model to expect.

feature_names = [

'star_rating', 'word_count', 'is_amazon', 'includes_photo', 'num_helpful',

'num_reviews'

]

def extract_features(dataframe, label_name):
  # First we extract flattened features.
  flattened_features = {
      feature_name: dataframe[feature_name].values.astype(float)
      for feature_name in feature_names[:-1]
  }

  # Construct mapping from puzzle name to feature.
  star_rating = collections.defaultdict(list)
  word_count = collections.defaultdict(list)
  is_amazon = collections.defaultdict(list)
  includes_photo = collections.defaultdict(list)
  num_helpful = collections.defaultdict(list)
  labels = {}

  # Extract each review.
  for i in range(len(dataframe)):
    row = dataframe.iloc[i]
    puzzle_name = row['puzzle_name']
    star_rating[puzzle_name].append(float(row['star_rating']))
    word_count[puzzle_name].append(float(row['word_count']))
    is_amazon[puzzle_name].append(float(row['is_amazon']))
    includes_photo[puzzle_name].append(float(row['includes_photo']))
    num_helpful[puzzle_name].append(float(row['num_helpful']))
    labels[puzzle_name] = float(row[label_name])

  # Organize data into list of list of features.
  names = list(star_rating.keys())
  star_rating = [star_rating[name] for name in names]
  word_count = [word_count[name] for name in names]
  is_amazon = [is_amazon[name] for name in names]
  includes_photo = [includes_photo[name] for name in names]
  num_helpful = [num_helpful[name] for name in names]
  num_reviews = [[len(ratings)] * len(ratings) for ratings in star_rating]
  labels = [labels[name] for name in names]

  # Flatten num_reviews
  flattened_features['num_reviews'] = [len(reviews) for reviews in num_reviews]

  # Convert data into ragged tensors.
  star_rating = tf.ragged.constant(star_rating)
  word_count = tf.ragged.constant(word_count)
  is_amazon = tf.ragged.constant(is_amazon)
  includes_photo = tf.ragged.constant(includes_photo)
  num_helpful = tf.ragged.constant(num_helpful)
  num_reviews = tf.ragged.constant(num_reviews)
  labels = tf.constant(labels)

  # Now we can return our extracted data.
  return (star_rating, word_count, is_amazon, includes_photo, num_helpful,
          num_reviews), labels, flattened_features

train_xs, train_ys, flattened_features = extract_features(train_dataframe, 'Sales12-18MonthsAgo')

test_xs, test_ys, _ = extract_features(test_dataframe, 'SalesLastSixMonths')


# Let's define our label minimum and maximum.

min_label, max_label = float(np.min(train_ys)), float(np.max(train_ys))


Set the default values used for training in this guide

LEARNING_RATE = 0.1

BATCH_SIZE = 128

NUM_EPOCHS = 500

MIDDLE_DIM = 3

MIDDLE_LATTICE_SIZE = 2

MIDDLE_KEYPOINTS = 16

OUTPUT_KEYPOINTS = 8

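Feature Configs

Feature calibration and per-feature configuration are set using tfl.configs.FeatureConfig. Feature configs include monotonicity constraints, per-feature regularization (see tfl.configs.RegularizerConfig), and lattice sizes for lattice models.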
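To make "calibration" and "monotonicity" concrete, here is a minimal pure-Python sketch of a piecewise-linear calibrator. It is an illustration only, not the TFL implementation: the helper names `pwl_calibrate` and `is_increasing` and the keypoint values are hypothetical, and clamping inputs outside the keypoint range is an assumption of this sketch.

```python
from bisect import bisect_right


def pwl_calibrate(x, input_keypoints, output_keypoints):
    """Piecewise-linear interpolation; inputs outside the range are clamped (assumed)."""
    if x <= input_keypoints[0]:
        return output_keypoints[0]
    if x >= input_keypoints[-1]:
        return output_keypoints[-1]
    # Index of the segment [input_keypoints[i], input_keypoints[i + 1]) that holds x.
    i = bisect_right(input_keypoints, x) - 1
    x0, x1 = input_keypoints[i], input_keypoints[i + 1]
    y0, y1 = output_keypoints[i], output_keypoints[i + 1]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def is_increasing(output_keypoints):
    """An 'increasing' calibrator needs non-decreasing output keypoints."""
    return all(a <= b for a, b in zip(output_keypoints, output_keypoints[1:]))


# Hypothetical keypoints for a 1-5 star rating (illustrative, not learned values).
star_inputs = [1.0, 2.0, 3.0, 4.0, 5.0]
star_outputs = [0.0, 0.1, 0.3, 0.7, 1.0]

calibrated = pwl_calibrate(3.5, star_inputs, star_outputs)  # halfway between 0.3 and 0.7
```

In the real model the output keypoints are trainable parameters; a monotonicity constraint keeps them ordered during training so that a higher input never yields a lower calibrated value.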

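Note that we must fully specify the feature config for every feature we want the model to recognize. Otherwise the model has no way of knowing that the feature exists. For aggregate models, these features are automatically taken into account and handled as ragged features.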
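"Ragged" here means that each example (a puzzle) carries a variable number of items (its reviews). The following pure-Python sketch mirrors the grouping done in extract_features, using nested lists instead of ragged tensors. The review rows and puzzle names are hypothetical.

```python
from collections import defaultdict

# Hypothetical review rows: (puzzle_name, star_rating, word_count).
reviews = [
    ("NightHawks", 5.0, 108.0),
    ("NightHawks", 4.0, 12.0),
    ("Kanagawa", 3.0, 40.0),
    ("NightHawks", 5.0, 51.0),
]

star_rating = defaultdict(list)
word_count = defaultdict(list)
for puzzle_name, stars, words in reviews:
    star_rating[puzzle_name].append(stars)
    word_count[puzzle_name].append(words)

# Dicts keep insertion order, so names follow first appearance of each puzzle.
names = list(star_rating)
ragged_star_rating = [star_rating[name] for name in names]
ragged_word_count = [word_count[name] for name in names]

# A per-puzzle feature is repeated once per review so that every feature
# has the same ragged shape.
ragged_num_reviews = [[len(r)] * len(r) for r in ragged_star_rating]
```

Each inner list has one entry per review, so the first puzzle contributes three entries to every feature and the second contributes one.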

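Compute quantiles

Although the default setting for pwl_calibration_input_keypoints in tfl.configs.FeatureConfig is 'quantiles', for premade models we have to define the input keypoints manually. To do so, we first define our own helper function for computing quantiles.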

def compute_quantiles(features,
                      num_keypoints=10,
                      clip_min=None,
                      clip_max=None,
                      missing_value=None):
  # Clip min and max if desired.
  if clip_min is not None:
    features = np.maximum(features, clip_min)
    features = np.append(features, clip_min)
  if clip_max is not None:
    features = np.minimum(features, clip_max)
    features = np.append(features, clip_max)
  # Make features unique.
  unique_features = np.unique(features)
  # Remove missing values if specified.
  if missing_value is not None:
    unique_features = np.delete(unique_features,
                                np.where(unique_features == missing_value))
  # Compute and return quantiles over unique non-missing feature values.
  # `method=` replaces the deprecated `interpolation=` argument (NumPy >= 1.22).
  return np.quantile(
      unique_features,
      np.linspace(0., 1., num=num_keypoints),
      method='nearest').astype(float)
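As a rough illustration of what the helper computes, here is a pure-Python sketch that picks keypoints at evenly spaced ranks among the sorted unique values. The function name `nearest_quantile_keypoints` and the data are hypothetical, clipping is omitted, and rounding of exact ties may differ from NumPy's 'nearest' behavior.

```python
def nearest_quantile_keypoints(values, num_keypoints, missing_value=None):
    """Pick num_keypoints (>= 2) values at evenly spaced ranks of the sorted unique inputs."""
    unique_values = sorted(set(values))
    if missing_value is not None:
        unique_values = [v for v in unique_values if v != missing_value]
    last = len(unique_values) - 1
    keypoints = []
    for i in range(num_keypoints):
        # Fractional rank of the i-th quantile, rounded to the nearest observation.
        rank = round(i * last / (num_keypoints - 1))
        keypoints.append(float(unique_values[rank]))
    return keypoints


# Hypothetical word counts; -1 marks a missing value.
word_counts = [0, 3, 3, 7, 10, 10, 12, 20, 50, -1]
keypoints = nearest_quantile_keypoints(
    word_counts, num_keypoints=4, missing_value=-1)
print(keypoints)  # [0.0, 7.0, 12.0, 50.0]
```

Deduplicating first makes repeated keypoints less likely, although they can still occur when num_keypoints exceeds the number of unique values.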

Define our feature configs

Now that we can compute quantiles, we define a feature config for each feature that we want the model to take as input.

# Feature configs are used to specify how each feature is calibrated and used.
feature_configs = [
    tfl.configs.FeatureConfig(
        name='star_rating',
        lattice_size=2,
        monotonicity='increasing',
        pwl_calibration_num_keypoints=5,
        pwl_calibration_input_keypoints=compute_quantiles(
            flattened_features['star_rating'], num_keypoints=5),
    ),
    tfl.configs.FeatureConfig(
        name='word_count',
        lattice_size=2,
        monotonicity='increasing',
        pwl_calibration_num_keypoints=5,
        pwl_calibration_input_keypoints=compute_quantiles(
            flattened_features['word_count'], num_keypoints=5),
    ),
    tfl.configs.FeatureConfig(
        name='is_amazon',
        lattice_size=2,
        num_buckets=2,
    ),
    tfl.configs.FeatureConfig(
        name='includes_photo',
        lattice_size=2,
        num_buckets=2,
    ),
    tfl.configs.FeatureConfig(
        name='num_helpful',
        lattice_size=2,
        monotonicity='increasing',
        pwl_calibration_num_keypoints=5,
        pwl_calibration_input_keypoints=compute_quantiles(
            flattened_features['num_helpful'], num_keypoints=5),
        # Larger num_helpful indicating more trust in star_rating.
        reflects_trust_in=[
            tfl.configs.TrustConfig(
                feature_name="star_rating", trust_type="trapezoid"),
        ],
    ),
    tfl.configs.FeatureConfig(
        name='num_reviews',
        lattice_size=2,
        monotonicity='increasing',
        pwl_calibration_num_keypoints=5,
        pwl_calibration_input_keypoints=compute_quantiles(
            flattened_features['num_reviews'], num_keypoints=5),
    )
]


Aggregate Function Model

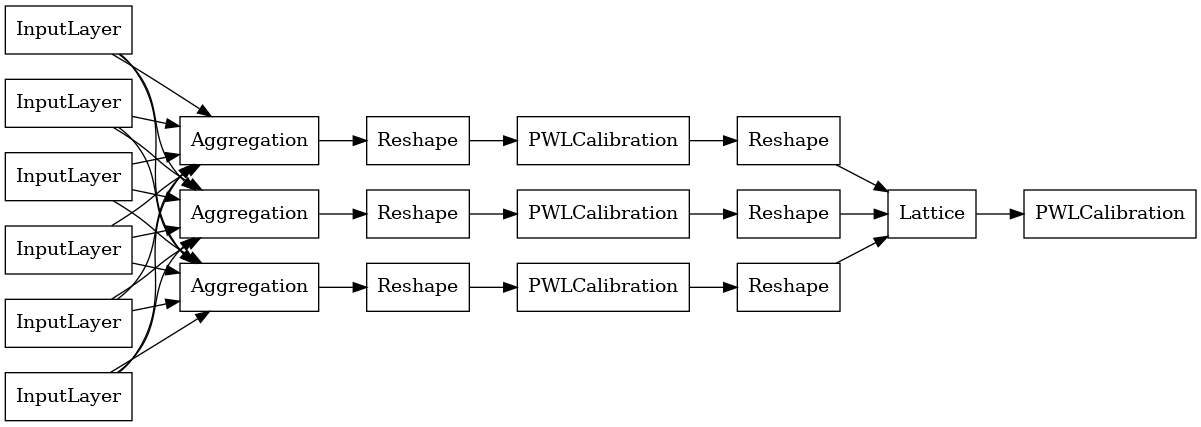

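To construct a TFL premade model, first construct a model configuration from tfl.configs. An aggregate function model is constructed using tfl.configs.AggregateFunctionConfig. It applies piecewise-linear and categorical calibration, followed by a lattice model on each dimension of the ragged input. It then applies an aggregation layer over the output for each dimension. This is followed by an optional output piecewise-linear calibration.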

# Model config defines the model structure for the aggregate function model.
aggregate_function_model_config = tfl.configs.AggregateFunctionConfig(
    feature_configs=feature_configs,
    middle_dimension=MIDDLE_DIM,
    middle_lattice_size=MIDDLE_LATTICE_SIZE,
    middle_calibration=True,
    middle_calibration_num_keypoints=MIDDLE_KEYPOINTS,
    middle_monotonicity='increasing',
    output_min=min_label,
    output_max=max_label,
    output_calibration=True,
    output_calibration_num_keypoints=OUTPUT_KEYPOINTS,
    output_initialization=np.linspace(
        min_label, max_label, num=OUTPUT_KEYPOINTS))

# An AggregateFunction premade model constructed from the given model config.
aggregate_function_model = tfl.premade.AggregateFunction(
    aggregate_function_model_config)

# Let's plot our model.
keras.utils.plot_model(
    aggregate_function_model, show_layer_names=False, rankdir='LR')

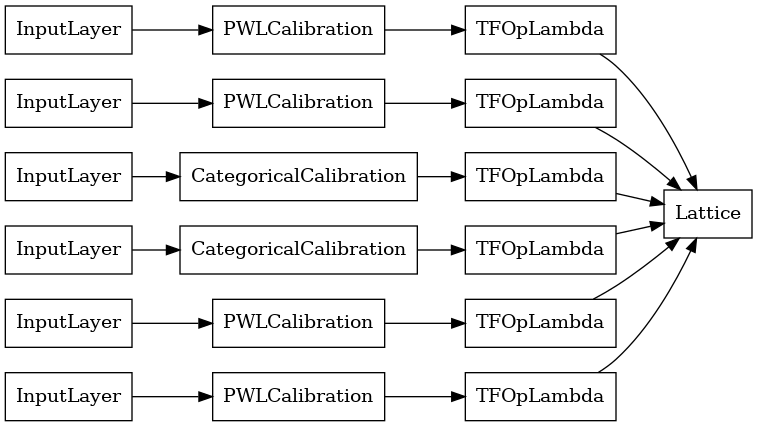
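For intuition about the lattice blocks in the diagram, the sketch below evaluates a lattice with lattice_size=2 over two calibrated inputs using bilinear interpolation. It is a simplified illustration under stated assumptions: the function name `lattice_2x2` and the vertex values are hypothetical, and the actual model uses one lattice dimension per configured feature rather than two.

```python
def lattice_2x2(x, y, params):
    """Bilinear interpolation over a 2x2 lattice.

    x and y are calibrated inputs in [0, 1];
    params[i][j] is the lattice value at vertex (x=i, y=j).
    """
    return (params[0][0] * (1 - x) * (1 - y)
            + params[0][1] * (1 - x) * y
            + params[1][0] * x * (1 - y)
            + params[1][1] * x * y)


# Hypothetical vertex values that increase along both inputs.
params = [[0.0, 0.4],
          [0.6, 1.0]]

corner = lattice_2x2(1.0, 0.0, params)  # exactly the vertex value params[1][0]
center = lattice_2x2(0.5, 0.5, params)  # average of the four vertex values
```

With vertex values that increase along an axis, the interpolated output is also increasing in that input, which is one way to picture how a monotonicity constraint on a feature can be expressed in a lattice.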

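The output of each aggregation layer is the averaged output of a calibrated lattice over the ragged inputs. Here is the model used inside the first aggregation layer: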

aggregation_layers = [
    layer for layer in aggregate_function_model.layers
    if isinstance(layer, tfl.layers.Aggregation)
]

keras.utils.plot_model(
    aggregation_layers[0].model, show_layer_names=False, rankdir='LR')
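The averaging performed by an aggregation layer can be sketched in plain Python: apply the same per-review function to every review of a puzzle, then take the mean for that puzzle. The names `aggregate_mean` and `toy_review_score` are hypothetical, and the scoring function is only a stand-in for the calibrated lattice.

```python
def aggregate_mean(ragged_inputs, per_item_fn):
    """Apply per_item_fn to each item of every variable-length row, then average per row."""
    outputs = []
    for row in ragged_inputs:
        scores = [per_item_fn(item) for item in row]
        outputs.append(sum(scores) / len(scores))
    return outputs


def toy_review_score(review):
    """Hypothetical stand-in for the calibrated lattice: maps 1-5 stars to [0, 1]."""
    (stars,) = review
    return (stars - 1.0) / 4.0


# Two puzzles with three reviews and one review; each review holds one feature here.
ragged_reviews = [[(5.0,), (3.0,), (1.0,)], [(4.0,)]]
print(aggregate_mean(ragged_reviews, toy_review_score))  # [0.5, 0.75]
```

Because the result is a mean, a puzzle yields one fixed-size output no matter how many reviews it has.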

Now, as with any other keras.Model, we compile and fit the model to our data.

aggregate_function_model.compile(
    loss='mae',
    optimizer=keras.optimizers.Adam(LEARNING_RATE))

aggregate_function_model.fit(
    train_xs, train_ys, epochs=NUM_EPOCHS, batch_size=BATCH_SIZE, verbose=False)

<tf_keras.src.callbacks.History at 0x7ff66c544700>

After training our model, we can evaluate it on our test set.

print('Test Set Evaluation...')

print(aggregate_function_model.evaluate(test_xs, test_ys))

Test Set Evaluation...
7/7 [==============================] - 2s 3ms/step - loss: 47.7068
47.70683670043945
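Because the model was compiled with loss='mae', the number printed by evaluate is the mean absolute error on the test set, in the same units as the label. A minimal sketch of that metric follows; the labels and predictions are hypothetical.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between labels and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical sales labels and model predictions.
y_true = [120.0, 80.0, 200.0]
y_pred = [100.0, 90.0, 230.0]

mae = mean_absolute_error(y_true, y_pred)
print(mae)  # 20.0
```

Read this way, a test loss of about 47.7 means the predictions are off by roughly 47.7 label units on average.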